
SLM


Service Level Management, whether expressed as Service Level Agreements, Service Catalogues, Schedules of Intent, contracts or even licences, is more important than the documents themselves suggest. Service Level Management is a core module and probably the first point of reference when implementing ITIL within an organization. Unless it is regularly monitored and reviewed, underperformance or undisciplined expansion can choke the effectiveness of management and jeopardize the whole organization.

This process needs to be rewritten for conformance with ITIL Version 3. This involves moving the Service Catalogue, Service Reporting and Service Improvement functions/sub-processes into other Version 3 processes.


Introduction to Service Level Management

Service Level Management is a process within the Service Design stage of ITIL Version 3.

| The most overlooked process, the Cinderella of all Processes, is the Service Level Management (SLM). Service Level Management is the process of planning, coordinating, drafting, agreeing, monitoring and reporting on Service Level Agreements (SLAs), and the ongoing review of actual service achievement to ensure that the required and cost-justifiable quality of service is maintained and improved.

Darreck Lisle, The Overlooked Process - SLM, BetterManagement.com

|

In an ideal setting, the levels and kinds of IT service provided are perfectly aligned with business requirements. Few organizations, however, have the organizational capabilities to perfectly align all aspects of their operations, IT included, with strategic goals and intents. The organization should therefore carefully construct and institutionalize structures and processes to facilitate alignment.

This exercise will never be undertaken in the absence of existing governance and communication structures. Consequently, most organizations will start by understanding their AS-IS situation with regard to service offerings. Developing the Service Catalogue is an important first step in promoting widespread understanding of service offerings.

The TO-BE model is then determined in negotiation with business leaders, and the SLA is developed on the basis of these requirements and priorities. Service variations and nuances are determined, and performance measures are established and agreed upon. Services are monitored and measured against the agreed SLA criteria to ensure compliance. Service level monitoring entails continual measurement of mutually agreed-upon service-level thresholds and the initiation of corrective action if the thresholds are breached.
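As a minimal sketch of the monitoring step, assuming availability is the agreed measure and downtime is recorded in minutes per reporting period, the Python below compares measured availability with an SLA target and lists the periods that call for corrective action. The record layout, function names and the 99.5% target are hypothetical, not taken from any particular SLA.

```python
from dataclasses import dataclass

@dataclass
class PeriodMeasurement:
    """Outage data for one reporting period (hypothetical record layout)."""
    period: str
    agreed_minutes: int   # agreed service hours in the period, expressed in minutes
    outage_minutes: int   # unplanned downtime during those agreed hours

def availability_pct(m: PeriodMeasurement) -> float:
    """Availability = (agreed service time - downtime) / agreed service time, as a %."""
    return 100.0 * (m.agreed_minutes - m.outage_minutes) / m.agreed_minutes

def periods_needing_action(measurements, target_pct):
    """Periods whose measured availability fell below the agreed target."""
    return [m.period for m in measurements if availability_pct(m) < target_pct]

# Two illustrative 30-day periods of 24x7 service (43,200 minutes each).
history = [
    PeriodMeasurement("P1", 43_200, 120),   # about 99.72% available
    PeriodMeasurement("P2", 43_200, 300),   # about 99.31% available
]
print(periods_needing_action(history, target_pct=99.5))   # ['P2']
```

The threshold comparison is deliberately simple; in practice the measurement source and the definition of "agreed service time" are themselves items to negotiate in the SLA.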

Service Level Management allows the IT department to meet business expectations and opens a dialog to confirm these expectations.


Service Level Management

| Proactive service level management is a combination of structuring the right organization, ensuring that the staff have appropriate skills, defining and implementing the right methodology and procedures, and using an appropriate management solution to monitor and improve service quality.

Sturm, Morris, Jander, Foundations of Service Level Management, SAMS, 2000, ISBN: 0672317435, p.182

|

Many organizations have embraced ITIL as a framework for improving IT Service Management processes. These organizations traditionally start by listing a number of 'gaps' between their current practices and ITIL best practices, and then recommend improvements to close these gaps.

Key to closing those gaps are the following kinds of measures:

- gaining some "quick wins" (i.e., return on investment) by using ITIL practices. In most ITIL implementation scenarios, quick wins are obtained by introducing processes that require only modest levels of organizational maturity (as defined in CMMI - see Glossary),

- employing standard, defined and managed processes to reduce service variations in the delivery of IT services,

- focusing on performance reporting as a key enabler of continuous service improvement,

- focusing on customer communications to improve customers' opinion of the services delivered,

- marketing IT Service Provider services as a vehicle for leveraging the original ITIL investment, gaining recognition for the IT Service Provider and further disseminating the benefits throughout the organization.

While each of the above measures will permit modest benefits, greater rewards can be sought by integrating the separate ITIL processes into an overall service management concept, with service level management (SLM) as the integrating concept. SLM highlights the point that the level at which services are provided is geared to the business's need for them. SLM puts the relationship between providers and consumers of IT services on a more professional basis, describing service offerings, their costs and continuing performance in negotiated agreements that are continually monitored for compliance. Accurately defining the relationship between the IT Service Provider and its line of business (LOB) partners allows the LOB IT providers to better describe their IT service provisioning to their customers, the ultimate payers for products and services.

Critical Success Factors

- The reliance of critical business processes on IT is defined and is covered by SLAs,

- There are governance structures and a service culture within the organization which can be exploited to implement SLM concepts and approaches,

- The IT provider demonstrates its commitment through Service Catalogue and OLO statements,

- OLOs and SLOs are expressed in end-user business terms,

- Measures meet the metrics test outlined in Measurement,

- Root cause analysis is performed when service level breaches occur,

- Skills and tools are available to provide useful and timely service level information,

- There is a demonstrable relationship between service provisioning and the costs of providing services,

- A system for tracking and following individual changes to services is available.


Scope

The Service Level Management (SLM) process manages the amount and quality of IT service delivered in accordance with established agreements amongst all participants in the service delivery chain. SLM is a key process in ensuring alignment between IT service delivery and business goals and objectives at agreed-upon costs.

In Scope

- Services are described in a service catalogue. One service may form part of another service. The description of each service is the responsibility of the Service Owner. The description is maintained as a Configuration Item (CI) and managed through Change Control procedures. Service Provisioning is described at offered levels, with a service chain describing participants and their obligations in the provision of the overall service. The costs and charge-back methods are described for the general offering and for all service variations.

- For direct customers, service levels are negotiated and described as Service Level Requirements (SLRs). SLRs are identified on the basis of the existing and anticipated technology needs of the supported corporate lines of business. The results of these negotiations are recorded in Service Level Agreements (SLAs).

- For supported lines of business (LOBs), an Operational Level Agreement (OLA) describes the provision of all IT services. The description of the service chains associated with services in the Service Catalogue identifies where OLAs are required, so that participants in each service chain understand their obligations towards meeting the overall performance goals for the service.

- External Service providers will have their expectations and obligations detailed in Underpinning Contracts (UCs). OLAs and UCs have Service Owners responsible for their integrity and updating and are maintained as Configuration Items (CIs) under Change Control.

- Ongoing negotiation and liaison with customers on service matters through Account representatives.

- Monitoring and reporting of performance against the service and operational level objectives (SLOs, OLOs), on both a real-time and periodic basis, by the account representative.

- The coordination of agent deployment throughout the infrastructure to provide performance data on CIs within the IT provider's sphere of responsibility.
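The service chain idea implies that each participant's operational level objective contributes to the end-to-end result. A common simplification, assuming the participants act in series and fail independently, is that end-to-end availability is the product of the individual availabilities. The sketch below uses hypothetical chain members and OLO figures to show why each OLO must be tighter than the SLO it underpins.

```python
from math import prod

# Hypothetical service chain: availability OLO of each participant (as a fraction).
service_chain = {
    "network": 0.999,
    "data centre hosting": 0.998,
    "application support": 0.995,
}

def end_to_end_availability(olos):
    """Serial chain with independent failures: multiply the participants' availabilities."""
    return prod(olos.values())

def supports_slo(olos, slo):
    """True if the OLOs, when all are met, are sufficient to deliver the end-to-end SLO."""
    return end_to_end_availability(olos) >= slo

print(f"{end_to_end_availability(service_chain):.4%}")   # 99.2017%
print(supports_slo(service_chain, 0.995))                # False
```

Here three individually respectable OLOs still cannot underpin a 99.5% SLO; redundant (parallel) components or shared failure causes would need a different model.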

Usually Excluded

- SLAs negotiated by LOB IT service providers fall within their respective domains, though the OLA negotiated with the IT service provider will provide important performance descriptions that should be reflected in these documents.

- Day-to-day service provision is the responsibility of operational units.

- Service planning is traditionally covered by other bodies. Account representatives should remain aware of these endeavors in order to keep their portfolio LOBs informed of developments and to ensure that LOB interests are represented in planning deliberations.

- Customer Relationship Management (CRM), as a disciplined, quantitatively measured process of maintaining relevant information on customer habits, is the domain of the primary LOB. Applying CRM concepts to the relationship between the IT service provider and its Customers is considered beyond the scope of this Service Level Management implementation.

Relationship to Other Processes

Configuration Management

Service Catalogue entries, SLAs, OLAs and Underpinning Contracts are all maintained as part of a Process Library and recorded as Configuration Items (CIs) in the CMDB.

Change Management

Service Catalogue entries, SLAs, OLAs and Underpinning Contracts are subject to Change Management procedures.

Service Request

The Service Request process logs requests for service access. The Service Catalogue acts as the central point of reference for the request process and outlines how services should be accessed, what to expect of service delivery and what actions can be invoked to escalate unfulfilled requests.

Availability and Service Continuity Management

Availability management and service continuity management are closely related as both processes strive to mitigate risks to the availability of IT Services. The prime focus of availability management is handling the routine risks to availability that can be expected on a day-to-day basis or risks for which the cost of an availability solution is justified. Service continuity management caters to more extreme, expensive, or unanticipated availability risks.

To determine the requirements it may need to address in a service continuity effort, an organization must first understand the business impact of a failure: what will happen if a process, person, or technology fails? This examination is called a business impact analysis. From this analysis, closer attention is then applied to identifying impact points, and a business impact assessment is done. This assessment identifies the actual risks that a specific process may face. The results of both are then used to mitigate those risks with sound strategies.

A key driver in determining the requirements is how much the company stands to lose as a result of a disaster or other incident and the speed of escalation of these losses. The purpose of a business impact analysis is to assess this through identifying:

- Critical business processes

- The potential damage or loss that may be caused to the organization as a result of a disruption to critical business processes

- The form that the damage or loss may take including lost income, additional costs, damage to reputation, loss of goodwill, and loss of competitive advantage

- How the degree of damage or loss is likely to escalate after an incident

- The staffing, skills, facilities, and services necessary to enable critical and essential business processes to continue operating at a minimum acceptable level

- The time within which minimum levels of staffing, facilities, and services should be recovered to normal levels

- The time within which all required business processes and supporting staff, facilities, and services should be fully recovered.

The last three items provide drivers for the level of mechanisms that need to be considered or deployed. Once presented with these options, the organization may decide that lower levels of service or increased delays are more acceptable based upon a cost/benefit analysis. The level needed is recorded and agreed upon within an SLA.

These definitions and their components enable the mapping of critical service, application, and infrastructure components, thus helping to identify the elements that an organization needs to provide. The requirements are ranked, and the associated elements are confirmed and prioritized in terms of risk assessment/reduction and recovery planning.

Impacts are measured against particular scenarios for each customer, such as an inability to receive raw materials or to deliver products or services. They typically fall into one or more of the following categories:

- Failure to achieve agreed service levels with the customer(s)

- Financial loss

- Immediate and long-term loss of market share

- Breach of law, regulations, or standards

- Risk to personal safety

- Political, corporate, or personal embarrassment

- Loss of goodwill, credibility, image, and/or reputation

- Loss of operational capability, for example in a command and control environment

This process enables a company to understand at what point the unavailability of a service would become unacceptable.
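To make "the point at which unavailability becomes unacceptable" concrete, here is a minimal sketch assuming the business impact analysis has produced cumulative loss estimates at a few outage durations (the figures are invented for illustration) and a hypothetical maximum tolerable loss. It finds the longest outage the business can absorb by linear interpolation between the estimates; that duration is one input when the required recovery level is recorded in the SLA.

```python
from bisect import bisect_right

# Hypothetical BIA estimates: (hours since the incident, cumulative loss).
# Losses are assumed to be non-decreasing over time.
loss_curve = [(0, 0), (4, 10_000), (24, 80_000), (72, 400_000)]

def max_tolerable_outage(curve, tolerable_loss):
    """Hours of outage before cumulative loss exceeds tolerable_loss.

    Interpolates linearly between the BIA estimates. Returns None when the
    tolerable loss is not exceeded within the assessed horizon.
    """
    if tolerable_loss < curve[0][1]:
        return 0.0
    losses = [loss for _, loss in curve]
    i = bisect_right(losses, tolerable_loss)   # first estimate above the tolerance
    if i == len(curve):
        return None
    (h0, l0), (h1, l1) = curve[i - 1], curve[i]
    return h0 + (h1 - h0) * (tolerable_loss - l0) / (l1 - l0)

print(round(max_tolerable_outage(loss_curve, 50_000), 1))   # 15.4
```

Linear interpolation is a simplifying assumption; the shape of the real loss curve between estimates is exactly what the "how the degree of damage or loss is likely to escalate" item of the analysis is meant to establish.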

Capacity Management

Capacity management ensures that the system can meet both existing and future capacity requirements for the users. If a system cannot meet the needs of the organization, it is of little use. The present and future capacity requirements for a service are captured in SLAs. These requirements are broken down into individual OLAs for each layer in the IT infrastructure.
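As an illustration of breaking an SLA requirement down into per-layer OLAs, the sketch below apportions an end-to-end response-time target across infrastructure layers in proportion to each layer's currently observed share of the time. Proportional allocation is only one possible policy, chosen here for simplicity; the layer names, timings and the 2,000 ms target are hypothetical.

```python
def allocate_response_budget(sla_target_ms, observed_ms):
    """Split an end-to-end response-time target into per-layer OLA budgets.

    Each layer receives a share of the target proportional to its observed
    contribution, so the budgets add up to the SLA target.
    """
    total_observed = sum(observed_ms.values())
    return {
        layer: sla_target_ms * t / total_observed
        for layer, t in observed_ms.items()
    }

# Hypothetical measurements of where time is currently spent per transaction.
observed = {"network": 150, "application server": 450, "database": 400}
budgets = allocate_response_budget(2000, observed)
for layer, budget in budgets.items():
    print(f"{layer}: OLA target {budget:.0f} ms")
# network: OLA target 300 ms
# application server: OLA target 900 ms
# database: OLA target 800 ms
```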

Financial Management

While many IT services are important to the business, few are so critical that they must be available "at any cost." Financial management acts as a filter, ensuring that the needs of the users justify the cost of the solution required to meet them.

Any ITIL Discipline may constitute a unique service which is described in the Service Catalogue and may form part of SLAs and OLAs.


- The IT provider should publish its service offerings in catalogues describing services, variations, costs, expected performance and the obligations of all participants in the provision of the service.

- The level of current services should be delineated in SLAs with corporate clients and in OLAs between the primary IT service provider and its current customer base.

- Service variations should be negotiated between the primary IT service provider and the lines of business contracting for them. The costs associated with standard services, including costing algorithms, will be negotiated and detailed in the Service Catalogue. The costs of variations will be detailed in the same Catalogue and tracked closely.

- The performance and behavior expectations of all internal participants involved in providing a service should be negotiated amongst the participants and detailed in Operational Level Agreements between senior leadership and operational units.

- The expectations of all external service providers should be set out in Underpinning Contracts (UCs) maintained by Service Level Management on behalf of the IT service provider.

- OLAs and UCs should be constructed in a manner that facilitates the collection and reporting of end-to-end service performance. They will be maintained as Configuration Items (CIs) and recorded in the CMDB. Changes to them will be subject to Change Management procedures.

- A Service Level Manager should monitor service delivery against established Service Level Objectives and report against them on a monthly basis. The Service Level Manager should attempt to mitigate the adverse impacts of service breaches.

- There should be methods to escalate issues that come within an established threshold (e.g., 80%) of breaching a negotiated service objective as set out in an SLO.

- Software monitoring tools and agents designed to provide metrics on performance should be an explicit consideration for new software introduced into the production environment. Consideration will be given to developing "synthetic transactions" for applications not having management capabilities.

- Regular reviews of the effectiveness of Service Level Management will be conducted.

- SLAs with corporate customers should be owned by account representatives, who act as the primary customer point of contact for negotiation and issue management.

- There should be provision to log and classify service complaints, which should be treated as Incidents with Category="Service Complaint". Service complaints should be automatically escalated to the account representative responsible for the complainant's organization. Complaints will be monitored and reported.

- All agreements (SLA, OLA, UC) and catalogues should be easily available to employees through an intranet site.

- Ongoing performance, as expressed in Service Level Objectives (SLOs) and Operational Level Objectives (OLOs), should be continually monitored and regularly reported.

- Deploying multiple agents with duplicate functionality (devised to provide service level management information) should be avoided wherever possible. This can be achieved by careful coordination across management disciplines such as network management, database administration and systems management. Each management area should be responsible for controlling agent deployment within its area. Policies and procedures will be developed for deploying and managing distributed agents.
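The escalation policy above (escalate when performance comes within an established threshold, e.g. 80%, of a breach) can be sketched as follows, assuming the SLO is expressed as a maximum allowable downtime per period. The function name and the three status labels are hypothetical; the 80% default is the example threshold from the policy.

```python
from enum import Enum

class SloStatus(Enum):
    OK = "ok"
    ESCALATE = "escalate"   # within the warning threshold of a breach
    BREACH = "breach"

def slo_status(downtime_min, allowed_downtime_min, warning_fraction=0.8):
    """Classify how much of the SLO's allowed downtime has been consumed this period."""
    consumed = downtime_min / allowed_downtime_min
    if consumed > 1.0:
        return SloStatus.BREACH
    if consumed >= warning_fraction:
        return SloStatus.ESCALATE
    return SloStatus.OK

# A 99.5% availability SLO over a 43,200-minute period allows about 216 minutes of downtime.
allowed = 43_200 * (1 - 0.995)
for downtime in (100, 180, 230):
    print(downtime, slo_status(downtime, allowed).value)
# 100 ok
# 180 escalate
# 230 breach
```

Measuring consumption of an allowance, rather than the latest reading alone, lets escalation happen before the period's objective is actually lost.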


To be developed


| Service Level Management is the name given to the processes of planning, co-ordinating, drafting, agreeing, monitoring and reporting on SLAs, and the on-going review of service achievements to ensure that the required and cost-justifiable service quality is maintained and gradually improved. SLAs provide the basis for managing the relationship between the provider and the Customer.

IT Service Delivery, Section 4.1.4

|

Key elements in this definition are:

- An SLA is the primary document and the contractual agreement which outlines how, where, at what level and to whom IT services are to be provided to further business goals and objectives,

- How well the agreement is being fulfilled is determined through an ongoing process of performance reporting,

- The objective is to improve service provision over time

An Operational Level Agreement (OLA) is an agreement between the participants in the provision of a service. It details the characteristics of the service "hand-offs" between participants. While an IT service provider will have direct customers, represented by the corporate service personnel to whom it provides IT services, its primary customer base is the business lines that provide IT services to a client base (i.e., their customers). In this relationship, an SLA would define the provision of services between the business product IT provider and the end user of the product. The primary IT provider supplies specific corporate services to each LOB IT provider, and this relationship is defined by an OLA. These individual relationships can be defined in a standard OLA, with each LOB as a signatory.

The focused emphasis on performance reporting distinguishes SLM from other ITIL processes. It implies "quantitative management" of service provision, a level 4 activity as described by Capability Maturity Model Integration (CMMI). Most ITIL processes are described for implementation somewhere between level 2 (repeatable) and level 3 (defined). Fully implementing SLM practices therefore requires more mature supporting processes than other ITIL areas such as incident, change, problem and release management. Key to providing SLM to primary service providers are Operational Level Objectives (OLOs), which cite the agreed-upon levels of service that the IT Service Provider promises its LOB customers.

Given the difficulty of adopting level 4 maturity practices without supporting practices at the same level of maturity, a reasonable direction is a phased approach to SLM implementation. The strategy should be to internalize a mentality and organizational predisposition for developing and reporting on customer-centric performance metrics (see section on Service Culture), building momentum to continue the effort and to provide a predictable, acceptable service to the service's customers by:

- Establishing a basic service catalogue describing the services of the IT provider. The catalogue should describe what services are offered, their costs, how to access them and the obligations of both the provider and the customer. A service chain should be developed for the delivery of each service described in the Catalogue,

- Leveraging the Service Request (SR) process as the vehicle to market and order services,

- Establishing Operational Level Agreements amongst service delivery partners in service chains,

- Instituting measurement reporting for processes which have direct and easily collectable metrics (excluding difficult and/or expensive availability and capacity metrics),

- Preparing standard OLA and SLA templates,

- Marketing a model of SLM excellence by establishing a locally focused SLA with corporate service organizations.


Customers and Service Users

In the retail industry, customers are usually the end users or consumers of the product; the relationship between payment and consumption is direct. In most IT environments, however, this is not the case: the interests of end users are not always the same as those of the business managers who fund IT services, typically through a fixed budget, overhead allocation, direct chargeback, or some combination of these methods. Business managers are most interested in balancing the benefits of the IT services provided against their costs. They will often accept a less than optimal service experience in order to fund other programs (i.e., receiving incrementally better IT service is not worth the price).

| "IT departments don't really have customers; they have clients. The dictionary definition of customer is "one who purchases a commodity or service." People striving for customer satisfaction tend to think of a customer as someone who's involved in a transaction, someone standing in a checkout line making a discrete purchase.

But IT customers, even if they are paying ones, aren't involved in a short-term deal. They're involved in a long-term relationship with a group of highly skilled professionals. They're really clients, which the dictionary defines as "people who engage the professional advice or services of others." And the dynamics of a professional partnership are quite different from those of a commodity transaction. " Paul Glen, Satisfying IT Customers May Be a Bad Idea, June 28, 2006, HDI article

|

Hence, services are funded at "good enough" levels.

Or consider how Microsoft defines customers and users when outlining the Microsoft Solutions Framework (MSF).

| "the customer is the person or organization that commissions the project, provides funding, and who expects to get business value from the solution. Users are the people who interact with the solution in their work. For example, a team is building a corporate expense reporting system that allows employees to submit their expense reports using the company intranet. The users are the employees, while the customer is a member of management charged with establishing the new system."

MSF Process Model v. 3.1, White Paper, June 2002, p. 8, at MS Technet

|

All too often the rationale for funding at a particular level is not properly communicated to the organization or to end users. As a result, the consumers of the service, the end users, expect service perfection. They do not understand the performance constraints placed on the service provider by the business leaders making funding decisions. These leaders often fail to make the connection between funding and performance constraints, and end users come to perceive the internal provider as delivering low value rather than the agreed-upon service according to business requirements.


Classes of Service

| Why then do educated, savvy business professionals, well aware of the laws of economics and fully willing in their private lives to pay for the level of service they desire, expect that these principles do not exist for corporate IT services? For some reason, a myth has grown up in many companies that unlimited funds are available for IT and that people can always ask for "more" and "better" without having to pay for the additional costs.

Mark D. Lutchen, Managing IT as a Business, John Wiley & Sons, 2004, ISBN: 0-471-47104-6, p.134

|

By always trying to please everyone and minimize complaints, IT organizations are perhaps partly to blame for the above misconception. However, as Lutchen comments, "the days of the IT free ride are over." Simply put, most IT providers have learned the lessons of continually escalating user demands without compensating funding.

The table below illustrates how different drivers work together to increase the costs associated with service provision.

It is incumbent upon prudent IT leadership to make sure everyone in the company understands the impact of IT decisions on the total IT budget allocation. Typically, this is done by creating "classes" of use/service categories. These classes are usually driven by the complexity or volume of the service or use required, and are identified and periodically verified by identifying and revisiting service level requirements. There are three primary considerations when devising classes of service:

- The number of classes should be limited, since too many classes cause undue confusion and negate many of the gains achieved by offering service classes. Limiting the number facilitates standardization, cost efficiency and economies of scale.

- The speed at which the service is offered: how quickly can the service be delivered from conception to completion?

- The continuing availability of the service: how widely is the service to be available online, at what performance levels and to which customer groups?

Business units that can live with "standard service levels" at "normal speeds" (e.g., a mature business unit with well-developed processes and little change in its business model over time) may choose to pay the least per unit of volume or per head. Other business units, which may require customized or unique types of service and very fast delivery of these services at any time (e.g., a fast-growing business unit with a highly mobile workforce), may pay additional amounts per unit of volume or per head. For these latter business units, revenues must fully support their needs; otherwise, to cover their costs, they must find increased investment funds or obtain a corporate subsidy.

This approach allows business units and corporate departments to share basic, standard, commodity-type services at the same unit cost, the driver being either the number of users or the volume of use by class of use or service. Business units requiring higher levels of more specialized services and/or faster speed or greater availability can identify the premium services they want from the IT organization "by the pound", in increments above the standard services.
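The pricing model described above, a shared unit cost for standard commodity services plus premium increments for higher classes, can be sketched as a simple charge-back calculation. The class names, rates and head counts are hypothetical, and a per-user driver is assumed; a volume-of-use driver would work the same way.

```python
# Hypothetical annual per-user rates: a common standard rate plus premium
# increments for faster delivery or greater availability.
STANDARD_RATE = 1_200
PREMIUM_INCREMENTS = {"standard": 0, "enhanced": 300, "premium": 800}

def annual_charge(users_by_class):
    """Charge-back for one business unit: every user pays the standard rate,
    plus the premium increment for the class of service they receive."""
    return sum(
        users * (STANDARD_RATE + PREMIUM_INCREMENTS[service_class])
        for service_class, users in users_by_class.items()
    )

mature_unit = {"standard": 200}
growth_unit = {"standard": 50, "premium": 30}
print(annual_charge(mature_unit))   # 240000
print(annual_charge(growth_unit))   # 120000
```

Keeping the class table small mirrors the first consideration above: each extra class adds a rate to negotiate, publish in the Service Catalogue and track.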


Startup and Maintenance

Unlike many ITIL processes, which have a lifecycle measured in days or weeks, the service management lifecycle can be measured in years. The task of negotiating SLAs is so extensive that review and renegotiation may not be warranted even on an annual basis. Many IT organizations operate on a charge-back and break-even basis, where the costs of providing services, while apportioned annually according to formulae, are not subject to intense annual review and operational assessment.

This lifecycle is academic until the process is implemented well enough to complete an initial iteration. The tasks involved in implementing a sustainable process have often proven insurmountable for organizations, which then abandon SLM principles as too time-consuming, with unclear or unachievable benefits.

Service Level Management implementation should, therefore, be subject to Project Control with a Project Charter and detailed Work Breakdown Structure. That WBS should include tasks which:

- Appoint a Process Owner.

- Define a Service Catalogue initiative over several years of increasing comprehensiveness, encompassing participant obligations within defined service chains, financial costs, performance objectives, etc. The initial release should provide an organizational framework for service descriptions and a method of requesting access to a service.

- Define the service chains involved in the delivery of services. Once these are completed, each service relationship should be identified and made subject to performance objectives between the IT provider's Senior Leadership Team (acting on behalf of the next actor in the service chain) and the organizational divisions responsible for delivering activities in that service chain. These are collectively recorded in OLAs and, where the provider is external to the organization, in Underpinning Contracts (UCs, which contain stronger legal strictures).

- Establish an SLA-OLA architecture describing the network of agreements between the IT provider and its customer base which best reflects:

- the off-loading of material to a comprehensive Service Catalogue which would normally be contained in an SLA/OLA,

- the removal of redundancy in service and objective reporting,

- the unique one-to-one service provision (i.e., provider to a single business line or a discrete number of business lines),

- Identify performance metrics, initially concentrating on workload and response time metrics that are easily obtainable from existing sources, and supplement these with survey measures of user satisfaction.

- Develop measures of availability and capacity and determine appropriate benchmarks based on similar industry usage.

- Develop strategies to implement monitoring tools that provide availability and capacity measurement on a regular basis, with the ultimate emphasis on end-to-end measurements.
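For the initial workload and response time metrics in the WBS, a periodic report might count transactions and compute a percentile response time against an objective. This sketch assumes the response times are already available from an existing source as a list of seconds and uses the nearest-rank percentile method; the 90th percentile and 2-second objective are hypothetical examples.

```python
import math

def nearest_rank_percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of values at or below it."""
    if not values:
        raise ValueError("no samples in reporting period")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def response_time_report(samples_s, pct=90, objective_s=2.0):
    """Summarize workload and response time for one reporting period."""
    p = nearest_rank_percentile(samples_s, pct)
    return {
        "workload": len(samples_s),
        f"p{pct}_seconds": p,
        "objective_met": p <= objective_s,
    }

samples = [0.8, 1.1, 0.9, 1.4, 2.6, 1.0, 1.2, 1.9, 0.7, 1.3]
print(response_time_report(samples))
# {'workload': 10, 'p90_seconds': 1.9, 'objective_met': True}
```

A percentile is used rather than an average so that a few very slow transactions (the 2.6 s sample here) are neither hidden nor allowed to dominate the figure reported to the customer.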


Agreement Architecture

The instruments for enforcing Service Level Management within the organization are a series of agreements between IT and its direct and indirect Customers that define the parameters of system capacity, network performance and overall response time required to meet business objectives. These agreements specify processes for measuring and reporting the quality of service provided by IT, and describe the compensation due to the client if the IT Service Provider misses the mark.
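The compensation described above is often expressed as tiered service credits. As a hypothetical sketch (the tiers and percentages are invented for illustration, not drawn from any standard agreement), a credit can be looked up from the measured availability for the period:

```python
# Hypothetical credit tiers: (availability floor %, credit as % of monthly charge).
# Tiers are checked from the highest floor down.
CREDIT_TIERS = [(99.5, 0), (99.0, 5), (98.0, 10)]
MAX_CREDIT_PCT = 25   # applied when availability falls below every floor

def service_credit(monthly_charge, availability_pct):
    """Return the credit owed to the customer for one period."""
    for floor, credit_pct in CREDIT_TIERS:
        if availability_pct >= floor:
            return monthly_charge * credit_pct / 100
    return monthly_charge * MAX_CREDIT_PCT / 100

print(service_credit(10_000, 99.7))   # 0.0
print(service_credit(10_000, 99.2))   # 500.0
print(service_credit(10_000, 97.0))   # 2500.0
```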

| "An IT organization that does not have Service Level Agreements in place sends a strong message to its customer community that it is able to provide any type of service, unconditionally, under any terms that the customer demands, at any time of the day ….. The most positive thing an IT service provider can tell his customers is: I understand your business needs and your business impact and these are the terms by which I shall do my job, to ensure that you can do yours.

Char LaBounty, Managing Partner, Renaissance Partners

|

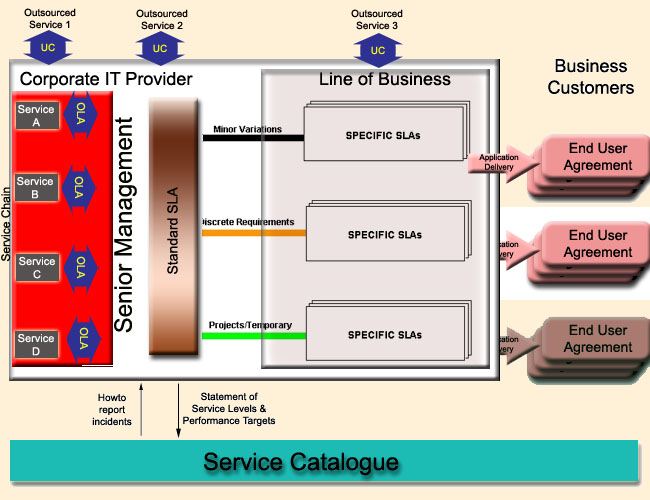

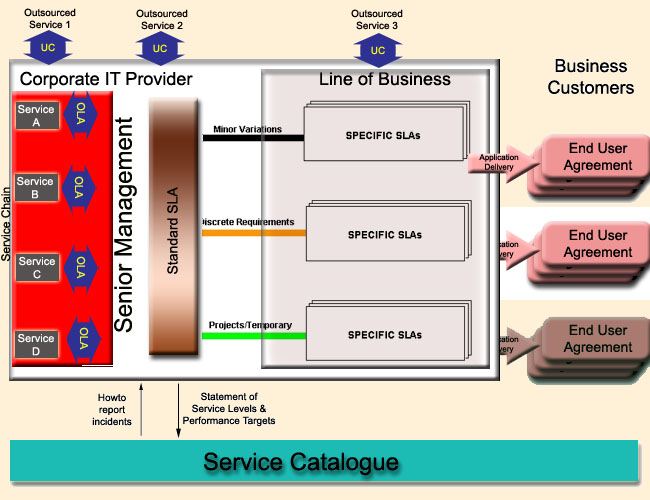

Many organizations will have only a few SLAs. These are most likely product-focused, each outlining the delivery of a specific application to a specific Customer base. Because each application has a unique set of customers, this strategy often results in undue duplication among the SLAs in use. The SLAs are administered by Line of Business technology providers. These providers deliver the technology services for which the application is the major supported feature. A typical agreement architecture might look like the one presented in the diagram.

The diagram highlights the relationships between the parties and the agreement used to describe obligations and performance expectations in each relationship. It rests on the following organizational assumptions:

- this is a business-centric SLA architecture

- lines of business maintain control over business-critical applications that rely upon centralized IT services (e.g. network, support desk). If all application support is centralized within a Corporate IT Division, then the End User Agreement might be between the Corporate IT Division and the End User.

- lines of business have centralized and/or decentralized business operations which utilize centralized IT services

- some services are outsourced. Outsourced services may be administered by the Corporate IT Division or by a line of business

Organizations should develop an agreement architecture as a preliminary framework for developing SLAs, and SLM in general. The agreements involved are:

| Agreement | Between | Content |
|---|---|---|
| Service Catalogue | Corporate IT service providers, describing their service offering | Types of service per cost, performance expectations, obligations of participants (including the customer) |
| SLA (Service Level Agreement) | Corporate IT provider and line(s) of business | One standard SLA itemizing standard services, plus specialized SLAs describing specific agreements or deviations from the standard agreement. References the Service Catalogue and itemizes deviations from standard offerings. Contains signatures, length of agreement, and how to amend the agreement. |
| OLA (Operational Level Agreement) | IT Corporate Services and Corporate Senior Management | Obligations and performance expectations required to meet SLA and Service Catalogue service descriptions |
| UC (Underpinning Contract) | IT Corporate Services or a line of business, and an external vendor providing service(s) | Obligations and performance expectations described in exacting legal terminology, including penalty descriptions for breaches of performance |
| EUA (End User Agreement) | Users of specific applications and the line of business (possibly Corporate IT Services) responsible for delivering the application | Disclaimers, tool and offering restrictions, minimum access requirements, security obligations, etc. |

The ultimate expression of a service is the SLA between the LOB product provider and the Customer of the business's product. A major enabler of this relationship is the OLA between the primary corporate IT service provider and the LOB IT provider (the primary signatory of the SLA). This OLA is a composite of all the service commitments the corporate IT provider makes to the LOB IT provider. These obligations are, in turn, expressed in OLAs between the corporate departments offering services and the corporate IT provider's senior leadership. The senior leadership acts as the focal point for the expression of commitments.

Services are expressions of benefits bundled in a way that best satisfies Customer wishes. This bundling happens many times and in different ways. An IT service provider department is a representation of this multiple bundling. The way the organization is set up should make the multiple delivery of these services efficient and effective. The setup will most often follow technology product lines or workforce specializations. There is seldom an exact match between an organization and the services it delivers.

Each service will have one or more service objectives that establish targets to be met. When a defined service involves multiple departments, there are "hand-offs" between the technologies and/or specializations involved. Each hand-off creates a commitment by one department to another to perform its obligations in the total service offering efficiently. Those commitments are expressed in Operational Level Agreements.

| OLAs need to serve as a benchmark any time Service Level Agreements need to flex to meet business requirements. If a specific service is required faster or differently by a business unit, the OLAs will show exactly which groups need to be consulted, and which services provided by those groups will ultimately affect the delivery of the desired service. If the providing group can agree to change how their service is delivered, then the SLA can be changed, and the OLA will be altered accordingly.

Implementing Service Level Management, White Paper, Char LaBounty

|

There are two possible starting points for initial attention: SLA development or OLA/UC development. In mature organizations, customer requirements dictate the levels of performance required. Service Level Objectives (SLOs) are the operational expression of these requirements. They provide high-level guidelines for how providers in the service chain should perform in order to meet the SLOs consistently.

However, many organizations implementing SLM will already have some characterization of their services, even if performance expectations are not well formulated. Service execution is then determined by the performance of the various participants in the service chain. Codifying these characteristics is a useful organizational exercise. It is also a more achievable one, because it avoids (for the time being) the more difficult task of making performance responsive to end-customer specifications. In addition, establishing workable service objectives based on current practice, and reporting on them, demonstrates the value and integrity of the process. This happens before more serious, time-consuming and contentious negotiations are undertaken.

| Some organizations believe they can implement customer Service Level Agreements without first having established their own internal IS support Service Level Agreements. This can be uncomfortable for all participants if the entire IS organization is not closely aligned prior to beginning the process with your customers. In fact, it can be down right embarrassing!

Implementing Service Level Management, White Paper, Char LaBounty

|

Establishing OLAs for the many combinations of hand-offs that occur in service provisioning would be excessively complicated to administer on an ongoing basis. Instead, the relationships are accounted for in a single OLA between each unit and IS management. This simplifies the retention of these agreements. It also makes them easier to compare with the agreements that describe the relationship with LOB technology partners and Corporate service customers.

Using the catalogue as an aid, SLM must plan the most appropriate SLA-OLA structure to ensure that all services and all Customers are covered in the manner best suited to the organization's needs. The recommended architecture establishes:

- A single Corporate SLA itemizing all services as detailed in the service catalogue for all direct Corporate Customers,

- A single corporate OLA between the primary IT service provider and the individual LOB IT providers that deliver services directly to the organization's customers,

- Specific SLAs and OLAs with customers for unique services provided,

- Specific OLAs between corporate IS senior leadership and departments for the composite provisioning of services described in the Service Catalogue,

- Individual services described in service descriptions maintained in a Service Catalogue,

- SLAs and OLAs maintained as Configuration Items in the CMDB and subject to Change Management.
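Because agreements are held as CIs, the coverage goal of this architecture can be checked mechanically: every catalogued service and every Customer should be covered by some agreement. The following Python sketch is illustrative only. The `Agreement` record, its fields and the sample data are hypothetical and are not taken from any particular CMDB or ITSM tool.

```python
from dataclasses import dataclass


@dataclass
class Agreement:
    """Hypothetical record for an SLA, OLA or UC held as a CI in the CMDB."""
    agreement_id: str
    kind: str              # "SLA", "OLA" or "UC"
    parties: set[str]      # customers (for an SLA) or internal units (for an OLA)
    services: set[str]     # catalogue services the agreement covers
    signed: bool = True


def coverage_gaps(catalogue: set[str], customers: set[str],
                  agreements: list[Agreement]) -> tuple[set[str], set[str]]:
    """Return (services with no signed SLA, customers with no signed SLA)."""
    slas = [a for a in agreements if a.kind == "SLA" and a.signed]
    covered_services = set().union(*(a.services for a in slas))
    covered_customers = set().union(*(a.parties for a in slas))
    return catalogue - covered_services, customers - covered_customers


# Illustrative data only.
catalogue = {"e-mail", "network access", "payroll application"}
customers = {"Finance", "HR", "Sales"}
agreements = [
    Agreement("SLA-CORP", "SLA", {"Finance", "HR"}, {"e-mail", "network access"}),
    Agreement("OLA-NET", "OLA", {"Network Services"}, {"network access"}),
]

missing_services, missing_customers = coverage_gaps(catalogue, customers, agreements)
print(missing_services, missing_customers)
```

A real check would also need to allow for customers who legitimately do not consume every service. This sketch only flags services and customers that no signed SLA mentions at all.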


IT Service Catalogue

| “Service catalogs are the cornerstone of service delivery and automation, and the starting point for any company interested in saving money and improving relationships with the business.”

Forrester Research

|

| "The availability of a ‘Service Catalog’ describing the current and proposed IT service offerings is vital to the success of an IT Service Management strategy targeting service excellence. In the same way a restaurant menu sets initial expectations for a customer and provides the basis for personalized service, the Service Catalogue enables IT organizations to market and commit to achievable levels of service at a predictable cost (or planned price). A well designed and suitably worded catalogue will dramatically improve the business perception of the value of IT services and is a mandatory element for enterprises seeking the alignment of business requirements and IT service capabilities."

|

Or, this commentary from Centrata...

| ... "a service catalogue becomes a centerpiece within IT, through which business commitments, customer demands, infrastructure performance and operational workflow all come together as a central point of real-time action, as well as historical and business planning. Such a service catalogue would also promote standardization of services and service components, for greater efficiency and flexibility, as well as reducing human error and minimizing ad hoc requests.

The benefits of such a robust, if demanding, service catalog approach are manifold. A service catalogue in this evolved sense becomes the core of the IT intelligence and “self awareness” as it coordinates provisioning, delivery, assurance, accountability and optimization of the infrastructure for a changing array of business services. It can enable significant benefits in terms of operational savings, infrastructure optimization, asset investments based on use and performance, SLA costs and requirements for outsourced services, and of course promote significantly more positive customer satisfaction, and ultimately, business impact and revenue growth. The service catalogue can also help to manage user behavior, when, for instance, individual idiosyncracies are impacting business performance, as well as validate and document business value."

THE SERVICE CATALOG: AN ESSENTIAL BUILDING BLOCK FOR ITIL

A White Paper Prepared for Centrata, December 2004, p. 4

|

A service is: 'One or more IT systems which enable a business process'.

The purpose of the Service Catalogue is to:

- Highlight the services offered and managed by the IT service provider - a marketing endeavour intended to demonstrate the breadth and scope of the provider's operations,

- Provide customers with information on how to access an IT service, its costs and the choices available - an element of good customer service,

- Provide a powerful vehicle to promote better alignment between IT services and the goals and objectives of the organization.

| "By mapping IT services more explicitly to business needs, the IT organization can better understand how to add even more value. This aspect of the Service Catalog helps address three of the most emotional words in the IT vocabulary; 'IT-Business Alignment'." Rodrigo Fernando Flores, September 12, 2005, IT Service Catalog - Common Pitfalls, ITSMWatch

|

To avoid confusion, it may be a good idea to define a hierarchy of services within the Service Catalogue by qualifying exactly what type of service is meant. An example is business services (those seen by the Customer) versus infrastructure, network and application services. These are all invisible to the Customer but essential to the delivery of Customer services.

The catalogue is maintained as part of the Configuration Management Database (CMDB). By defining each service as a Configuration Item (CI) and, where appropriate, relating these to form a service hierarchy, the IT provider will be able to relate events such as Incidents and RfCs to the services affected, thus providing the basis for service monitoring via an integrated tool (e.g. 'list or give the number of Incidents affecting this particular service').
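The roll-up from component CIs to the business service they support can be sketched as a simple dependency walk. In this minimal Python illustration, the CI names, the `DEPENDS_ON` map and the incident records are all hypothetical; a real CMDB would supply the relationships.

```python
# Hypothetical CI hierarchy: each CI maps to the CIs it directly depends on.
DEPENDS_ON = {
    "Order Entry (business service)": ["Order App", "Corporate Network"],
    "Order App": ["Order DB", "App Server 1"],
    "Order DB": [],
    "App Server 1": [],
    "Corporate Network": [],
}


def components_of(service, graph):
    """Return the service CI plus every CI it depends on, directly or indirectly."""
    seen = set()
    stack = [service]
    while stack:
        ci = stack.pop()
        if ci not in seen:
            seen.add(ci)
            stack.extend(graph.get(ci, []))
    return seen


def incidents_affecting(service, incidents, graph):
    """Incidents logged against the service itself or any CI beneath it."""
    scope = components_of(service, graph)
    return [i for i in incidents if i["ci"] in scope]


# Hypothetical incident records, each logged against a single CI.
incidents = [
    {"id": "INC-1", "ci": "Order DB"},
    {"id": "INC-2", "ci": "Payroll App"},
    {"id": "INC-3", "ci": "Corporate Network"},
]
affected = incidents_affecting("Order Entry (business service)", incidents, DEPENDS_ON)
print(len(affected), [i["id"] for i in affected])  # 2 ['INC-1', 'INC-3']
```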

The Service Catalogue can also be used for other Service Management purposes. Examples include performing a Business Impact Analysis (BIA) as part of IT Service Continuity Planning, or serving as a starting place for Workload Management, part of Capacity Management. The cost and effort of producing the catalogue are therefore easily justified. If the catalogue is produced in conjunction with the BIA's prioritization of services, the most important services can be covered first.

At its most basic, a service catalogue is a simple concept. Webster defines a catalogue as "a complete enumeration of items arranged systematically with descriptive details". There are three key elements in this definition:

- Complete - implies that the description of IT services should be exhaustive. However, it needs to be complete only with reference to a stated purpose, expressed in terms of overall IT Service Management,

- Systematic - "formulated as a coherent body of ideas or principles", the depiction of services should be based upon a consistent logic to achieve understanding of organizational goals and objectives,

- Descriptive details - the background material on the service, presented to sell or encourage usage by a Customer base.

A Service Catalogue should have easily understandable descriptions and an intuitive interface for browsing service offerings. Effective Service Catalogues should also segment the customers they serve (whether end users, business unit executives, or other IT managers) and may provide different content based on role and needs.

The Service Catalogue should be transaction-based. A consumer viewing it should be able to assume that anything they require in the catalogue can be ordered directly. This direct relationship expedites processing and engages customers' attention and, ultimately, their use of the catalogue.
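The role-based segmentation and transaction-based ordering described above might be modelled as follows. This is a hypothetical sketch. `CatalogueEntry`, the role names and the request record format are assumptions made for illustration, not features of any particular catalogue product.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class CatalogueEntry:
    name: str
    description: str
    audiences: frozenset     # roles that should see this entry (hypothetical)
    orderable: bool = True


_request_ids = count(1)      # simple in-memory request numbering for the sketch


def view_for(role, catalogue):
    """Segment the catalogue: return only the entries relevant to this role."""
    return [e for e in catalogue if role in e.audiences]


def order(role, entry_name, catalogue):
    """Place a request directly from the role's view of the catalogue."""
    visible = {e.name: e for e in view_for(role, catalogue)}
    entry = visible.get(entry_name)
    if entry is None or not entry.orderable:
        raise ValueError(f"{entry_name!r} cannot be ordered by role {role!r}")
    return {"request_id": next(_request_ids), "service": entry.name, "requested_by": role}


CATALOGUE = [
    CatalogueEntry("New laptop", "Standard laptop with corporate image", frozenset({"end user"})),
    CatalogueEntry("Departmental SLA review", "Annual review of service levels",
                   frozenset({"business unit executive"})),
    CatalogueEntry("Server provisioning", "Virtual server in the data centre",
                   frozenset({"IT manager"})),
]

print([e.name for e in view_for("end user", CATALOGUE)])  # ['New laptop']
req = order("end user", "New laptop", CATALOGUE)
print(req)  # {'request_id': 1, 'service': 'New laptop', 'requested_by': 'end user'}
```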

Lastly, a Service Catalogue can provide a system of record for data that you need to manage the IT organization. The Service Catalogue can provide the means to manage customer demand, map fulfillment processes for each service, track service levels, drive process efficiencies, govern vendor performance, and determine costs. No service-oriented business can run effectively without such operational and financial data readily and easily available.



Metrics

| "not everything that can be counted counts and not everything that counts can be counted."

Albert Einstein, quoted in Service Level Management for Enterprise Networks, Lundy Lewis, Artech House, 1999, ISBN: 1-58053-016-8, p.13

|

| IT metrics must measure and communicate IT performance in the context of the business value IT provides.

Mark D. Lutcheon, Managing IT as a Business, John Wiley & Sons, 2004, ISBN: 0-471-47106-6, p. 157

|

The Purpose of Measurement

According to Lutcheon, to "measure and communicate IT performance in the context of the business value IT provides", the organization must answer three primary questions:

- How is IT spending its assigned appropriation?

- Is the company receiving agreed-on value for this appropriation?

- Is IT assisting in meeting strategic and tactical business goals and objectives?

Because many parts of the organization are involved in answering these questions, each requires its own set of metrics. Lutcheon identifies different sets of users:

| IT Value Area | Components | Example Metric |
|---|---|---|
| Alignment | Governance and Leadership; Business Management Liaison/SLAs; Performance Measurement/Analysis/Reporting | Attributable IT spending per direct employee (budget & actual) |
| Support | Sourcing Management and Legal Contracts and Issues; Organization and People Skills; Marketing and Communications; Finance and Budgeting | Growth rate of total IT employees per growth rate of total employees (budget & actual) |
| Operations | Service Delivery (Operations and Initiatives/Infrastructure); Enterprise Core System (Applications) | Growth rate of attributable IT spending per growth rate of direct total spending (budget & actual) |
| Resiliency | Security/Confidentiality/Privacy; Data Management and Quality; Business Continuity and Disaster Recovery | |
| Leverage | User Technology Competencies and Skills | |
| Futures | | |

In the original table, metrics are further classified by application and use: Enterprise, Business Unit Specific or Functional Area Specific.

Mark D. Lutcheon, Managing IT as a Business, John Wiley & Sons, 2004, ISBN: 0-471-47106-6, p. 162

Understanding how IT provides business value requires categorizing IT investments. For each investment category, Lutcheon outlines the business value considerations and typical business value outcomes, and presents key metrics:

| IT Investment Category | Value Characteristics | Value Outcomes | Value Metrics |
|---|---|---|---|
| Mission Critical: technology investment to gain competitive advantage or to position the organization in the marketplace to increase market share or sales | competitive advantage; competitive necessity; market positioning; innovative services; increased sales | 50% fail; some spectacular successes; 2 to 3 yr lead time; higher revenue/employee | Business value (financial): revenue growth; ROI; ROA; revenue/employee |
| Business Critical: technology investment for managing and controlling the organization at the business unit level | better information; better integration; improved quality; increased sales | shorter time to market; superior quality; premium pricing; improved control | Business unit operational: time (new product to market); sales (new products); production/service quality |
| Management Control: technology investment to process the basic repetitive transactions of the company, focusing on high-volume transactions and cost reduction | increased throughput; cost reduction | 25% to 40% return; higher ROI/ROA; lower risk; improved control | Business unit IT application: time (application implementation); cost (application implementation) |
| Infrastructure: technology investment to construct foundation IT capability | standardization; flexibility; cost reduction | utility-type reliability; supports and facilitates change; creates compatibility | Enterprise-wide IT infrastructure: infrastructure availability; cost per transaction; cost per user |

Service Level Metrics

Services, as described and negotiated in Service Level Agreements, generally fall within Lutcheon's "infrastructure" category. Service Level metrics measure the quality of services provided to customers and are essential to management's understanding and control of services. At the highest level (i.e. end-to-end service measurement) they are focused on the customer. They are described in SLAs and expressed in terms that a non-technical customer would understand. They MUST measure things that are important to the Customer. They should have the following characteristics:

- Conceptually simple - i.e. clearly defined and easily understood,

- Measurable - obtainable without excessive cost or effort,

- Valuable - providing the basis for decision-making and action, including ROI analysis,

- Leveraging organizational economies - i.e. encouraging functional behaviors,

- Consistent - anyone performing the measurement and reporting must approach it in the same way,

- Agreed to by all parties.

Infrastructure availability, for example, is measured at the User's screen, not at some intermediate component. The challenge lies in integrating all intermediate components into the end-to-end metric. The result, in all cases, is that much higher availability is required from each subcomponent. This is because the availabilities of components in series multiply to give the overall measure.
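Assume the components sit in series and fail independently (a simplification). The end-to-end availability is then the product of the component availabilities. To deliver an end-to-end target A, each of n equally available components must reach A^(1/n). The Python sketch below uses illustrative figures only.

```python
import math


def end_to_end(availabilities):
    """Availability of components in series: every component must be up."""
    return math.prod(availabilities)


def required_per_component(target, n):
    """Availability each of n identical series components needs to meet the target."""
    return target ** (1 / n)


# Illustrative chain: desktop, LAN, WAN, application server, database at 99.5% each.
chain = [0.995] * 5
print(f"{end_to_end(chain):.4%}")                 # 97.5249%
print(f"{required_per_component(0.995, 5):.4%}")  # 99.8998%
```

Five components that each look healthy at 99.5% deliver only about 97.5% end to end. To offer 99.5% at the User's screen, each component must reach roughly 99.9%.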

| "Its here that service providers and customers often find themselves with entirely different motivations in service performance measurement. The service provider wants to measure the quality of the network itself. Normally this would relate the measurement of a transit path, commencing when a packet enters the provider's network, and taking the measurement outcome as the packet leaves the provider's network. The customer, on the other hand has less of an interest in the performance of the network, and more of an interest in the performance of the application itself, spanning the entire path from the client to the server and back again. In the context of the Internet, such paths may transit a number of provider's networks, and its the cumulative picture rather than the profile of any individual network that is of interest to the customer. The service provider also measures different aspects of performance. As we've noted already, the service provider is interested in the per packet transit latency and the stability of the latency readings, the packet drop probability and the jitter profile. The end user has a somewhat different, and perhaps more fundamental set of interests: Will this voice over IP call have acceptable quality? How long should this download take?"

Just How Good are You? Measuring Network Performance

|

Meeting this overall requirement requires stating targets for, and ensuring the operational readiness of, the individual components in the service chain. These targets are stated as Operational Level Objectives (OLOs) among internal service partners. When the service is provided by an external provider (e.g. an ISP or ASP), they are stated in Underpinning Contracts (UCs).

What Gets Measured

There are four broad parameters of primary interest to Customers which are used in evaluating service levels:

- Availability - percentage of time service is available for use

- Performance - the rate (or speed) at which work is performed

- Reliability - how often a service, device or network goes down, or how long it stays down

- Recoverability - the time required to restore the service following a failure
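As a minimal sketch, availability, reliability and recoverability can all be derived from an outage log for a reporting period. The Python below assumes a hypothetical log of outage durations in minutes, measured within agreed service hours. It uses one common convention for mean time between failures: mean uptime per failure. Performance would be measured separately from response-time data.

```python
def service_stats(agreed_minutes: float, outage_minutes: list[float]) -> dict:
    """Summarise one reporting period against the agreed service time."""
    downtime = sum(outage_minutes)
    uptime = agreed_minutes - downtime
    failures = len(outage_minutes)
    return {
        # Availability: share of agreed service time the service was usable
        "availability_pct": 100 * uptime / agreed_minutes,
        # Reliability: number of failures and mean uptime between them (MTBF)
        "failures": failures,
        "mtbf_min": uptime / failures if failures else float("inf"),
        # Recoverability: mean time to restore service after a failure
        "mtrs_min": downtime / failures if failures else 0.0,
    }


# Hypothetical month: 30 days x 12 agreed service hours, with two outages.
stats = service_stats(agreed_minutes=30 * 12 * 60, outage_minutes=[90, 30])
print(stats)
```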

Other measures that support the IT Provider in obtaining the resources to deliver services include workload volumes, Service Desk responsiveness, implementation times for configuration changes and new services, and overall customer satisfaction.

A recent study by EMA advances a slightly different view of performance, proposing Quality of Experience (QoE) as a key concept in measurement: "Quality of Experience is the ultimate “quality” metric because it is directed at real customer/consumer experience versus an array of technical objectives that may be measurable, but which as an aggregate may often have little or nothing to do with actual business impact".

EMA has defined the following key areas as useful in establishing QoE:

- Service availability – Availability needs to be understood first in terms of what it means to the end user experience, and then in terms of the infrastructure interdependencies that impact the availability of that service.

- Service responsiveness – Response time is the primary factor in the end user’s experience of interacting with an IT service. EMA research indicates that persistent degradation in responsiveness can be more disruptive to end-user experience than occasional or intermittent failures. This is because most users can work around short downtime. They are also more confident that availability failures will be fixed than that performance degradation will be corrected, or even noticed.

- Service consistency – This is the consistency with which a service is available and responsive to the end customer. Consistency can be far more important than absolute averages that depend on huge swings in value. This is because most people work, and perform communications-related and other application-related tasks, in expected rhythms. When those rhythms are disrupted or unstable, the negative impact can be greater than when average delay times are slightly higher than ideal. For businesses with customer-facing applications, erratic responsiveness can be especially damaging to customer loyalty and even brand position.

- Service appropriateness – IT should proactively determine, rather than take at face value, that the services it is delivering fit the customers they’re being delivered to. Customer experience depends on delivering the right kinds of services at the right levels of quality to the right service consumers.

- Flexibility and mobility – Customer empowerment can increase satisfaction levels. These criteria combine two notions. The first is flexibility in choosing types of application services and selecting from different quality levels. The second is mobility options for accessing information remotely, whether at home, in the office or while traveling.

- Security and compliance – These criteria are becoming increasingly visible requirements. More specifically, they can include setting guaranteed levels of data security for certain information, as well as enforcing and auditing appropriate end-user access to critical IT services. They can also include metrics covering end-user privacy and security in exchanging information.

- Cost-effectiveness – Expense control is always important to customers, whether they’re business customers or individual consumers.
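The point about consistency can be illustrated with two sets of response times that share the same mean. The samples below are invented for illustration. The sketch reports the population standard deviation and a nearest-rank 95th percentile, which expose the difference that the average hides.

```python
import math
import statistics


def summarise(samples_ms: list[float]) -> dict:
    """Mean, population standard deviation and nearest-rank 95th percentile."""
    ordered = sorted(samples_ms)
    rank = math.ceil(0.95 * len(ordered))   # nearest-rank method, 1-based
    return {
        "mean": statistics.mean(samples_ms),
        "stdev": statistics.pstdev(samples_ms),
        "p95": ordered[rank - 1],
    }


# Hypothetical response times (ms) for the same transaction on two services.
steady = [900, 1000, 1100, 1000, 950, 1050, 1000, 1000, 980, 1020]
erratic = [300, 300, 300, 300, 3000, 300, 300, 3000, 300, 1900]

for label, samples in (("steady", steady), ("erratic", erratic)):
    s = summarise(samples)
    print(label, s["mean"], round(s["stdev"]), s["p95"])
```

Both services average one second. However, the erratic one regularly makes users wait three seconds, which is the kind of disrupted rhythm EMA describes.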

How it Gets Collected

There are five primary methods by which service metric data gets captured:

- Monitoring all components used by application transactions and aggregating them to devise overall availability and performance measures,

- Inspecting network traffic to identify application transactions, which are then tracked to completion and measured for propagation delay,

- Using client agents that decode conversations to identify application transactions and measure client-perceived availability and responsiveness,

- Instrumenting the application code to define application transactions and collecting information on completed transactions and response times,

- Generating synthetic transactions at regular intervals and collecting availability and performance measures by tracking them.

Applications whose recorded response times exceed a pre-defined threshold are considered unavailable. A combination of the above methods usually provides the best overall result, though considerations of cost, reliability and intrusiveness may preclude some approaches.
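The threshold rule can be applied directly to synthetic-transaction results. In this hypothetical Python sketch, each probe represents an equal slice of time and a failed probe is recorded as `None`. The 5-second threshold is an assumed value that would in practice be set in the SLA.

```python
from typing import Optional


def availability_from_samples(response_times_s: list[Optional[float]],
                              threshold_s: float) -> float:
    """Percentage of probes that completed within the threshold.

    A probe that failed outright is recorded as None; a probe slower than the
    threshold is also treated as 'unavailable'.
    """
    if not response_times_s:
        raise ValueError("no samples")
    ok = sum(1 for t in response_times_s if t is not None and t <= threshold_s)
    return 100 * ok / len(response_times_s)


# One probe every 5 minutes for an hour (12 samples); hypothetical figures.
probes = [1.2, 1.1, 1.3, None, 1.2, 6.5, 1.4, 1.2, 1.1, 1.3, 1.2, 1.2]
print(f"{availability_from_samples(probes, threshold_s=5.0):.1f}%")  # 83.3%
```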

These collection techniques fall into one of two primary categories:

- Event-driven Measurement - the times at which certain events happen are recorded, and the desired statistics are then computed by analyzing the data,

- Sampling-based Measurement - taking a scheduled, periodic look at certain counters or information access points
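The difference between the two categories can be sketched as follows. The event format (timestamp in milliseconds, transaction id, `"start"` or `"end"`) and the cumulative counter readings are hypothetical.

```python
def event_driven_durations(events):
    """Event-driven: pair 'start'/'end' events by transaction id and compute durations."""
    starts, durations = {}, {}
    for timestamp_ms, txn_id, kind in events:
        if kind == "start":
            starts[txn_id] = timestamp_ms
        elif kind == "end" and txn_id in starts:
            durations[txn_id] = timestamp_ms - starts.pop(txn_id)
    return durations


def sampled_rates(counter_readings):
    """Sampling-based: scheduled reads of a cumulative counter, turned into per-second rates."""
    return [
        (c1 - c0) / (t1 - t0)
        for (t0, c0), (t1, c1) in zip(counter_readings, counter_readings[1:])
    ]


events = [(0, "T1", "start"), (400, "T2", "start"), (1100, "T1", "end"), (2400, "T2", "end")]
print(event_driven_durations(events))   # {'T1': 1100, 'T2': 2000}

# (seconds since start, cumulative transactions completed), read once a minute
readings = [(0, 1000), (60, 1600), (120, 1900)]
print(sampled_rates(readings))          # [10.0, 5.0]
```

The event-driven version sees every transaction but must record every event. The sampling version records far less data but only sees what happened between reads.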

Who Uses it

| The process of acquiring meaningful performance data is broken. We can report on "five nines" for all of our network components; we can dump database extracts into Excel and figure out what percentage of our calls related to a specific incident; we can dig into our ERP system and calculate transaction times. What we can't seem to do, however, is to pull these numbers together into business indicators that show whether or not we're successful: not successful in keeping all of our servers online, or successful in closing all of our open calls, but successful in terms of our company's vision and business objectives.

Char LaBounty, The Art of Service Management, p.5

|

Availability, measured as the percentage of time that a service is available for use, can be a controversial subject because it can involve a number of different measurement mechanisms. The variability of measurement results from differing perspectives on service goals, which vary primarily with the job function of the individual doing the measuring. For example, the network manager typically sees the service as network connectivity; the system manager views the service as the server remaining operational; the database administrator sees the service as access to the data held in the database. Hence, quoted availability usually relates to individual components and does not match the IT user's perception of availability. The end user or LOB wants to know that they can access the application and data required to perform their duties.

The first step in measuring and managing service levels is to define each service and map it out from end to end. A service can be decomposed into the following layers:

1. Operating system service on hardware, presuming hardware availability. Most platform vendors that claim 99.9 percent uptime are referring to this.

2. End-to-end database service, presuming operating system and hardware availability.

3. Application service availability, including DBMS, operating system and hardware availability.

4. Session availability, including all lower-level layers.

5. Application server divorced from the database. In this scenario, the business logic and connectivity to a data store are measured (and managed) independently of the database component. Note that a combination of (2) and (5) is essentially the same as service (4) to the user/client.

6. A complete, end-to-end measure, including the client and the network. While the notion of a service implies the network, it is included in this diagram to show that you can establish the measure of availability for the stack as a whole with or without the network. For Internet-based applications, separating out the network is important, because service providers can rarely, if ever, definitively establish and sustain service levels across the public network. Moreover, when a user connects across the Internet, it is important to understand how much of the user experience is colored by the vagaries of the Internet and how much is under the direct control of operational staff.

Decomposition into services is the first step toward defining what availability is measured, and why. As will be seen, indicating end-user availability over time does not require every service component to be measured and tracked separately.

There are three primary methods for capturing type 6, the complete end-to-end measurement:

- Capture information at the User's desktop using client agents to decode conversations - this involves loading every client machine with a small agent that non-intrusively watches events such as keystrokes or network events. The agent then attempts to detect the start and end of the transaction and measure the time between them. The agent then sends the measured data to a central place where broader analysis can occur.

- Instrumenting an application (using APIs) to identify transactions with markers that can be monitored - the instrumentation APIs define the start and end of a business transaction and capture the total end-to-end response time as users process their transactions. Industry standards for these APIs, such as ARM (see Glossary), have yet to be solidified. This method is best employed when an application is undergoing a full revision and upgrade.

- Generating sample transactions that simulate the activities of the user community and that can be monitored - using scripts and intelligent agents or tools to capture transactions and later play them back against an application service, this approach allows a simulated response time to be measured. By using distributed server resources or placing dedicated workstations at desired locations to submit transactions for critical applications, a continuous sampling of response times by location can be captured and reported. The strength of this method is its ability to represent the end-user experience from samples rather than having to collect large volumes of data across all transactions from all end users.
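The third method can be sketched as a small probe that replays a scripted transaction, places start and end markers around each run, and summarizes the success rate and response time per location. This is a minimal sketch assuming each recorded script is wrapped as a Python callable that raises an exception when the transaction fails; the names `probe`, `summarize`, `sample_by_location` and `login_and_query` are hypothetical and do not correspond to ARM or to any commercial monitoring tool.

```python
import time
from statistics import mean
from typing import Callable, Dict, List, Optional, Tuple

# A "transaction" is any scripted user activity wrapped as a callable.
# Assumption: it raises an exception when the transaction fails.
Transaction = Callable[[], None]


def probe(transaction: Transaction, samples: int,
          interval_s: float = 0.0) -> List[Tuple[bool, float]]:
    """Replay a synthetic transaction and time each run (start/end markers)."""
    results: List[Tuple[bool, float]] = []
    for _ in range(samples):
        start = time.perf_counter()            # start-of-transaction marker
        try:
            transaction()
            ok = True
        except Exception:
            ok = False
        elapsed = time.perf_counter() - start  # end-of-transaction marker
        results.append((ok, elapsed))
        if interval_s:
            time.sleep(interval_s)             # spacing between samples
    return results


def summarize(results: List[Tuple[bool, float]]) -> Dict[str, Optional[float]]:
    """Availability = share of successful samples; timings use successes only."""
    times = [t for ok, t in results if ok]
    return {
        "availability_pct": 100.0 * len(times) / len(results),
        "avg_response_s": mean(times) if times else None,
        "max_response_s": max(times) if times else None,
    }


def sample_by_location(transactions: Dict[str, Transaction],
                       samples: int) -> Dict[str, Dict[str, Optional[float]]]:
    """Collect one summary per location.

    In practice each probe would run from a workstation at that site;
    here they simply run one after another in the same process.
    """
    return {loc: summarize(probe(tx, samples)) for loc, tx in transactions.items()}


if __name__ == "__main__":
    # Hypothetical stand-in for a recorded script, e.g. "log in and run a query".
    def login_and_query() -> None:
        time.sleep(0.02)

    print(sample_by_location({"Head office": login_and_query,
                              "Branch A": login_and_query}, samples=3))
```

Counting only successful samples in the timing figures keeps failed transactions from distorting response times; the failures are instead reflected in the availability percentage.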

How It Should Be Stated

Traditionally, availability is reported as the time a system or component was actually available divided by the time it was supposed to be available (excluding time set aside for maintenance operations and/or time the system is not required to be operational), expressed as a percentage. This statistic favors the IT Provider because it tends to downplay the effects of service outages. If the goal is 99.6% availability and the result is 99.0%, the Provider is only 0.6 percentage points off target, which translates to (99.6 - 99.0)/99.6 = about 0.60% variation from expected: on the surface, a good result.

Stating the goal as percent unavailability has a different impact. In this case the goal was 0.4% unavailability and the Provider delivered 1.0% unavailability, a variation of (1.0 - 0.4)/0.4 = 150% from expected. An entirely different perspective ensues.
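The contrast between the two ways of stating the same result can be checked with a few lines of arithmetic. This sketch divides the shortfall by the target in both cases, as the unavailability calculation does; the function name `relative_variance` is illustrative only.

```python
def relative_variance(target: float, actual: float) -> float:
    """Shortfall from target, expressed as a percentage of the target."""
    return 100.0 * abs(actual - target) / target


target_avail, actual_avail = 99.6, 99.0

# Availability view: 0.6 percentage points short of a 99.6% target.
avail_var = relative_variance(target_avail, actual_avail)

# Unavailability view of the same data: 0.4% allowed downtime vs 1.0% actual.
unavail_var = relative_variance(100 - target_avail, 100 - actual_avail)

print(f"{avail_var:.2f}% vs {unavail_var:.0f}%")  # prints 0.60% vs 150%
```

The underlying data is identical; only the choice of denominator (the large availability target versus the small downtime allowance) changes how serious the miss appears.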

Even quoting unavailability does not provide the whole picture. From the Customer's perspective, a single 2-hour outage may be more palatable than six outages of 20 minutes each (or it may not, depending on the application), even though both amount to the same 120 minutes of downtime. The second pattern would certainly create a perception of greater instability.

The purest and most useful measure relates unavailability to its cost to the business. Capturing this requires the Service Desk to accurately record the number of people affected by each outage; this number is then multiplied by the outage duration and by a cost per person per unit of outage time. That cost rate must be established ahead of time. Frequently, it will already have been derived as part of a Business Impact Analysis (BIA) carried out while developing Disaster Recovery Plans (DRPs) for major applications.
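The cost calculation described above amounts to users affected × outage duration × an agreed cost per user per unit of time, summed over all outages in the period. This is a minimal sketch assuming the rate is expressed per user-hour and taken from a prior BIA; the `Outage` class, the `outage_cost` function and the example rate of 50 are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Outage:
    users_affected: int    # as recorded by the Service Desk
    duration_hours: float  # length of the outage in hours


def outage_cost(outages: Iterable[Outage], cost_per_user_hour: float) -> float:
    """Sum of users affected x hours of outage x agreed cost per user-hour."""
    return sum(o.users_affected * o.duration_hours * cost_per_user_hour
               for o in outages)


if __name__ == "__main__":
    RATE = 50.0  # hypothetical rate, e.g. taken from a BIA
    one_long = [Outage(users_affected=40, duration_hours=2.0)]
    six_short = [Outage(users_affected=40, duration_hours=20 / 60) for _ in range(6)]
    print(outage_cost(one_long, RATE), round(outage_cost(six_short, RATE), 2))
```

Because this formula is linear in duration, one 2-hour outage and six 20-minute outages affecting the same 40 users cost the same. If the Customer experiences the frequent outages as worse, that perception has to be captured separately, for instance by also reporting the number of outages.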


Service Culture

"It is important that all levels within the organization understand the value of implementing a Service Level Management culture. Without this commitment throughout the organization, it will be difficult for the line staff to understand it, and want to participate in it."

Management Alert: The Sarbanes-Oxley Act Will Affect Your Enterprise, by Gartner Research, April 2, 2003

The impact of culture on an organization is easily underestimated, yet it strongly influences the way employees function within the organization. Employees' attitudes toward leadership, for example, largely determine which leadership style will be effective within the organization. Similarly, the organization's primary lines of business shape many aspects of how people interact with each other and how they achieve recognition and reward.

The organizational culture also determines how people react to change and, therefore, how successful a given change strategy will be. To cope with cultural differences when implementing processes, change moderators should adopt the leadership style that is most effective for the situation they face.

Cultural elements to be taken into account when implementing or improving processes in an organization:

- attitude towards leadership

- hierarchical or flat/informal structures

- communication: formal or informal

- sensitivity towards change: open vs. reserved

- position: extrovert vs. introvert.

These items are the mechanisms of organizational culture. However, there are other, more ephemeral aspects of culture in the way people interact with each other and with customers, and many staff are both served by, and provide service to, someone else. Almost all organizations understand the value of customer service: after all, without customers there is no service - no service, no performance, no work, no jobs. What is less clear is the value of serving those who provide service to paying customers, i.e., those who provide indirect or "support" services. In most enterprises, all of IT usually falls into this class (although e-business tends to alter this relationship as end customers increasingly interact directly with the technology). These internal relationships form the service chains that make up "end-to-end" service provision. Each interaction or "hand-off" needs to be treated as if the end customer were the recipient of the service.

"I'm sure many of you have heard that internal service quality drives external service quality. I like to believe that that means if we treat each other internally like customers, those individuals in your organizations that service the company's customers will do a better job."

Char LaBounty, Service Level Management - The Value of Establishing Baselines

Every time customers do business with the IT provider, they are, without fully realizing it, scoring the organization on how well it is doing, not only at giving them what they want (hopefully established in agreements), but at fulfilling six basic customer needs:

- Friendliness: The most basic of all customer needs, friendliness is usually associated with being greeted politely and courteously,

- Understanding and empathy: Customers need to feel that the service provider understands and appreciates their circumstances and feelings, without criticism or judgment,

- Fairness: The need to be treated fairly is high up on most customers' list of needs. This will translate into consistency and is enabled through the application of consistent, stated methods and procedures,

- Control: Control represents the customer's need to feel that they have an impact on the way things turn out,

- Options and alternatives: Customers need to feel that other avenues are available for getting what they want accomplished,

- Information: Customers need to be educated and informed about the products, policies, and procedures they encounter when dealing with your company.


Communications and Marketing

"Marketing is essentially the process of understanding, anticipating, shaping, and satisfying the customer's perception of value. For an IT organization, marketing entails knowing the primary internal markets: enterprise executives, operation and line managers, work-area managers, and production end users; investigating and predicting their requirements; developing feedback mechanisms for performance; and articulating service relevant to the internal group's ideas of value."

D. Turick, Marketing the IS Organization: Questions and Answers, Gartner Group Research Note, Sept 26, 1996 - quoted in Tardugno, DiPasquale, Matthews, IT Services: Costs, Metrics, Benchmarking and Marketing, Prentice-Hall, 2000, ISBN: 0-13-019195-7, p. 48

Central to any marketing activity is the service catalogue: 'A "menu" of services - their description, deliverables, availability, limitations, price, and method of accessing them - should be the basis of all marketing communications.' Communications should be regular, use an appropriate mix of 'push' and 'pull' approaches through existing internet delivery channels, and describe service introductions and changes as they occur.

Instilling a service culture depends on communicating performance. Communication is the lifeblood of the organization and the fuel for its processes. It should flow horizontally, elegantly and spontaneously to support service objectives: "Communication lubricates change; it won't guarantee success, but [its absence] will guarantee failure."

Communications and Marketing Roles

Account representatives are the primary overseers of the flow of information between the IS department and its Customers, both corporate services and line-of-business (LOB) IT departments. They do not deal directly with end users (this is the responsibility of the respective LOBs), but are responsible for ensuring that direct customers (as determined by the business portfolios they represent) remain fully informed about the services available to them.

The Service Desk plays a primarily reactive role. As the First Point of Contact (FPOC) for inquiries about services, it must be prepared to direct Users to sources of information and to answer service-related questions.

Management ensures that communication channels remain open and 'lubricated' for easy access. Managers will frequently have to intervene when the news to be disseminated is less than positive or popular.

Operations needs to ensure that user feedback on satisfaction with their services is received and acted upon, and that the remedial actions are communicated back to Customers.

Communicating Performance

How IT service will be measured should be communicated to everyone in the company. The ISA must understand, for example, that "waiting on hold" is one of the things customers like least. The "average wait time" for customer calls will therefore be clearly communicated and displayed to all account representatives, and it will be up to them to pick up the next call quickly as each call finishes. Customers also dislike callbacks or multiple phone calls to resolve a problem, so the "percentage of first-call resolutions" will also be measured, both for the group and for each individual. Periodic customer satisfaction surveys, with the results communicated to all employees, will be important as well.
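The two call-handling measures mentioned above, average wait time and first-call resolution (for the group and for each representative), could be derived from call records along these lines. The `Call` record and its fields are assumptions about what a call-handling system might export, not the format of any specific product; the function names are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Call:
    agent: str                 # account representative who took the call
    wait_seconds: float        # time the caller spent on hold
    resolved_first_call: bool  # closed without a callback or repeat call


def average_wait(calls: List[Call]) -> float:
    """Group-level average time on hold."""
    return mean(c.wait_seconds for c in calls)


def first_call_resolution_pct(calls: List[Call]) -> float:
    """Share of calls resolved on first contact, as a percentage."""
    return 100.0 * sum(c.resolved_first_call for c in calls) / len(calls)


def fcr_by_agent(calls: List[Call]) -> Dict[str, float]:
    """First-call resolution measured individually, per representative."""
    by_agent: Dict[str, List[Call]] = {}
    for c in calls:
        by_agent.setdefault(c.agent, []).append(c)
    return {agent: first_call_resolution_pct(cs) for agent, cs in by_agent.items()}
```

Reporting the group figure alongside the per-representative breakdown supports both uses described above: a shared target displayed to everyone, and individual feedback.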


SLM Process Owner

- primary source of management information on the Service Level Management (SLM) process,

- manages relationships between customers and IS department,

- provide and operate tools to support ongoing performance reporting,

- ensure customer satisfaction with the process,

- ensure that business and stakeholders are involved in collecting business requirements for service level determination.

Service Level Manager

- coordinate the establishment, maintenance and review of SLAs, OLAs and UCs,

- CI owner of SLAs, OLAs and UCs,

- Define and negotiate the level of user services for specific customers, documenting them in SLAs,

- Determine the fit of standard services (as defined in the Service Catalogue) to the customer's service level requirements, and design custom services as necessary,

- Monitor, report on and review the achievement of Service and Operational level objectives,

- Initiate actions (e.g., RfC, Service Improvement Plan [SIP]) to overcome problems identified in meeting service levels.
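Monitoring achievement against service and operational level targets, and initiating remedial action, reduces in its simplest form to comparing achieved values with targets and flagging the misses for review. A minimal sketch, assuming targets and achieved values have already been collected per service; the `ServiceLevel` structure, the function names and the example figures are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ServiceLevel:
    service: str
    metric: str
    target: float
    achieved: float
    higher_is_better: bool = True  # e.g. availability; False for response time


def breaches(levels: List[ServiceLevel]) -> List[ServiceLevel]:
    """Return the service levels whose achieved value misses the target."""
    missed = []
    for lvl in levels:
        if lvl.higher_is_better:
            met = lvl.achieved >= lvl.target
        else:
            met = lvl.achieved <= lvl.target
        if not met:
            missed.append(lvl)
    return missed


def review_report(levels: List[ServiceLevel]) -> List[str]:
    """One line per missed target, flagged for an RfC or SIP decision."""
    return [f"{lvl.service}: {lvl.metric} {lvl.achieved} vs target {lvl.target}"
            f" -> consider RfC/SIP"
            for lvl in breaches(levels)]


if __name__ == "__main__":
    levels = [
        ServiceLevel("Email", "availability %", 99.6, 99.0),
        ServiceLevel("Payroll", "availability %", 99.0, 99.5),
        ServiceLevel("Web portal", "avg response s", 2.0, 3.1, higher_is_better=False),
    ]
    for line in review_report(levels):
        print(line)
```

The `higher_is_better` flag lets one check cover both "at least" targets such as availability and "at most" targets such as response time.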

Customer Account Representative

- Primary interface between IT provider and lines of business,

- Ensure customer requirements are captured and communicated to IS department senior management,

- Foster the business relationship between IT and lines of business,

- Provide a point of escalation for issues involving customer communications and user dissatisfaction with services,

- Advise LOB customers on IT provider policies, organization and strategies,

- Mediate disputes between IT provider and lines of business,

- Meet with customers to review and update SLAs and OLAs on the basis of new and/or revised business needs,

- Advise on the impact of releases, changes and incidents on service commitments.

Service Planning

- design new services,

- assist in establishment of services' funding levels.

Financial Management

- maintain costing formulae related to service charge-backs,

- allocate costs to IT provider organizational units,

- provide estimates of costs for fiscal year budgeting,

- provide service cost estimating advice.

Operations Management

- Configuration Owners for services listed in the Service Catalogue,

- assist in describing the service chains associated with service provisioning,

- assess levels at which services can be provided for different funding and performance scenarios,

- meet OLOs and explain variances from targets.

Senior Leadership Team

- approve new services for introduction in the IS department,

- make decisions on the provision of services at specified levels,

- review and recommend Improvement Initiatives,

- review Balanced Scorecard information describing operational performance,

- act as the final point of escalation for service complaints.


Key Goal and Performance Indicators

A Key Goal Indicator, representing the process goal, is a measure of "what" has to be accomplished. It is a measurable indicator of the process achieving its goals, often defined as a target to achieve.

By comparison, a Key Performance Indicator is a measure of "how well" the process is performing. KPIs often measure a Critical Success Factor and, when monitored and acted upon, identify opportunities to improve the process. These improvements should positively influence the outcome; KPIs therefore have a cause-effect relationship with the Key Goal Indicators of the process.

Key measurable elements of a service include: