Service Operations

4. Service Operation Processes

4.1 Event Management

An event can be defined as any detectable or discernible occurrence that has significance for the management of the IT Infrastructure or the delivery of IT service and evaluation of the impact a deviation might cause to the services. Events are typically notifications created by an IT service, Configuration Item (CI) or monitoring tool.

Effective Service Operation is dependent on knowing the status of the infrastructure and detecting any deviation from normal or expected operation. This is provided by good monitoring and control systems, which are based on two types of tools:

- active monitoring tools that poll key CIs to determine their status and availability. Any exceptions will generate an alert that needs to be communicated to the appropriate tool or team for action

- passive monitoring tools that detect and correlate operational alerts or communications generated by CIs.

4.1.1 Purpose, Goals and Objectives

The ability to detect events, make sense of them and determine the appropriate control action is provided by Event Management. Event Management is therefore the basis for Operational Monitoring and Control (see Appendix B).

In addition, if these events are programmed to communicate operational information as well as warnings and exceptions, they can be used as a basis for automating many routine Operations Management activities, for example executing scripts on remote devices, or submitting jobs for processing, or even dynamically balancing the demand for a service across multiple devices to enhance performance.

Event Management therefore provides the entry point for the execution of many Service Operation processes and activities. In addition, it provides a way of comparing actual performance and behaviour against design standards and SLAs. As such, Event Management also provides a basis for Service Assurance and Reporting; and Service Improvement. This is covered in detail in the Continual Service Improvement publication.

4.1.2 Scope

Event Management can be applied to any aspect of Service Management that needs to be controlled and which can be automated. These include:

- Configuration Items:

- Some CIs will be included because they need to stay in a constant state (e.g. a switch on a network needs to stay on and Event Management tools confirm this by monitoring responses to 'pings').

- Some CIs will be included because their status needs to change frequently and Event Management can be used to automate this and update the CMS (e.g. the updating of a file server).

- Environmental conditions (e.g. fire and smoke detection)

- Software licence monitoring for usage to ensure optimum/legal licence utilization and allocation

- Security (e.g. intrusion detection)

- Normal activity (e.g. tracking the use of an application or the performance of a server).

The difference between monitoring and Event Management

These two areas are very closely related, but slightly different in nature. Event Management is focused on generating and detecting meaningful notifications about the status of the IT Infrastructure and services.

While it is true that monitoring is required to detect and track these notifications, monitoring is broader than Event Management. For example, monitoring tools will check the status of a device to ensure that it is operating within acceptable limits, even if that device is not generating events.

Put more simply, Event Management works with occurrences that are specifically generated to be monitored. Monitoring tracks these occurrences, but it will also actively seek out conditions that do not generate events.


4.1.3 Value To Business

Event Management's value to the business is generally indirect; however, it is possible to determine the basis for its value as follows:

- Event Management provides mechanisms for early detection of incidents. In many cases it is possible for the incident to be detected and assigned to the appropriate group for action before any actual service outage occurs.

- Event Management makes it possible for some types of automated activity to be monitored by exception - thus removing the need for expensive and resource intensive real-time monitoring, while reducing downtime.

- When integrated into other Service Management processes (such as, for example, Availability or Capacity Management), Event Management can signal status changes or exceptions that allow the appropriate person or team to perform early response, thus improving the performance of the process. This, in turn, will allow the business to benefit from more effective and more efficient Service Management overall.

- Event Management provides a basis for automated operations, thus increasing efficiencies and allowing expensive human resources to be used for more innovative work, such as designing new or improved functionality or defining new ways in which the business can exploit technology for increased competitive advantage.

4.1.4 Policies, Principles and Basic Concepts

There are many different types of events, for example:

- Events that signify regular operation:

- notification that a scheduled workload has completed

- a user has logged in to use an application

- an e-mail has reached its intended recipient.

- Events that signify an exception:

- a user attempts to log on to an application with the incorrect password

- an unusual situation has occurred in a business process that may indicate an exception requiring further business investigation (e.g. a web page alert indicates that a payment authorization site is unavailable - impacting financial approval of business transactions)

- a device's CPU is above the acceptable utilization rate

- a PC scan reveals the installation of unauthorized software.

- Events that signify unusual, but not exceptional, operation. These are an indication that the situation may require closer monitoring. In some cases the condition will resolve itself, for example in the case of an unusual combination of workloads - as they are completed, normal operation is restored. In other cases, operator intervention may be required if the situation is repeated or if it continues for too long. These rules or policies are defined in the Monitoring and Control Objectives for that device or service. Examples of this type of event are:

- A server's memory utilization reaches within 5% of its highest acceptable performance level

- The completion time of a transaction is 10% longer than normal.

Two things are significant about the above examples:

- Exactly what constitutes normal versus unusual operation, versus an exception? There is no definitive rule about this. For example, a manufacturer may provide that a benchmark of 75% memory utilization is optimal for application X. However, it is discovered that, under the specific conditions of our organization, response times begin to degrade above 70% utilization. The next section will explore how these figures are determined.

- Each relies on the sending and receipt of a message of some type. These are generally referred to as Event notifications and they don't just happen. The next paragraphs will explore exactly how events are defined, generated and captured.

4.1.5 Process Activities, Methods And Techniques


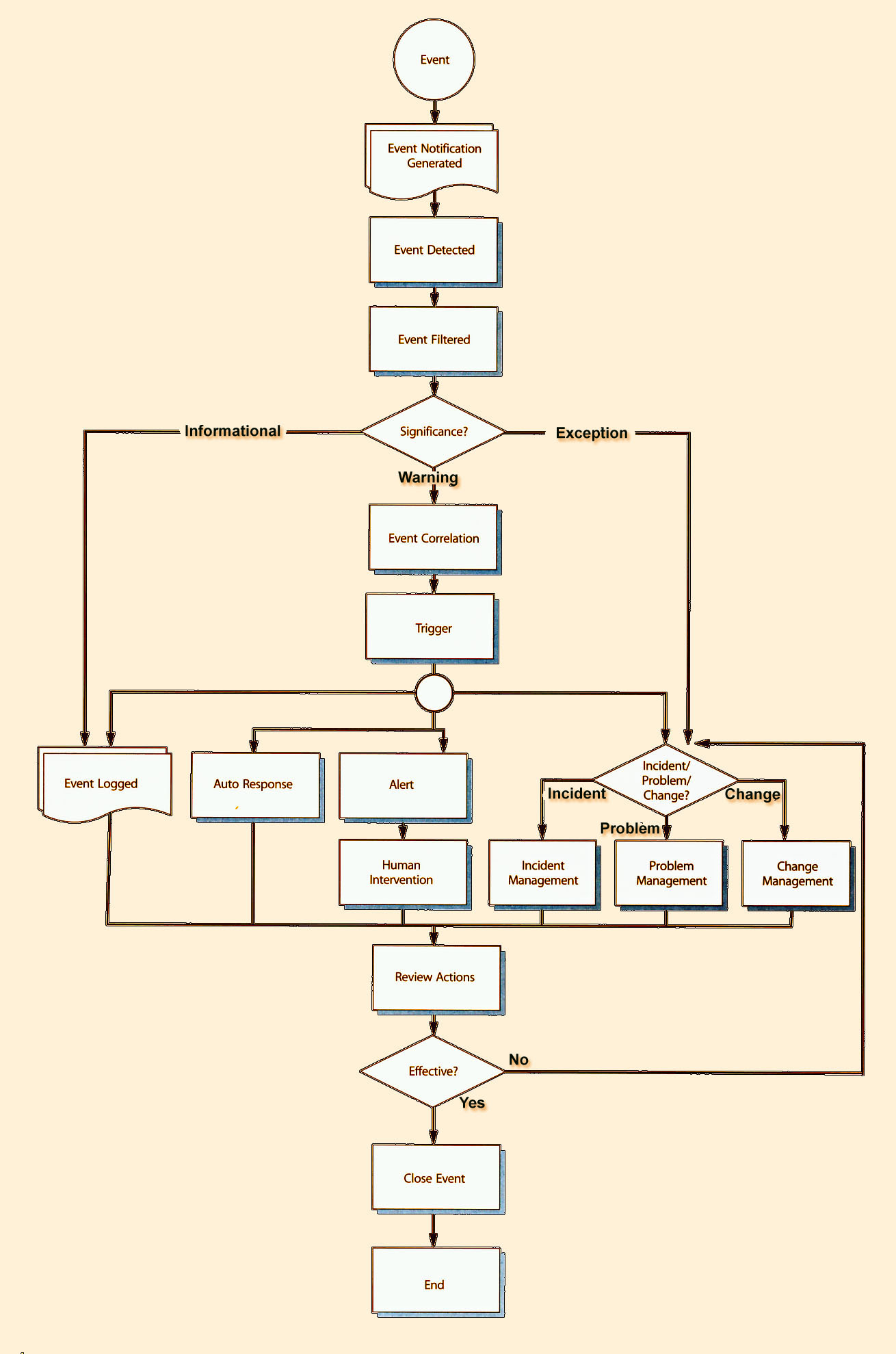

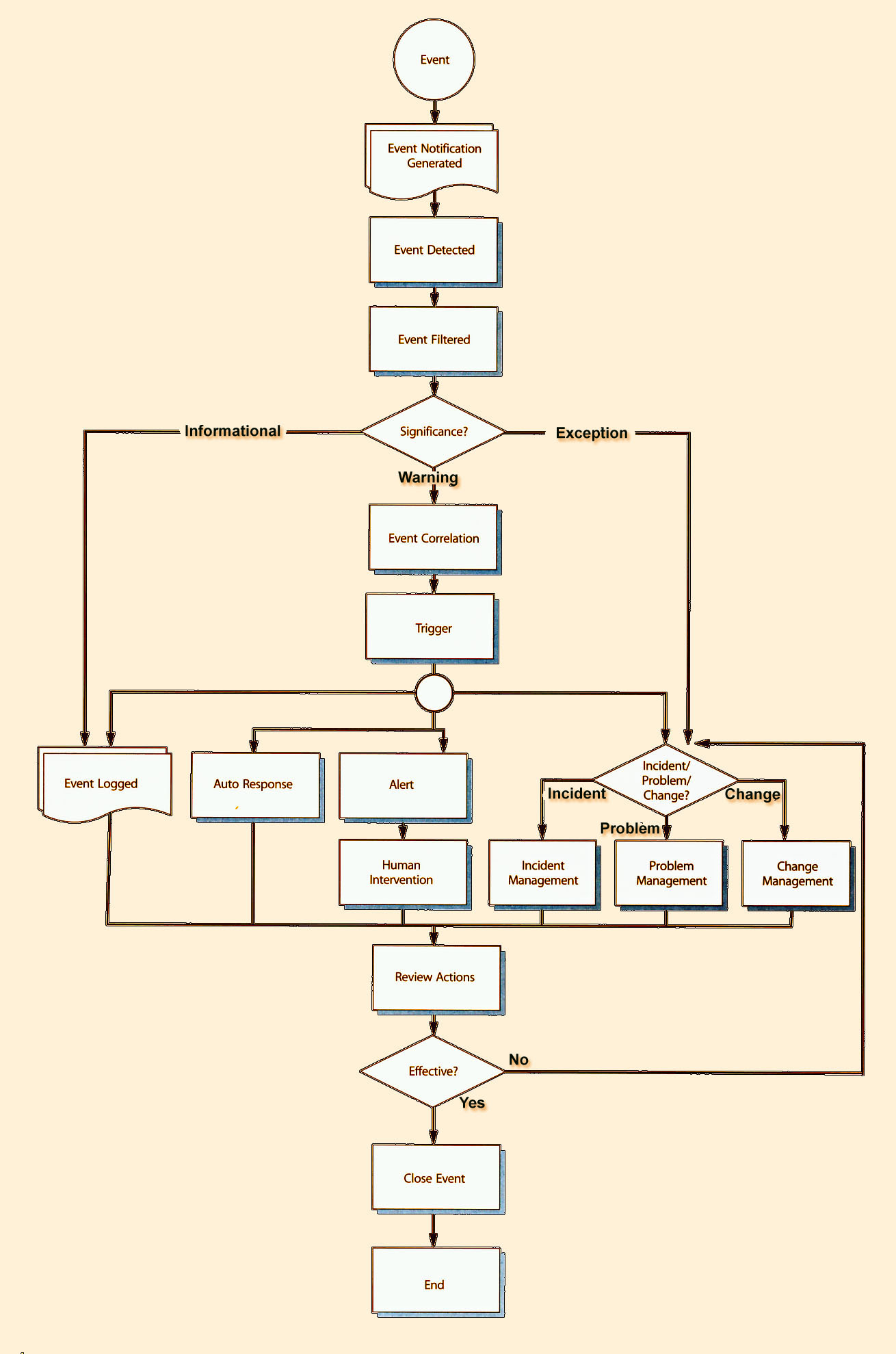

Figure 4.1 The Event Management process

Figure 4.1 is a high-level and generic representation of Event Management. It should be used as a reference and definition point, rather than an actual Event Management flowchart. Each activity in this process is described below.

4.1.5.1 Event Occurs

Events occur continuously, but not all of them are detected or registered. It is therefore important that everybody involved in designing, developing, managing and supporting IT services and the IT Infrastructure that they run on understands what types of event need to be detected.

4.1.5.2 Event Notification

Most CIs are designed to communicate certain information about themselves in one of two ways:

- A device is interrogated by a management tool, which collects certain targeted data. This is often referred to as polling.

- The CI generates a notification when certain conditions are met. The ability to produce these notifications has to be designed and built into the CI, for example a programming hook inserted into an application.

Event notifications can be proprietary, in which case only the manufacturer's management tools can be used to detect events. Most CIs, however, generate Event notifications using an open standard such as SNMP (Simple Network Management Protocol).
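The two notification styles described above can be contrasted in a minimal sketch. The `ConfigurationItem` class, its method names and the CPU limit are hypothetical, chosen only for illustration; a real CI would expose this through a protocol such as SNMP rather than through Python method calls.

```python
from typing import Callable, Dict, List


class ConfigurationItem:
    """Hypothetical CI that can be polled and can also emit notifications."""

    def __init__(self, name: str, cpu_limit: float) -> None:
        self.name = name
        self.cpu_limit = cpu_limit  # assumed threshold, in percent
        self.cpu = 0.0
        self._listeners: List[Callable[[str], None]] = []

    def subscribe(self, listener: Callable[[str], None]) -> None:
        """Register a management tool that wants to receive notifications."""
        self._listeners.append(listener)

    def read_status(self) -> Dict[str, object]:
        """Polling: the management tool interrogates the CI for targeted data."""
        return {"ci": self.name, "cpu": self.cpu}

    def set_cpu(self, value: float) -> None:
        """Notification: the CI itself reports when a built-in condition is met."""
        self.cpu = value
        if value > self.cpu_limit:
            message = f"{self.name}: cpu {value:.0f}% above limit {self.cpu_limit:.0f}%"
            for listener in self._listeners:
                listener(message)
```

In the polling case the management tool decides when to ask; in the notification case the condition has to be designed into the CI in advance, which is why the choice of events is ideally made during Service Design.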

Many CIs are configured to generate a standard set of events, based on the designer's experience of what is required to operate the CI, with the ability to generate additional types of event by 'turning on' the relevant event generation mechanism. For other CI types, some form of 'agent' software will have to be installed in order to initiate the monitoring. Often this monitoring feature is free, but sometimes there is a cost to the licensing of the tool.

In an ideal world, the Service Design process should define which events need to be generated and then specify how this can be done for each type of CI. During Service Transition, the event generation options would be set and tested.

In many organizations, however, defining which events to generate is done by trial and error. System managers use the standard set of events as a starting point and then tune the CI over time, to include or exclude events as required. The problem with this approach is that it only takes into account the immediate needs of the staff managing the device and does not facilitate good planning or improvement. In addition, it makes it very difficult to monitor and manage the service over all devices and staff. One approach to combating this problem is to review the set of events as part of continual improvement activities.

A general principle of Event notification is that the more meaningful the data it contains and the more targeted the audience, the easier it is to make decisions about the event. Operators are often confronted by coded error messages and have no idea how to respond to them or what to do with them. Meaningful notification data and clearly defined roles and responsibilities need to be articulated and documented during Service Design and Service Transition (see also paragraph 4.1.10.1 on 'Instrumentation'). If roles and responsibilities are not clearly defined, then when an alert is sent to a wide audience no one knows who is doing what, and this can lead to things being missed or to duplicated effort.

4.1.5.3 Event Detection

Once an Event notification has been generated, it will be detected by an agent running on the same system, or transmitted directly to a management tool specifically designed to read and interpret the meaning of the event.

4.1.5.4 Event Filtering

The purpose of filtering is to decide whether to communicate the event to a management tool or to ignore it. If ignored, the event will usually be recorded in a log file on the device, but no further action will be taken.

The reason for filtering is that it is not always possible to turn Event notification off, even though a decision has been made that it is not necessary to generate that type of event. It may also be decided that only the first in a series of repeated Event notifications will be transmitted.

During the filtering step, the first level of correlation is performed, i.e. the determination of whether the event is informational, a warning, or an exception (see next step). This correlation is usually done by an agent that resides on the CI or on a server to which the CI is connected.

The filtering step is not always necessary. For some CIs, every event is significant and moves directly into a management tool's correlation engine, even if it is duplicated. Also, it may have been possible to turn off all unwanted Event notifications.
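As an illustration of the filtering step, the sketch below ignores event types that have been judged unnecessary and forwards only the first in a series of repeated notifications from the same source. The class name, the event type strings and the in-memory log are assumptions made for the example, not part of any particular tool.

```python
from typing import Dict, Iterable, List, Tuple


class EventFilter:
    """Hypothetical agent-side filter: forward an event or just log it locally."""

    def __init__(self, ignored_types: Iterable[str]) -> None:
        self.ignored_types = set(ignored_types)
        # Stands in for the log file kept on the device.
        self.local_log: List[Tuple[str, str]] = []
        # Last forwarded event type per source, used to spot repeats.
        self._last_forwarded: Dict[str, str] = {}

    def accept(self, source: str, event_type: str) -> bool:
        """Return True if the event should be sent to the management tool."""
        if event_type in self.ignored_types:
            self.local_log.append((source, event_type))
            return False
        if self._last_forwarded.get(source) == event_type:
            # Repeat of the event already forwarded: record it, do not resend.
            self.local_log.append((source, event_type))
            return False
        self._last_forwarded[source] = event_type
        return True
```

For CIs where every event is significant, such a filter would simply be bypassed and all events passed straight to the correlation engine.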

4.1.5.5 Significance of Events

Every organization will have its own categorization of the significance of an event, but it is suggested that at least these three broad categories be represented:

- Informational: This refers to an event that does not require any action and does not represent an exception. They are typically stored in the system or service log files and kept for a predetermined period. Informational events are typically used to check on the status of a device or service, or to confirm the successful completion of an activity. Informational events can also be used to generate statistics (such as the number of users logged on to an application during a certain period) and as input into investigations (such as which jobs completed successfully before the transaction processing queue hung). Examples of informational events include:

- A user logs onto an application

- A job in the batch queue completes successfully

- A device has come online

- A transaction is completed successfully.

- Warning: A warning is an event that is generated when a service or device is approaching a threshold. Warnings are intended to notify the appropriate person, process or tool so that the situation can be checked and the appropriate action taken to prevent an exception. Warnings are not typically raised for a device failure, although there is some debate about whether the failure of a redundant device is a warning or an exception (since the service is still available). A good rule is that every failure should be treated as an exception, since the risk of an incident impacting the business is much greater. Examples of warnings are:

- Memory utilization on a server is currently at 65% and increasing. If it reaches 75%, response times will be unacceptably long and the OLA for that department will be breached.

- The collision rate on a network has increased by 15% over the past hour.

- Exception: An exception means that a service or device is currently operating abnormally (however that has been defined). Typically, this means that an OLA and SLA have been breached and the business is being impacted. Exceptions could represent a total failure, impaired functionality or degraded performance. Please note, though, that an exception does not always represent an incident. For example, an exception could be generated when an unauthorized device is discovered on the network. This can be managed by using either an Incident Record or a Request for Change (or even both), depending on the organization's Incident and Change Management policies. Examples of exceptions include:

- A server is down

- Response time of a standard transaction across the network has slowed to more than 15 seconds

- More than 150 users have logged on to the General Ledger application concurrently

- A segment of the network is not responding to routine requests.
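The three categories can be expressed as a simple threshold check. The sketch below reuses the memory utilization figures from the warning example above (65% and 75%); the function name, the enum and the default values are illustrative assumptions, and each organization would substitute its own categories and thresholds.

```python
from enum import Enum


class Significance(Enum):
    INFORMATIONAL = "informational"
    WARNING = "warning"
    EXCEPTION = "exception"


def classify_utilization(
    percent: float,
    warning_at: float = 65.0,    # assumed: approaching the threshold
    exception_at: float = 75.0,  # assumed: the agreed level is breached
) -> Significance:
    """Map a utilization reading onto one of the three broad categories."""
    if percent >= exception_at:
        return Significance.EXCEPTION
    if percent >= warning_at:
        return Significance.WARNING
    return Significance.INFORMATIONAL
```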

4.1.5.6 Event Correlation

If an event is significant, a decision has to be made about exactly what the significance is and what actions need to be taken to deal with it. It is here that the meaning of the event is determined.

Correlation is normally done by a 'Correlation Engine', usually part of a management tool that compares the event with a set of criteria and rules in a prescribed order. These criteria are often called Business Rules, although they are generally fairly technical. The idea is that the event may represent some impact on the business and the rules can be used to determine the level and type of business impact.

A Correlation Engine is programmed according to the performance standards created during Service Design and any additional guidance specific to the operating environment.

Examples of what Correlation Engines will take into account include:

- Number of similar events (e.g. this is the third time that the same user has logged in with the incorrect password, or a business application reports an unusual pattern of usage of a mobile telephone that could indicate that the device has been lost or stolen)

- Number of CIs generating similar events

- Whether a specific action is associated with the code or data in the event

- Whether the event represents an exception

- A comparison of utilization information in the event with a maximum or minimum standard (e.g. has the device exceeded a threshold?)

- Whether additional data is required to investigate the event further, and possibly even a collection of that data by polling another system or database

- Categorization of the event

- Assigning a priority level to the event.
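A minimal sketch of a rule-based Correlation Engine follows. It evaluates rules in a prescribed order and takes into account the number of similar events and a threshold comparison, two of the factors listed above. The event fields (`source`, `type`, `value`, `threshold`) and the response names are hypothetical; real engines use their own rule languages and data models.

```python
from collections import Counter
from typing import Callable, Dict, List, Optional, Tuple

Event = Dict[str, object]
# (rule name, predicate taking the event and the similar-event count, response)
Rule = Tuple[str, Callable[[Event, int], bool], str]


class CorrelationEngine:
    """Hypothetical engine: the first matching rule decides the response."""

    def __init__(self, rules: List[Rule]) -> None:
        self.rules = list(rules)
        self._similar: Counter = Counter()

    def correlate(self, event: Event) -> Optional[str]:
        key = (event["source"], event["type"])
        self._similar[key] += 1
        count = self._similar[key]
        for _name, matches, response in self.rules:
            if matches(event, count):
                return response
        return None  # no rule matched: the event is only logged


RULES: List[Rule] = [
    ("repeated_failed_login",
     lambda e, n: e["type"] == "login_failed" and n >= 3,
     "alert_security"),
    ("threshold_exceeded",
     lambda e, n: e.get("value", 0) > e.get("threshold", float("inf")),
     "open_incident"),
]
```

Because rules are checked in order, more specific rules should be placed before more general ones.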

4.1.5.7 Trigger

If the correlation activity recognizes an event, a response will be required. The mechanism used to initiate that response is called a trigger.

There are many different types of triggers, each designed specifically for the task it has to initiate. Some examples include:

- Incident Triggers that generate a record in the Incident Management system, thus initiating the Incident Management process

- Change Triggers that generate a Request for Change (RFC), thus initiating the Change Management process

- A trigger resulting from an approved RFC that has been implemented but caused the event, or from an unauthorized change that has been detected - in either case this will be referred to Change Management for investigation

- Scripts that execute specific actions, such as submitting batch jobs or rebooting a device

- Paging systems that will notify a person or team of the event by mobile phone

- Database triggers that restrict access of a user to specific records or fields, or that create or delete entries in the database.
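One way to picture triggers is as named handlers that are looked up once correlation has decided on a response. The registry below is a sketch under that assumption; the trigger names and the handlers in the usage example are invented for illustration.

```python
from typing import Callable, Dict

Event = Dict[str, object]
Handler = Callable[[Event], str]


class TriggerRegistry:
    """Hypothetical mapping from a trigger name to the task it initiates."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, name: str, handler: Handler) -> None:
        self._handlers[name] = handler

    def fire(self, name: str, event: Event) -> str:
        """Initiate the response associated with the named trigger."""
        if name not in self._handlers:
            raise KeyError(f"no trigger registered under {name!r}")
        return self._handlers[name](event)
```

A usage example, with lists standing in for the Incident Management and Change Management systems:

```python
incidents, rfcs = [], []
registry = TriggerRegistry()
registry.register("incident", lambda e: incidents.append(e) or "incident logged")
registry.register("change", lambda e: rfcs.append(e) or "RFC raised")
registry.fire("incident", {"ci": "srv01", "type": "server_down"})
```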

4.1.5.8 Response Selection

At this point in the process, there are a number of response options available. It is important to note that the response options can be chosen in any combination. For example, it may be necessary to preserve the log entry for future reference, but at the same time escalate the event to an Operations Management staff member for action.

The options in the flowchart are examples. Different organizations will have different options, and they are sure to be more detailed. For example, there will be a range of auto responses for each different technology. The process of determining which one is appropriate and how to execute it is not represented in this flowchart. Some of the options available are:

- Event logged: Regardless of what activity is performed, it is a good idea to have a record of the event and any subsequent actions. The event can be logged as an Event Record in the Event Management tool, or it can simply be left as an entry in the system log of the device or application that generated the event. If this is the case, though, there needs to be a standing order for the appropriate Operations Management staff to check the logs on a regular basis and clear instructions about how to use each log. It should also be remembered that the event information in the logs may not be meaningful until an incident occurs and the Technical Management staff use the logs to investigate where the incident originated. This means that the Event Management procedures for each system or team need to define standards about how long events are kept in the logs before being archived and deleted.

- Auto response: Some events are understood well enough that the appropriate response has already been defined and automated. This is normally as a result of good design or of previous experience (usually Problem Management). The trigger will initiate the action and then evaluate whether it was completed successfully. If not, an Incident or Problem Record will be created. Examples of auto responses include:

- Rebooting a device

- Restarting a service

- Submitting a job into batch

- Changing a parameter on a device

- Locking a device or application to protect it against unauthorized access.

- Alert and human intervention: If the event requires human intervention, it will need to be escalated. The purpose of the alert is to ensure that the person with the skills appropriate to deal with the event is notified. The alert will contain all the information necessary for that person to determine the appropriate action - including reference to any documentation required (e.g. user manuals). It is important to note that this is not necessarily the same as the functional escalation of an incident, where the emphasis is on restoring service within an agreed time (which may require a variety of activities). The alert requires a person, or team, to perform a specific action, possibly on a specific device and possibly at a specific time, e.g. changing a toner cartridge in a printer when the level is low.

- Incident, problem or change? Some events will represent a situation where the appropriate response will need to be handled through the Incident, Problem or Change Management process. These are discussed below, but it is important to note that a single incident may initiate any one or a combination of these three processes - for example, a non-critical server failure is logged as an incident, but as there is no workaround, a Problem Record is created to determine the root cause and resolution and an RFC is logged to relocate the workload onto an alternative server while the problem is resolved.

- Open an RFC: There are two places in the Event Management process where an RFC can be created:

- When an exception occurs: For example, a scan of a network segment reveals that two new devices have been added without the necessary authorization. A way of dealing with this situation is to open an RFC, which can be used as a vehicle for the Change Management process to deal with the exception (as an alternative to the more conventional approach of opening an incident that would be routed via the Service Desk to Change Management). Investigation by Change Management is appropriate here since unauthorized changes imply that the Change Management process was not effective.

- Correlation identifies that a change is needed: In this case the event correlation activity determines that the appropriate response to an event is for something to be changed. For example, a performance threshold has been reached and a parameter on a major server needs to be tuned. How does the correlation activity determine this? It was programmed to do so either in the Service Design process or because this has happened before and Problem Management or Operations Management updated the Correlation Engine to take this action.

- Open an Incident Record: As with an RFC, an incident can be generated immediately when an exception is detected, or when the Correlation Engine determines that a specific type or combination of events represents an incident. When an Incident Record is opened, as much information as possible should be included - with links to the events concerned and if possible a completed diagnostic script.

- Open or link to a Problem Record: It is rare for a Problem Record to be opened without related incidents (for example as a result of a Service Failure Analysis (see Service Design publication) or maturity assessment, or because of a high number of retry network errors, even though a failure has not yet occurred). In most cases this step refers to linking an incident to an existing Problem Record. This will assist the Problem Management teams to reassess the severity and impact of the problem, and may result in a changed priority to an outstanding problem. However, it is possible, with some of the more sophisticated tools, to evaluate the impact of the incidents and also to raise a Problem Record automatically, where this is warranted, to allow root cause analysis to commence immediately.
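The auto response option described above follows a simple pattern: initiate the action, evaluate whether it completed successfully and, if not, create a record for follow-up. The sketch below assumes the action, the verification check and the incident logging are supplied as callables; all names are hypothetical.

```python
from typing import Callable, Dict

Event = Dict[str, object]


def auto_respond(
    event: Event,
    action: Callable[[Event], None],
    verify: Callable[[Event], bool],
    open_incident: Callable[[Event], None],
) -> str:
    """Run an automated response and escalate to an incident if it fails."""
    try:
        action(event)            # e.g. restart a service or reboot a device
        succeeded = verify(event)
    except Exception:
        # Assumption: an action that raises is treated as a failed response.
        succeeded = False
    if succeeded:
        return "resolved"
    open_incident(event)
    return "incident_opened"
```

The verification step matters as much as the action itself; without it, a failed restart would go unnoticed until users reported the problem.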

Special types of incident: In some cases an event will indicate an exception that does not directly impact any IT service, for example, a redundant air conditioning unit fails, or unauthorized entry to a data centre. Guidelines for these events are as follows:

- An incident should be logged using an Incident Model that is appropriate for that type of exception, e.g. an Operations Incident or Security Incident (see paragraph 4.2.4.2 for more details of Incident Models).

- The incident should be escalated to the group that manages that type of incident.

- As there is no outage, the Incident Model used should reflect that this was an operational issue rather than a service issue. The statistics would not normally be reported to customers or users, unless they can be used to demonstrate that the money invested in redundancy was a good investment.

- These incidents should not be used to calculate downtime, and can in fact be used to demonstrate how proactive IT has been in making services available.

4.1.5.9 Review Actions

With thousands of events being generated every day, it is not possible formally to review every individual event. However, it is important to check that any significant events or exceptions have been handled appropriately, or to track trends or counts of event types, etc. In many cases this can be done automatically, for example polling a server that had been rebooted using an automated script to see that it is functioning correctly.

In the cases where events have initiated an incident, problem and/or change, the Action Review should not duplicate any reviews that have been done as part of those processes. Rather, the intention is to ensure that the handover between the Event Management process and other processes took place as designed and that the expected action did indeed take place. This will ensure that incidents, problems or changes originating within Operations Management do not get lost between the teams or departments.

The Review will also be used as input into continual improvement and the evaluation and audit of the Event Management process.

4.1.5.10 Close Event

Some events will remain open until a certain action takes place, for example an event that is linked to an open incident. However, most events are not 'opened' or 'closed'.

Informational events are simply logged and then used as input to other processes, such as Backup and Storage Management. Auto-response events will typically be closed by the generation of a second event. For example, a device generates an event and is rebooted through auto response - as soon as that device is successfully back online, it generates an event that effectively closes the loop and clears the first event.

It is sometimes very difficult to relate the open event and the close notifications as they are in different formats. It is optimal that devices in the infrastructure produce 'open' and 'close' events in the same format and specify the change of status. This allows the correlation step in the process to easily match open and close notifications.
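When 'open' and 'close' notifications share a format, matching them is straightforward. The sketch below assumes every event carries a CI name, a condition and a status of either `open` or `close`; these field names are illustrative only.

```python
from typing import Dict, Tuple

Event = Dict[str, str]


class OpenEventTracker:
    """Hypothetical tracker that pairs 'close' events with earlier 'open' ones."""

    def __init__(self) -> None:
        self.open_events: Dict[Tuple[str, str], Event] = {}

    def process(self, event: Event) -> str:
        key = (event["ci"], event["condition"])
        if event["status"] == "open":
            # Keep the first open event; later duplicates do not replace it.
            self.open_events.setdefault(key, event)
            return "opened"
        if event["status"] == "close":
            if self.open_events.pop(key, None) is not None:
                return "closed"
            return "unmatched"  # close received with no matching open event
        raise ValueError(f"unknown status: {event['status']!r}")
```

If the two notifications used different formats, the key could not be derived the same way from both, and a translation step would be needed before matching.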

In the case of events that generated an incident, problem or change, these should be formally closed with a link to the appropriate record from the other process.

4.1.6 Triggers, Input And Output/interprocess Interfaces

Event Management can be initiated by any type of occurrence. The key is to define which of these occurrences is significant and which need to be acted upon. Triggers include:

- Exceptions to any level of CI performance defined in the design specifications, OLAs or SOPs

- Exceptions to an automated procedure or process, e.g. a routine change that has been assigned to a build team has not been completed in time

- An exception within a business process that is being monitored by Event Management

- The completion of an automated task or job

- A status change in a device or database record

- Access of an application or database by a user or automated procedure or job

- A situation where a device, database or application, etc. has reached a predefined threshold of performance.

Event Management can interface to any process that requires monitoring and control, especially those that do not require real-time monitoring, but which do require some form of intervention following an event or group of events. Examples of interfaces with other processes include:

- Interface with business applications and/or business processes to allow potentially significant business events to be detected and acted upon (e.g. a business application reports abnormal activity on a customer's account that may indicate some sort of fraud or security breach).

- The primary ITSM relationships are with Incident, Problem and Change Management. These interfaces are described in some detail in paragraph 4.1.5.8.

- Capacity and Availability Management are critical in defining what events are significant, what appropriate thresholds should be and how to respond to them. In return, Event Management will improve the performance and availability of services by responding to events when they occur and by reporting on actual events and patterns of events to determine (by comparison with SLA targets and KPIs) if there is some aspect of the infrastructure design or operation that can be improved.

- Configuration Management is able to use events to determine the current status of any CI in the infrastructure. Comparing events with the authorized baselines in the Configuration Management System (CMS) will help to determine whether there is unauthorized Change activity taking place in the organization (see Service Transition publication).

- Asset Management (covered in more detail in the Service Design and Transition publications) can use Event Management to determine the lifecycle status of assets. For example, an event could be generated to signal that a new asset has been successfully configured and is now operational.

- Events can be a rich source of information that can be processed for inclusion in Knowledge Management systems. For example, patterns of performance can be correlated with business activity and used as input into future design and strategy decisions.

- Event Management can play an important role in ensuring that potential impact on SLAs is detected early and any failures are rectified as soon as possible so that impact on service targets is minimized.

4.1.7 Information Management

Key information involved in Event Management includes the following:

- SNMP messages, which are a standard way of communicating technical information about the status of components of an IT Infrastructure.

- Management Information Bases (MIBs) of IT devices. An MIB is the database on each device that contains information about that device, including its operating system, BIOS version, configuration of system parameters, etc. The ability to interrogate MIBs and compare them to a norm is critical to being able to generate events.

- Vendors' monitoring tools and agent software.

- Correlation Engines contain detailed rules to determine the significance and appropriate response to events. Details on this are provided in paragraph 4.1.5.6.

- There is no standard Event Record for all types of event. The exact contents and format of the record depend on the tools being used and on what is being monitored (e.g. a server and the Change Management tools will have very different data and will probably use different formats). However, there is some key data that is usually required from each event for it to be useful in analysis. It should typically include the:

- Device

- Component

- Type of failure

- Date/time

- Parameters in exception

- Value.
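The key data listed above could be captured in a record such as the following. This is only a sketch: the field names and types are assumptions, and, as noted, there is no standard Event Record format.

```python
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class EventRecord:
    """Hypothetical minimal Event Record holding the key data for analysis."""

    device: str          # the CI that raised the event
    component: str       # the part of the device concerned
    failure_type: str    # type of failure
    timestamp: datetime  # date/time of the event
    parameter: str       # parameter in exception
    value: float         # observed value of that parameter


record = EventRecord(
    device="srv01",
    component="memory",
    failure_type="threshold_exceeded",
    timestamp=datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc),
    parameter="utilization_percent",
    value=78.0,
)
```

Calling `asdict(record)` turns the record into a plain dictionary, which is convenient when passing it to a logging or reporting tool.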

4.1.8 Metrics

For each measurement period in question, the metrics to check on the effectiveness and efficiency of the Event Management process should include the following:

- Number of events by category

- Number of events by significance

- Number and percentage of events that required human intervention and whether this was performed

- Number and percentage of events that resulted in incidents or changes

- Number and percentage of events caused by existing problems or Known Errors. This may result in a change to the priority of work on that problem or Known Error

- Number and percentage of repeated or duplicated events. This will help in the tuning of the Correlation Engine to eliminate unnecessary event generation and can also be used to assist in the design of better event generation functionality in new services

- Number and percentage of events indicating performance issues (for example, growth in the number of times an application exceeded its transaction thresholds over the past six months)

- Number and percentage of events indicating potential availability issues (e.g. failovers to alternative devices, or excessive workload swapping)

- Number and percentage of each type of event per platform or application

- Number and ratio of events compared with the number of incidents.
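Several of the metrics above are simple counts and percentages over the events logged in a measurement period. The following is a minimal sketch, assuming each event is a dictionary with the hypothetical keys `category`, `significance`, `needed_human`, `raised_incident` and `fingerprint` (a value that is identical for repeated or duplicated events). A real tool would expose its own schema.

```python
from collections import Counter


def event_metrics(events, incident_count):
    """Summarise one measurement period from a list of event dictionaries."""
    total = len(events)

    def pct(n):
        # Percentage of all events in the period, rounded to one decimal place.
        return round(100.0 * n / total, 1) if total else 0.0

    # Every occurrence of a fingerprint beyond the first counts as a duplicate.
    seen = Counter(e["fingerprint"] for e in events)
    duplicates = sum(count - 1 for count in seen.values())
    human = sum(1 for e in events if e["needed_human"])
    incidents = sum(1 for e in events if e["raised_incident"])

    return {
        "by_category": dict(Counter(e["category"] for e in events)),
        "by_significance": dict(Counter(e["significance"] for e in events)),
        "human_intervention": (human, pct(human)),
        "resulted_in_incident": (incidents, pct(incidents)),
        "duplicates": (duplicates, pct(duplicates)),
        "events_per_incident": total / incident_count if incident_count else None,
    }


sample = [
    {"category": "network", "significance": "warning",
     "needed_human": False, "raised_incident": False, "fingerprint": "a"},
    {"category": "network", "significance": "warning",
     "needed_human": False, "raised_incident": False, "fingerprint": "a"},
    {"category": "server", "significance": "exception",
     "needed_human": True, "raised_incident": True, "fingerprint": "b"},
    {"category": "server", "significance": "informational",
     "needed_human": False, "raised_incident": False, "fingerprint": "c"},
]
summary = event_metrics(sample, incident_count=2)
```

A rising duplicate percentage from one period to the next would be one signal that the Correlation Engine rules need tuning.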

4.1.9 Challenges, Critical Success Factors And Risks

4.1.9.1 Challenges

There are a number of challenges that might be encountered:

- An initial challenge may be to obtain funding for the necessary tools and effort needed to install and exploit the benefits of the tools.

- One of the greatest challenges is setting the correct level of filtering. Setting the level of filtering incorrectly can result in either being flooded with relatively insignificant events, or not being able to detect relatively important events until it is too late.

- Rolling out the necessary monitoring agents across the entire IT infrastructure may be a difficult and time-consuming activity requiring an ongoing commitment over quite a long period of time. There is a danger that other activities may arise that could divert resources and delay the rollout.

- Acquiring the necessary skills can be time-consuming and costly.

4.1.9.2 Critical Success Factors

In order to obtain the necessary funding a compelling Business Case should be prepared showing how the benefits of effective Event Management can far outweigh the costs - giving a positive return on investment.

One of the most important CSFs is achieving the correct level of filtering. This is complicated by the fact that the significance of events changes. For example, a user logging into a system today is normal, but if that user leaves the organization and then tries to log in, it is a security breach.

There are three keys to the correct level of filtering, as follows:

- Integrate Event Management into all Service Management processes where feasible. This will ensure that only the events significant to these processes are reported.

- Design new services with Event Management in mind (this is discussed in detail in paragraph 4.1.10).

- Trial and error. No matter how thoroughly Event Management is prepared, there will be classes of events that are not properly filtered. Event Management must therefore include a formal process to evaluate the effectiveness of filtering.

Proper planning is needed for the rollout of the monitoring agent software across the entire IT Infrastructure. This should be regarded as a project with realistic timescales and adequate resources being allocated and protected throughout the duration of the project.

4.1.9.3 Risks

The key risks are those already mentioned above: failure to obtain adequate funding; failure to ensure the correct level of filtering; and failure to maintain momentum in rolling out the necessary monitoring agents across the IT Infrastructure. If any of these risks is not addressed, it could adversely affect the success of Event Management.

4.1.10 Designing for Event Management

Effective Event Management is not designed once a service has been deployed into Operations. Since Event Management is the basis for monitoring the performance and availability of a service, the exact targets and mechanisms for monitoring should be specified and agreed during the Availability and Capacity Management processes (see Service Design publication).

However, this does not mean that Event Management is designed by a group of remote system developers and then released to Operations Management together with the system that has to be managed. Nor does it mean that, once designed and agreed, Event Management becomes static - day-to-day operations will define additional events, priorities, alerts and other improvements that will feed through the Continual Improvement process back into Service Strategy, Service Design etc.

Service Operation functions will be expected to participate in the design of the service and how it is measured (see section 3.4).

For Event Management, the specific design areas include the following.

4.1.10.1 Instrumentation

Instrumentation is the definition of what can be monitored about CIs and the way in which their behaviour can be affected. In other words, instrumentation is about defining and designing exactly how to monitor and control the IT Infrastructure and IT services.

Instrumentation is partly about a set of decisions that need to be made and partly about designing mechanisms to execute these decisions.

Decisions that need to be made include:

- What needs to be monitored?

- What type of monitoring is required (e.g. active or passive; performance or output)?

- When do we need to generate an event?

- What type of information needs to be communicated in the event?

- Who are the messages intended for?

Mechanisms that need to be designed include:

- How will events be generated?

- Does the CI already have event generation mechanisms as a standard feature and, if so, which of these will be used?

- Are they sufficient or does the CI need to be customized to include additional mechanisms or information?

- What data will be used to populate the Event Record?

- Are events generated automatically or does the CI have to be polled?

- Where will events be logged and stored?

- How will supplementary data be gathered?

Note: A strong interface exists here with the application's design. All applications should be coded in such a way that meaningful and detailed error messages/codes are generated at the exact point of failure - so that these can be included in the event and allow swift diagnosis and resolution of the underlying cause. The need for the inclusion and testing of such error messaging is covered in more detail in the Service Transition publication.

4.1.10.2 Error Messaging

Error messaging is important for all components (hardware, software, networks, etc.). It is particularly important that all software applications are designed to support Event Management. This might include the provision of meaningful error messages and/or codes that clearly indicate the specific point of failure and the most likely cause. In such cases the testing of new applications should include testing of accurate event generation.
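One possible way for an application to provide such messages is to raise an error that carries a code, the failing component and the most likely cause, and to copy these fields into the event. The sketch below is illustrative only: the class `ApplicationError`, the helper `to_event`, the code `ORD-503` and the `load_order` function are all invented for the example.

```python
class ApplicationError(Exception):
    """Error raised at the point of failure with a code and probable cause."""

    def __init__(self, code, component, probable_cause):
        super().__init__(f"{code} in {component}: {probable_cause}")
        self.code = code
        self.component = component
        self.probable_cause = probable_cause


def to_event(err):
    """Copy the structured error fields into an event payload."""
    return {
        "error_code": err.code,
        "component": err.component,
        "probable_cause": err.probable_cause,
        "message": str(err),
    }


def load_order(order_id, db_available):
    # The error is raised exactly where the failure is detected.
    if not db_available:
        raise ApplicationError("ORD-503", "order-db", "connection pool exhausted")
    return {"id": order_id}


try:
    load_order(42, db_available=False)
except ApplicationError as err:
    event = to_event(err)
```

Testing a new application could then include a check that each failure path produces an event with the expected code.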

Newer technologies such as Java Management Extensions (JMX) or HawkNL™ provide the tools for building distributed, web-based, modular and dynamic solutions for managing and monitoring devices, applications and service-driven networks. These can be used to reduce or eliminate the need for programmers to include error messaging within the code - allowing a valuable level of normalization and code-independence.

4.1.10.3 Event Detection and Alert Mechanisms

Good Event Management design will also include the design and population of the tools used to filter, correlate and escalate Events.

The Correlation Engine specifically will need to be populated with the rules and criteria that will determine the significance and subsequent action for each type of event.
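To make the idea of populating rules concrete, the sketch below models a rule as a matching criterion paired with a significance and an action, evaluated in order with the first match winning. This is a simplified assumption about how such rules might be represented, not a description of any real Correlation Engine. The parameter name, the threshold values and the action labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Rule:
    name: str
    matches: Callable[[dict], bool]  # criteria applied to an event
    significance: str                # informational, warning or exception
    action: str                      # hypothetical action label


# Hypothetical rules; evaluated in order, so the strictest rule comes first.
RULES = [
    Rule("disk nearly full",
         lambda e: e["parameter"] == "disk_used_percent" and e["value"] >= 95,
         "exception", "open_incident"),
    Rule("disk filling up",
         lambda e: e["parameter"] == "disk_used_percent" and e["value"] >= 80,
         "warning", "alert_operator"),
]

# Events that match no rule are simply logged.
DEFAULT = ("informational", "log_only")


def correlate(event: dict) -> Tuple[str, str]:
    """Return (significance, action) for an event using the first matching rule."""
    for rule in RULES:
        if rule.matches(event):
            return rule.significance, rule.action
    return DEFAULT
```

For example, `correlate({"parameter": "disk_used_percent", "value": 85})` falls through the first rule and matches the second, giving a warning that alerts an operator.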

Thorough design of the event detection and alert mechanisms requires the following:

- Business knowledge in relationship to any business processes being managed via Event Management

- Detailed knowledge of the Service Level Requirements of the service being supported by each CI

- Knowledge of who is going to be supporting the CI

- Knowledge of what constitutes normal and abnormal operation of the CI

- Knowledge of the significance of multiple similar events (on the same CI or various similar CIs)

- An understanding of what those supporting the CI need to know to support it effectively

- Information that can help in the diagnosis of problems with the CI

- Familiarity with incident prioritization and categorization codes so that if it is necessary to create an Incident Record, these codes can be provided

- Knowledge of other CIs that may be dependent on the affected CI, or those CIs on which it depends

- Availability of Known Error information from vendors or from previous experience.

4.1.10.4 Identification of Thresholds

Thresholds themselves are not set and managed through Event Management. However, unless these are properly designed and communicated during the instrumentation process, it will be difficult to determine which level of performance is appropriate for each CI.

Also, most thresholds are not constant. They typically consist of a number of related variables. For example, the maximum number of concurrent users before response time slows will vary depending on what other jobs are active on the server. This knowledge is often only gained by experience, which means that Correlation Engines have to be continually tuned and updated through the process of Continual Service Improvement.
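The concurrent-user example can be sketched as a threshold that is computed from a related variable instead of being fixed. The rule used here (each active batch job lowers the user limit by 10% of its base value, down to a floor) is purely an assumption for illustration. In practice the relationship would be learned from experience and tuned over time.

```python
def max_concurrent_users(base_limit: int, active_batch_jobs: int,
                         reduction_percent_per_job: int = 10,
                         floor: int = 10) -> int:
    """Hypothetical rule: each active batch job lowers the limit by a fixed
    percentage of the base limit, never dropping below `floor`."""
    remaining_percent = max(0, 100 - reduction_percent_per_job * active_batch_jobs)
    return max(floor, base_limit * remaining_percent // 100)


def exceeds_threshold(current_users: int, base_limit: int,
                      active_batch_jobs: int) -> bool:
    """True when the current load is above the threshold for present conditions."""
    return current_users > max_concurrent_users(base_limit, active_batch_jobs)
```

With a base limit of 200 users, 150 concurrent users is within the threshold when no batch jobs are running, but exceeds it when three jobs are active, because the limit then drops to 140.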
