
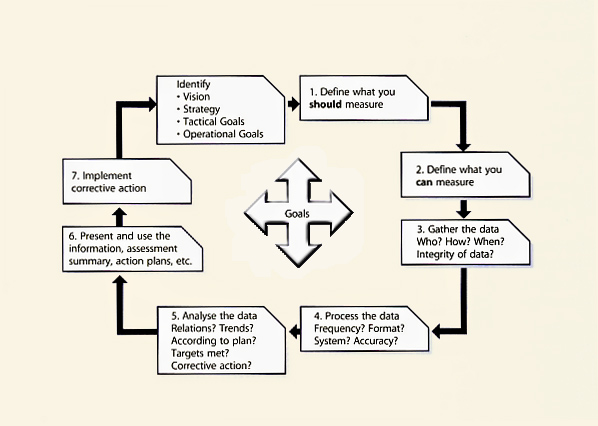

| Figure 4.1 7-Step Improvement Process |

Chapter 3 introduced the 7-Step Improvement Process shown in Figure 4.1. This chapter examines that process in more detail. What do you actually measure and where do you find the information? These are two very important questions and should not be ignored or taken lightly.

Steps 1 and 2 are directly related to the strategic, tactical and operational goals that have been defined for measuring services and service management processes as well as the existing technology and capability to support measuring and CSI activities.

Steps 1 and 2 are iterative during the rest of the activities. Depending on the goals and objectives to support service improvement activities, an organization may have to purchase and install new technology to support the gathering and processing of the data and/or hire staff with the required skill sets.

These two steps are too often ignored because:

When the data is finally presented (Step 6) without going through the rest of the steps, the results appear incorrect or incomplete. People blame each other, the vendor, the tools, anyone but themselves. Step 1 is crucial. A dialogue must take place between IT and the customer. Goals and objectives must be identified in order to properly identify what should be measured.

Based on the goals of the target audience (operational, tactical, or strategic) the service owners need to define what they should measure in a perfect world. To do this:

Identify the measurements that can be provided based on existing tool sets, organizational culture and process maturity. Note there may be a gap in what can be measured vs. what should be measured. Quantify the cost and business risk of this gap to validate any expenditures for tools.

When initially implementing service management processes, don't try to measure everything; rather, be selective about which measures will help to understand the health of a process. Further chapters will discuss the use of CSFs, KPIs and activity metrics. A major mistake many organizations make is trying to do too much in the beginning. Be smart about what you choose to measure.

| Question: Where do you actually find the information? Answer: Talk to the business, the customers and to IT management. Utilize the service catalogue as your starting point as well as the service level requirements of the different customers. This is the place where you start with the end in mind. In a perfect world, what should you measure? What is important to the business? |

Compile a list of what you should measure. This will often be driven by business requirements. Don't try to cover every single eventuality or possible metric in the world.

Make it simple. The number of things you should measure can grow quite rapidly. So too can the number of metrics and measurements.

Identify and link the following items:

| Question: What do you actually measure? Answer: Start by listing the tools you currently have in place. These tools will include service management tools, monitoring tools, reporting tools, investigation tools and others. Compile a list of what each tool can currently measure without any configuration or customization. Stay away from customizing the tools as much as possible; configuring them is acceptable. |

| Question: Where do you actually find the information? Answer: The information is found within each process, procedure and work instruction. The tools are merely a way to collect and provide the data. Look at existing reports and databases. What data is currently being collected and reported on? |

Perform a gap analysis between the two lists. Report this information back to the business, the customers and IT management. It is possible that new tools are required or that configuration or customization is required to be able to measure what is required.
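The gap analysis between the two lists can be illustrated with a small sketch. The measurement names below are hypothetical examples, not taken from any real service catalogue or tool; the point is only that the gap is the set of items that should be measured but cannot yet be measured.

```python
# Hypothetical example data: the measurement names are illustrative only.
should_measure = {
    "incident resolution time",
    "end-to-end service availability",
    "customer satisfaction",
    "change success rate",
}
can_measure = {
    "incident resolution time",
    "server availability",
    "change success rate",
}


def gap_analysis(should, can):
    """Compare the 'should measure' list with the 'can measure' list."""
    gap = sorted(should - can)      # wanted, but not measurable today
    covered = sorted(should & can)  # wanted and already measurable
    unused = sorted(can - should)   # measurable, but not requested
    return gap, covered, unused


gap, covered, unused = gap_analysis(should_measure, can_measure)
print("Gap to report back:", gap)
print("Already covered:", covered)
print("Measured but not requested:", unused)
```

The `gap` list is what would be reported back to the business, the customers and IT management, together with the cost and risk of closing it.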

Inputs:

Quality is the key objective of monitoring for Continual Service Improvement. Monitoring will therefore focus on the effectiveness of a service, process, tool, organization or Configuration Item (CI). The emphasis is not on assuring real-time service performance; rather, it is on identifying where improvements can be made to the existing level of service, or IT performance. Monitoring for CSI will therefore tend to focus on detecting exceptions and resolutions. For example, CSI is less interested in whether an incident was resolved than in whether it was resolved within the agreed time, and whether future incidents can be prevented.
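As a minimal sketch of exception-focused monitoring, the following checks which incidents were resolved outside the agreed time. The four-hour target, the incident identifiers and the record layout are all assumptions made for illustration.

```python
from datetime import timedelta

# Assumed target: a real value would come from the relevant SLA.
AGREED_RESOLUTION = timedelta(hours=4)

# Hypothetical incident records.
incidents = [
    {"id": "INC-1", "resolution_time": timedelta(hours=1)},
    {"id": "INC-2", "resolution_time": timedelta(hours=6)},
    {"id": "INC-3", "resolution_time": timedelta(hours=3, minutes=30)},
    {"id": "INC-4", "resolution_time": timedelta(hours=9)},
]


def breaches(records, target):
    """Return the ids of incidents resolved later than the agreed time."""
    return [r["id"] for r in records if r["resolution_time"] > target]


breached = breaches(incidents, AGREED_RESOLUTION)
within_target_pct = 100 * (len(incidents) - len(breached)) / len(incidents)
print("Exceptions:", breached)
print("Resolved within agreed time:", within_target_pct, "%")
```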

CSI is not only interested in exceptions, though. If a Service Level Agreement is consistently met over time, CSI will also be interested in determining whether that level of performance can be sustained at a lower cost or whether it needs to be upgraded to an even better level of performance. CSI may therefore also need access to regular performance reports.

However, since CSI is unlikely to need, or be able to cope with, the vast quantities of data that are produced by all monitoring activity, it will most likely focus on a specific subset of monitoring at any given time. This could be determined by input from the business or improvements to technology.

When a new service is being designed or an existing one changed, this is a perfect opportunity to ensure that what CSI needs to monitor is designed into the service requirements (see Service Design publication).

This has two main implications:

It is important to remember that there are three types of metrics that an organization will need to collect to support CSI activities as well as other process activities. The types of metrics are:

| Question: What do you actually measure? Answer: You gather whatever data has been identified as both needed and measurable. Please remember that not all data is gathered automatically. A lot of data is entered manually by people. It is important to ensure that policies are in place to drive the right behaviour to ensure that this manual data entry follows the SMART (Specific-Measurable-Achievable-Relevant-Timely) principle. |

As much as possible, you need to standardize the data structure through policies and published standards. For example, how do you enter names in your tools - John Smith; Smith, John or J. Smith? These can be the same or different individuals. Having three different ways of entering the same name slows down trend analysis and severely impedes any CSI initiative.
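One way to enforce such a standard is to normalize names at entry time. The sketch below assumes a 'Last, First' convention, which is an illustrative choice rather than a recommendation from this publication. It also flags entries such as 'J. Smith', which cannot be resolved to a single individual without further information.

```python
def normalize_name(raw):
    """Return a name as 'Last, First' (assumed house standard)."""
    raw = " ".join(raw.split())  # collapse repeated whitespace
    if "," in raw:
        # Already in 'Last, First' order
        last, first = [part.strip() for part in raw.split(",", 1)]
    else:
        # Assume 'First Last' order; the last word is the surname
        first, _, last = raw.rpartition(" ")
    return f"{last}, {first}"


def is_ambiguous(normalized):
    """Flag entries whose first name is only an initial, e.g. 'Smith, J.'."""
    first = normalized.split(", ", 1)[1]
    return len(first.rstrip(".")) == 1


for entry in ["John Smith", "Smith, John", "J. Smith"]:
    name = normalize_name(entry)
    print(entry, "->", name, "(needs review)" if is_ambiguous(name) else "")
```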

| Question: Where do you actually find the information? Answer: IT service management tools, monitoring tools, reporting tools, investigation tools, existing reports and other sources. |

Gathering data is defined as the act of monitoring and data collection. This activity needs to clearly define the following:

The answers will be different for every organization.

Service monitoring allows weak areas to be identified, so that remedial action can be taken (if there is a justifiable Business Case), thus improving future service quality. Service monitoring also can show where customer actions are causing the fault and thus lead to identifying where working efficiency and/or training can be improved.

Service monitoring should also address both internal and external suppliers since their performance must be evaluated and managed as well.


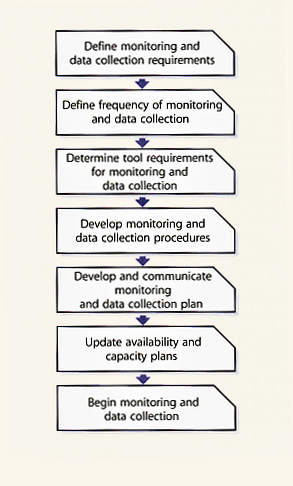

| Figure 4.2 Monitoring and data collection procedures |

Service management monitoring helps determine the health and welfare of service management processes in the following manner:

Monitoring is often associated with automated monitoring of infrastructure components for performance such as availability or capacity, but monitoring should also be used for monitoring staff behaviour such as adherence to process activities, use of authorized tools as well as project schedules and budgets.

Exceptions and alerts need to be considered during the monitoring activity as they can serve as early warning indicators that services are breaking down. Sometimes the exceptions and alerts will come from tools, but they will often come from those who are using the service or service management processes. We don't want to ignore these alerts.

Inputs to gather-the-data activity:

List of what you should measure

Figure 4.2 and Table 4.1 show the common procedures to follow in monitoring.

Outputs from gather-the-data activity:

| Tasks | Procedures |
| Task 1 | |
| Task 2 | |
| Task 3 | |
| Task 4 | |
| Task 5 | |
| Task 6 | |
| Task 7 | |

| Table 4.1 Monitoring and data collection procedure |

It is also important in this activity to look at the data that was collected and ask - does this make any sense?

| Example: An organization that was developing some management information activities asked a consultant to review the data they had collected. The data was for Incident Management and the Service Desk. It was provided in a spreadsheet format, and when the consultant opened the spreadsheet it showed that for the month the organization had opened approximately 42,000 new incident tickets and 65,000 incident tickets were closed on the first contact. It is hard to close more incident tickets than were opened - in other words, the data did not make sense. However, all is not lost. Even if the data did not make any sense, it provides insight into the ability to monitor and gather data, the tools that are used to support monitoring and data gathering, and the procedures for processing the raw data into a report that can be used for analysis. When investigating the example above, it was discovered that the cause was a combination of how data was pulled from the tool plus human error in inputting the data into a spreadsheet. There was no check and balance before the data was actually processed and presented to key people in the organization. |
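A simple automated check before data is processed and presented could catch this kind of inconsistency. The following is a minimal sketch; the function name and the two rules it applies are assumptions for illustration, not a prescribed validation procedure.

```python
def sanity_check(opened, closed_first_contact):
    """Return a list of problems found in monthly incident ticket counts."""
    problems = []
    if opened < 0 or closed_first_contact < 0:
        problems.append("ticket counts cannot be negative")
    if closed_first_contact > opened:
        problems.append(
            "more tickets closed on first contact than were opened"
        )
    return problems


# Figures from the example above
issues = sanity_check(opened=42_000, closed_first_contact=65_000)
if issues:
    print("Do not publish; investigate first:", issues)
```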

| Question: What do you actually do here? Answer: Convert the data in the required format and for the required audience. Follow the trail from metric to KPI to CSF, all the way back to the vision if necessary. See Figure 4.3. |

| Question: Where do you actually find the information? Answer: IT service management tools, monitoring tools, reporting tools, investigation tools, existing reports and other sources. |


Once data is gathered, the next step is to process the data into the required format. Report-generating technologies are typically used at this stage as various amounts of data are condensed into information for use in the analysis activity. The data is also typically put into a format that provides an end-to-end perspective on the overall performance of a service. This activity begins the transformation of raw data into packaged information. Use the information to develop insight into the performance of the service and/or processes.

Process the data into information (i.e. create logical groupings) which provides a better means to analyze the data - the next activity step in CSI.
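As an illustration of creating logical groupings, the sketch below condenses raw ticket records into per-service information. The service names and resolution times are hypothetical, and grouping by service is only one of many possible groupings.

```python
from collections import defaultdict

# Hypothetical raw data: (service, minutes to resolve)
raw_tickets = [
    ("email", 30),
    ("email", 50),
    ("payroll", 120),
    ("email", 40),
    ("payroll", 60),
]


def group_by_service(records):
    """Condense raw records into a count and average per service."""
    grouped = defaultdict(list)
    for service, minutes in records:
        grouped[service].append(minutes)
    return {
        service: {"count": len(values), "avg_minutes": sum(values) / len(values)}
        for service, values in grouped.items()
    }


summary = group_by_service(raw_tickets)
for service, info in summary.items():
    print(service, info)
```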

Inputs to processing-the-data activity:

A flow diagram is nice to look at and it gracefully summarizes the procedure, but it does not contain all the required information. It is important to translate the flow diagram into a form that people can readily understand, with the appropriate level of detail including roles and responsibilities, timeframes, inputs and outputs, and more.

Examples of outputs from procedures:

Data analysis transforms the information into knowledge of the events that are affecting the organization. More skill and experience are required to perform data analysis than data gathering and processing. Verification against goals and objectives is expected during this activity. This verification validates that objectives are being supported and value is being added. It is not sufficient simply to produce graphs of various types; the observations and conclusions must also be documented.

| Question: What do you actually analyze? Answer: Once the data is processed into information, you can then analyze the results, looking for answers to questions such as: |

| Question: Where do you actually find the information? Answer: Here you apply knowledge to your information. Without this, you have nothing more than sets of numbers showing metrics that are meaningless. It is not enough to simply look at this month's figures and accept them without question, even if they meet SLA targets. You should analyze the figures to stay ahead of the game. Without analysis you merely have information. With analysis you have knowledge. If you find anomalies or poor results, then look for ways to improve. |

It is interesting to note the number of job titles for IT professionals that contain the word 'analyst' and even more surprising to discover that few of them actually analyze anything. This step takes time. It requires concentration, knowledge, skills and experience. One of the major assumptions is that the automated processing, reporting or monitoring tool has actually done the analysis. Too often people simply point at a trend and say 'Look, numbers have gone up over the last quarter.' However, key questions need to be asked, such as:

Combining multiple data points on a graph may look nice, but the real question is what it actually means. 'A picture is worth a thousand words' goes the saying. In analyzing the data an accurate question would be 'Which thousand words?' To transform this data into knowledge, compare the information from step 3 against both the requirements from step 1 and what could realistically be measured from step 2.

Be sure to also compare against the clearly defined objectives with measurable targets that were set in the Service Design, Transition and Operations lifecycle stages.

Confirmation needs to be sought that these objectives and the milestones were reached. If not, have improvement initiatives been implemented? If so, then the CSI activities start again with gathering, processing and analyzing data to identify whether the desired improvement in service quality has been achieved. At the completion of each significant stage or milestone, a review should be conducted to ensure the objectives have been met. It is possible here to use the Post-Implementation Review (PIR) from the Change Management process. The PIR will include a review of supporting documentation and the general awareness amongst staff of the refined processes or service. A comparison is required of what has been achieved against the original goals.

During the analysis activity, but after the results are compiled and analysis and trend evaluation have occurred, it is recommended that internal meetings be held within IT to review the results and collectively identify improvement opportunities. It is important to have these internal meetings before you begin presenting and using the information, which is the next activity of Continual Service Improvement. The result is that IT is a key player in determining how the results and any action items are presented to the business.

This puts IT in a better position to formulate a plan of presenting the results and any action items to the business and to senior IT management. Throughout this publication the terms 'service' and 'service management' have been used extensively. IT is too often focused on managing the various systems used by the business, often (but incorrectly) equating service and system. A service is actually made up of systems. Therefore if IT wants to be perceived as a key player, then IT must move from a systems-based organization to a service-based organization. This transition will force the improvement of communication between the different IT silos that exist in many IT organizations.

Performing proper analysis on the data also places the business in a position to make strategic, tactical and operational decisions about whether there is a need for service improvement. Unfortunately, the analysis activity is often not done. Whether it is due to a lack of resources with the right skills and/or simply a lack of time is unclear. What is clear is that without proper analysis, errors will continue to occur and mistakes will continue to be repeated. There will be little improvement.

As an example, a sub-activity of Capacity Management is workload management. This can be viewed as analyzing the data to determine which customers use what resource, how they use the resource, when they use the resource and how this impacts the overall performance of the resource. You will also be able to see if there is a trend in the usage of the resource over a period of time. From an incremental improvement perspective this could lead to some focus on Demand Management, or influencing the behaviour of customers.

Consideration must be given to the skills required to analyze from both a technical viewpoint and from an interpretation viewpoint.

When analyzing data, it is important to seek answers to questions such as:

It is not enough to look only at the results; it is also necessary to look at what led to the results for the current period. If we had a bad month, did an anomaly take place? Is this a demonstrable trend or simply a one-off?

| Example: When one organization started performing trend analysis activities around Incident Management, they discovered that their number of incidents increased for a one-month period every three months. When they investigated the cause, they found it was tied directly to a quarterly release of an application change. This provided statistical data for them to review the effectiveness of their Change and Release Management processes as well as understand the impact each release would have on the Service Desk through increased call volumes. The Service Desk was also able to begin identifying key skill sets needed to support this specific application. |
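A pattern like this can be surfaced with a very simple check before any deeper investigation. The sketch below uses invented monthly incident counts and an assumed threshold of 1.3 times the median; both are illustrative choices, and a real analysis would need to select a baseline and threshold suited to its own data.

```python
from statistics import median

# Hypothetical incident counts for twelve consecutive months
monthly_incidents = [410, 395, 640, 405, 420, 655, 400, 415, 630, 398, 402, 661]


def spike_months(counts, factor=1.3):
    """Return 1-based month numbers whose count exceeds factor * median."""
    baseline = median(counts)
    return [i + 1 for i, count in enumerate(counts) if count > factor * baseline]


spikes = spike_months(monthly_incidents)
# Equal gaps between spikes suggest a recurring cause, such as a release cycle
gaps = [later - earlier for earlier, later in zip(spikes, spikes[1:])]
print("Spike months:", spikes)
print("Months between spikes:", gaps)
```

The check only shows that spikes recur at a regular interval. Tying them to a quarterly release, as in the example, still requires investigation.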

A trend is an indicator that more analysis is needed to understand what is causing it. When a trend goes up or down, it is a signal that further investigation is needed to determine if it is positive or negative.

| Another example: A Change Manager communicates that the Change Management process is doing well because the volume of requests for changes has steadily decreased. Is this positive or negative? If Problem Management is working well, it could be positive, as recurring incidents are removed and therefore fewer changes are required because the infrastructure is more stable. However, if users have stopped submitting requests for changes because the process is not meeting expectations, the trend is negative. |

Without analysis the data is merely information. With analysis comes improvement opportunities. Throughout CSI, assessment should identify whether targets were achieved and, if so, whether new targets (and therefore new KPIs) need to be defined. If targets were achieved but the perception has not improved, then new targets may need to be set and new measures put in place to ensure that these new targets are being met.

When analyzing the results from process metrics, keep in mind that a process will only be as efficient as its limiting bottleneck activity. So if the analysis shows that a process activity is not efficient and continually creates a bottleneck, then this would be a logical place to begin looking for a process improvement opportunity.
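The bottleneck idea can be sketched as follows: if each activity in a sequential process can handle only so many items per day, the process as a whole can handle no more than its slowest activity. The activity names and capacities are hypothetical.

```python
# Hypothetical capacities, in items each activity can handle per day
activity_capacity = {
    "log": 120,
    "categorize": 90,
    "diagnose": 35,
    "resolve": 60,
    "close": 150,
}


def find_bottleneck(capacities):
    """Return the activity with the lowest capacity and that capacity."""
    name = min(capacities, key=capacities.get)
    return name, capacities[name]


bottleneck, process_capacity = find_bottleneck(activity_capacity)
print("Bottleneck activity:", bottleneck)
print("Maximum process throughput per day:", process_capacity)
```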

| Question: What do you actually measure? Answer: There are no measurements in this step. |

| Question: Where do you actually find the information? Answer: From all previous steps. |

Creating reports and presenting information is an activity that is done in most organizations to some extent or another; however, it is often not done well. For many organizations this activity is simply taking the gathered raw data (often straight from the tool) and reporting this same data to everyone. There has been no processing and analysis of the data.

The other issue often associated with presenting and using information is that it is overdone. Managers at all levels are bombarded with too many e-mails, too many meetings, too many reports. Too often they are copied and presented to as part of an I-am-covering-my-you-know-what exercise. The reality is that the managers often don't need this information or, at the very least, not in that format. There is often a lack of clarity about what role the manager has in making decisions and providing guidance on improvement programmes.

As we have discussed, Continual Service Improvement is an ongoing activity of monitoring and gathering data, processing the data into logical groupings, analyzing the data for meeting targets, identifying trends and identifying improvement opportunities. There is no value in all the work done to this point if we don't do a good job of presenting our findings and then using those findings to make improvement decisions.

'Begin with the end in mind' is habit number 2 in Stephen Covey's publication The 7 Habits of Highly Effective People (Simon & Schuster, 1989). Even though the publication is about personal leadership, the habit holds true with presenting and using information. In addition to understanding the target audience, it is also important to understand the report's purpose. If the purpose and value cannot be articulated, then it is important to question if it is needed at all.

There are usually three distinct audiences:

Often there is a gap between what IT reports and what is of interest to the business. IT is famous for reporting availability in percentages such as 99.85% available. In most cases this is not calculated from an end-to-end perspective but only mainframe availability or application availability and often doesn't take into consideration LAN/WAN, server or desktop downtime. In reality, most people in IT don't know the difference between 99.95% and 99.99% availability let alone the business. Yet reports continue to show availability achievements in percentages. What the business really wants to understand is the number of outages that occurred and the duration of the outages with analysis describing the impact on the business processes, in essence, unavailability expressed in a commonly understood measure - time.
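To make the point concrete, an availability percentage can be translated into time lost. The sketch below assumes a 30-day reporting period with service expected around the clock; both are assumptions, and real agreed service hours would change the result.

```python
def downtime_minutes(availability_pct, period_days=30):
    """Convert an availability percentage into minutes of downtime.

    Assumes the service is expected to be available 24 hours a day
    for the whole period.
    """
    total_minutes = period_days * 24 * 60
    return total_minutes * (100 - availability_pct) / 100


for pct in (99.85, 99.95, 99.99):
    print(f"{pct}% available = {downtime_minutes(pct):.1f} minutes of downtime")
```

Under these assumptions, the difference between 99.95% and 99.99% is about 21.6 minutes of downtime in the month versus about 4.3 minutes, which is far easier for the business to interpret than the percentages themselves.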

Now more than ever, IT must invest the time to understand specific business goals and translate IT metrics to reflect an impact against these goals. Businesses invest in tools and services that affect productivity, and support should be one of those services. The major challenge, and one that can be met, is to effectively communicate the business benefits of a well-run IT support group. The starting point is a new perspective on goals, measures, and reporting, and how IT actions affect business results. You will then be prepared to answer the question: 'How does IT help to generate value for your company?'

Although most reports tend to concentrate on areas where things are not going as well as hoped for, do not forget to report on the good news as well. A report showing improvement trends is IT services' best marketing vehicle. It is vitally important that reports show whether CSI has actually improved the overall service provision and if it has not, the actions taken to rectify the situation.

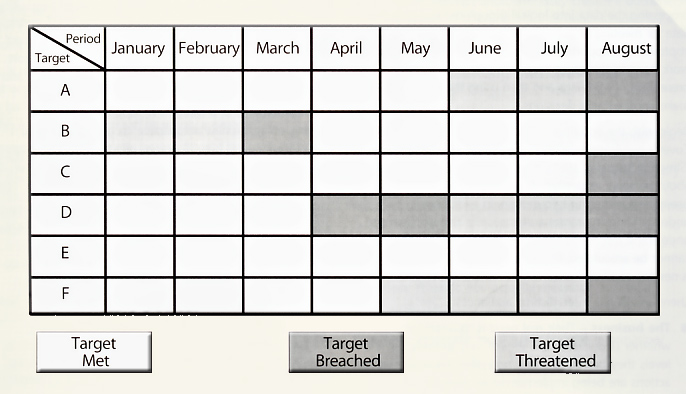

Figure 4.5 is an example of an SLA monitoring chart that provides a visual representation of an organization's ability to meet defined targets over a period of months.


| Figure 4.5 Service level achievement chart |

Some of the common problems associated with the presenting and reporting activity:

This is why many organizations are moving to a Balanced Scorecard or IT scorecard concept. This concept can start at the business level, then the IT level, and then functional groups and/or services within IT.

The resources required to produce, verify and distribute reports should not be under-estimated. Even with automation, this can be a time-consuming activity.

| Example: An organization hired an expensive consulting firm to assess the maturity of the processes against the ITIL framework. The report from the consulting organization had the following observation and recommendation about the Incident Management process: 'The help desk is not doing Incident Management the way ITIL does. Our recommendation is that you must implement Incident Management.' The reaction from the customer was simple. They fired the consulting organization. |

What would happen to you if you presented a similar observation and recommendation to your CIO?

CSI identifies many opportunities for improvement; however, organizations cannot afford to implement all of them. Based on goals, objectives and types of service breaches, an organization needs to prioritize improvement activities. Improvement initiatives can also be externally driven by regulatory requirements, changes in competition, or even political decisions.

If organizations were implementing corrective action according to CSI, there would be no need for this publication. Corrective action is often done in reaction to a single event that caused a (severe) outage to part or all of the organization. Other times, the squeaky wheel will get noticed and specific corrective action will be implemented in no relation to the priorities of the organization, thus taking valuable resources away from real emergencies. This is common practice but obviously not best practice.

After a decision to improve a service and/or service management process is made, then the service lifecycle continues. A new Service Strategy may be defined, Service Design builds the changes, Service Transition implements the changes into production and then Service Operation manages the day-to-day operations of the service and/or service management processes. Keep in mind that CSI activities continue through each phase of the service lifecycle.

Each service lifecycle phase requires resources to build or modify the services and/or service management processes, potential new technology or modifications to existing technology, potential changes to KPIs and other metrics and possibly even new or modified OLAs/UCs to support SLAs. Communication, training and documentation are required to transition a new/improved service, tool or service management process into production.

Often steps are forgotten or are taken for granted or someone assumes that someone else has completed the step. This indicates a breakdown in the process and a lack of understanding of roles and responsibilities. The harsh reality is that some steps are overdone while others are incomplete or overlooked.

| Example of corrective action being implemented: A financial organization with a strategically important website continually failed to meet its operational targets, especially with regard to the quality of service delivered by the site. The prime reason for this was their lack of focus on the monitoring of operational events, service availability and response. This situation was allowed to develop until senior business managers demanded action from the senior IT management. There were major repercussions, and reviews were undertaken to determine the underlying cause. After considerable pain and disruption, an operations group was identified to monitor this particular service. A part of the requirement was the establishment of weekly internal reviews and weekly reports on operational performance. Operational events were immediately investigated whenever they occurred and were individually reviewed after resolution. An improvement team was established, with representation from all areas, to implement the recommendations from the reviews and the feedback from the monitoring group. This eventually resulted in considerable improvement in the quality of service delivered to the business and its customers. |

There are various levels or orders of management in an organization. Individuals need to know where to focus their activities. Line managers need to show overall performance and improvement. Directors need to show that quality and performance targets are being met, while risk is being minimized. Overall, senior management need to know what is going on so that they can make informed choices and exercise judgement. Each order has its own perspective. Understanding these perspectives is where maximum value of information is leveraged.

Understanding the order your intended audience occupies and their drivers helps you present the issues and benefits of your process. At the highest level of the organization are the strategic thinkers. Reports need to be short, quick to read and aligned to their drivers. Discussions about risk avoidance, protecting the image or brand of the organization, profitability and cost savings are compelling reasons to support your improvement efforts.

The second order consists of vice presidents and directors. Reports can be more detailed, but need to summarize findings over time. Identifying how processes support the business objectives, early warning around issues that place the business at risk, and alignment to existing measurement frameworks that they use are strong methods you can use to sell the process benefits to them.

The third order consists of managers and high level supervisors. Compliance to stated objectives, overall team and process performance, insight into resource constraints and continual improvement initiatives are their drivers. Measurements and reports need to market how these are being supported by the process outputs.

Lastly at the fourth level of the hierarchy are the staff members and team leaders. At a personal level, the personal benefits need to be emphasized. Therefore metrics that show their individual performance, provide recognition of their skills (and gaps in skills) and identify training opportunities are essential in getting these people to participate in the processes willingly.

CSI is often viewed as an ad hoc activity within IT services. The activity usually kicks in when someone in IT management yells loud enough. This is not the right way to address CSI. Often these reactionary events are not even providing continual improvement, but simply stopping a single failure from occurring again.

CSI takes a commitment from everyone in IT working throughout the service lifecycle to be successful at improving services and service management processes. It requires ongoing attention, a well-thought-out plan, consistent attention to monitoring, analyzing and reporting results with an eye toward improvement. Improvements can be incremental in nature but also require a huge commitment to implement a new service or meet new business requirements.

This section spelled out the seven steps of CSI activities. All seven steps need attention. There is no reward for taking a short cut or for not addressing each step in sequence. If any step is missed, there is a risk of not being efficient and effective in meeting the goals of CSI.

IT services must ensure that proper staffing and tools are identified and implemented to support CSI activities. It is also important to understand the difference between what should be measured and what can be measured. Start small - don't expect to measure everything at once. Understand the organizational capability to gather data and process the data. Be sure to spend time analyzing data as this is where the real value comes in. Without analysis of the data, there is no real opportunity to truly improve services or service management processes. Think through the strategy and plan for reporting and using the data. Reporting is partly a marketing activity. It is important that IT focus on the value added to the organization as well as reporting on issues and achievements. In order for steps 5 to 7 to be carried out correctly, it is imperative that the target audience is considered when packaging the information.

An organization can find improvement opportunities throughout the entire service lifecycle. An IT organization does not need to wait until a service or service management process is transitioned into the operations area to begin identifying improvement opportunities.

Service Design monitors and gathers data associated with creating and modifying services and service management processes (the design efforts). This part of the service lifecycle also measures the effectiveness of, and the ability to measure, the CSFs and KPIs that were defined through gathering business requirements. Service Design also defines what should be measured. This would include monitoring project schedules, progress against project milestones, and project results against goals and objectives.

Service Transition develops the monitoring procedures and criteria to be used during and after implementation. Service Transition monitors and gathers data on the actual release into production of services and service management processes. It is the responsibility of Service Transition to ensure that the services and service management processes are embedded in a way that can be managed and maintained according to the strategies and design efforts.

Service Operation is responsible for the actual monitoring of services in the production environment. Service Operation plays a large part in the processing activity. Service Operation provides input into what can be measured and processed into logical groupings as well as doing the actual processing of the data. Service Operation would also be responsible for taking the component data and processing it in the format to provide a better end-to-end perspective of the service achievements.

CSI receives the collected data as input to the remainder of the CSI activities.

|

| Figure 4.7 Lifecycle integration diagram |

SLM plays a key role in the data gathering activity as SLM is responsible for not only defining business requirements but also IT's capabilities to achieve them.

Availability and Capacity Management

Incident Management and Service Desk

Security Management

Security Management contributes to monitoring and data collection in the following manner:

Financial Management

Financial Management is responsible for monitoring and collecting data associated with the actual expenditures vs. budget and is able to provide input on questions such as: are costing or revenue targets on track? Financial Management should also monitor the ongoing cost per service etc.

In addition Financial Management will provide the necessary templates to assist CSI to create the budget and expenditure reports for the various improvement initiatives as well as providing the means to compute the ROI of the improvements.
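As a rough illustration of the ROI computation mentioned above, the sketch below uses the common definition ROI = (benefit - cost) / cost, expressed as a percentage. The function name and the figures are hypothetical and are not taken from any Financial Management template.

```python
def roi_percent(benefit: float, cost: float) -> float:
    """Return ROI as a percentage, using (benefit - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return 100.0 * (benefit - cost) / cost


# Hypothetical improvement initiative: costs 40,000 and returns 50,000.
print(roi_percent(benefit=50_000, cost=40_000))  # 25.0
```

A negative result indicates that the initiative cost more than the benefit it returned over the period considered.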

Role Of Other Processes In Measuring The Data

Service Level Management - SLM supports the CSI data processing activity in the following manner:

Availability and Capacity Management

Incident Management and Service Desk

Analyzing the Data Throughout the Service Lifecycle

Service Strategy analyzes results associated with implemented strategies, policies and standards. This would include identifying any trends, comparing results against goals and also identifying any improvement opportunities.

Service Design analyzes current results of design and project activities. Trends are also noted with results compared against the design goals. Service Design also identifies improvement opportunities and analyzes the effectiveness and ability to measure CSFs and KPIs that were defined when gathering business requirements.

Service Operation analyzes current results as well as trends over a period of time. Service Operation also identifies both incremental and large-scale improvement opportunities, providing input into what can be measured and processed into logical groupings. This area also performs the actual data processing. Service Operation would also be responsible for taking the component data and processing it in the format to provide a better end-to-end perspective of service achievements.

If there is a CSI functional group within an organization, this group can be the single point for combining all analysis, trend data and comparison of results to targets. This group could then review all proposed improvement opportunities and help prioritize the opportunities and finally make a consolidated recommendation to senior management. For smaller organizations, this may fall to an individual or smaller group acting as a coordinating point and owning CSI. This is a key point. Too often data is gathered in the various technical domains ... never to be heard from again. Designating a CSI group provides a single place in the organization for all the data to reside and be analyzed.

Role Of Other Processes In Analyzing the Data

Service Level Management

SLM supports the CSI data processing activity in the following manner:

Incident Management and Service Desk

Problem Management

Problem Management plays a key role in the analysis activity as this process supports all the other processes with regards to trend identification and performing root cause analysis. Problem Management is usually associated with reducing incidents, but a good Problem Management process is also involved in helping define process-related problems as well as those associated with services.

Overall, Problem Management seeks to:

Security Management

Security Management as a function relies on the activities of all other processes to help determine the cause of security-related incidents and problems. The Security Management function will submit requests for changes to implement corrections or for new updates to, say, the antivirus software. Other processes such as Availability (confidentiality, integrity, availability and recoverability), Capacity (capacity and performance) and Service Continuity Management (planning how to handle a crisis) will lend a hand in longer-term planning.

In turn Security Management will play a key role in assisting CSI regarding all security aspects of improvement initiatives or for security-related improvements.

Presenting and using the information throughout the service lifecycle

Role of other processes in presenting and using the information

Service Level Management - SLM presents information to the business and discusses the service achievements for the current time period as well as any longer trends that were identified. These discussions should also include information about what led to the results and any incremental or fine-tuning actions required.

Overall, SLM:

Availability and Capacity Management

Incident Management and Service Desk

Problem Management

Role of other Processes in Implementing Corrective Action

Change Management - When CSI determines that an improvement to a service is warranted, an RFC must be submitted to Change Management. In turn Change Management treats the RFC like any other RFC. The RFC is prioritized and categorized according to policies and procedures defined in the Change Management process. Release Management, as a part of Service Transition, is responsible for moving this change to the production environment. Once the change is implemented, CSI is part of the PIR to assess the success or failure of the change.

Representatives from CSI should be part of the CAB and the CAB/EC. Changes have an effect on service provision and may also affect other CSI initiatives. As part of the CAB and CAB/EC, CSI is in a better position to provide feedback and react to upcoming changes.

Service Level Management - The SLM process often generates a good starting point for a service improvement plan (SIP) - and the service review process may drive this. Where an underlying difficulty that is adversely impacting service quality is identified, SLM must, in conjunction with Problem Management and Availability Management, instigate a SIP to identify and implement whatever actions are necessary to overcome the difficulties and restore service quality. SIP initiatives may also focus on such issues as training, system testing and documentation. In these cases, the relevant people need to be involved and adequate feedback given to make improvements for the future. At any time, a number of separate initiatives that form part of the SIP may be running in parallel to address difficulties with a number of services.

Some organizations have established an up-front annual budget held by SLM from which SIP initiatives can be funded.

If an organization is outsourcing Service Delivery to a third party, the issue of service improvement should be discussed at the outset and covered (and budgeted for) in the contract. Otherwise there is no incentive during the lifetime of the contract for the supplier to improve service targets.

There may be incremental improvement or large-scale improvement activities within each stage of the service lifecycle. As already mentioned, one of the activities IT management have to address is prioritization of service improvement opportunities.

In general, a metric is a scale of measurement defined in terms of a standard, i.e. in terms of a well-defined unit. The quantification of an event through the process of measurement relies on the existence of an explicit or implicit metric, which is the standard to which measurements are referenced.

Metrics are a system of parameters or ways of quantitative assessment of a process that is to be measured, along with the processes to carry out such measurement. Metrics define what is to be measured. Metrics are usually specialized by the subject area, in which case they are valid only within a certain domain and cannot be directly benchmarked or interpreted outside it. Generic metrics, however, can be aggregated across subject areas or business units of an enterprise.

Metrics are used in several business models, including CMMI. They are also used in Knowledge Management (KM). These measurements or metrics can be used to track trends, productivity, resources and much more. Typically, the metrics tracked are KPIs.

How many CSFs and KPIs?

The opinions on this are varied. Some recommend that no more than two to three KPIs are defined per CSF at any given time and that a service or process has no more than two to three CSFs associated with it at any given time, while others recommend upwards of four to five. This may not sound like much, but when considering the number of services and processes, or when using the Balanced Scorecard approach, the upper limit can be staggering!
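To make the scale concrete, the following sketch multiplies out the numbers. The count of 30 services and processes is a hypothetical figure chosen only for illustration, and the function name is likewise an assumption.

```python
def total_kpis(items: int, csfs_per_item: int, kpis_per_csf: int) -> int:
    """Total KPIs to monitor across all services and processes."""
    return items * csfs_per_item * kpis_per_csf


# Hypothetical organization with 30 services and processes.
print(total_kpis(30, 3, 3))  # 270 with three CSFs and three KPIs each
print(total_kpis(30, 5, 5))  # 750 with five CSFs and five KPIs each
```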

It is recommended that in the early stages of a CSI programme only two to three KPIs for each CSF are defined, monitored and reported on. As the maturity of a service and its service management processes increases, additional KPIs can be added. Based on what is important to the business and IT management, the KPIs may change over a period of time. Also keep in mind that implementing a service management process will often change the KPIs of other processes. As an example, increasing first-contact resolution is a common KPI for Incident Management. This is a good KPI to begin with, but when you implement Problem Management this should change. One of Problem Management's objectives is to reduce the number of recurring incidents. Because recurring incidents are often the ones that can be resolved at first contact, reducing them will also reduce the number of first-contact resolutions. In this case a reduction in first-contact resolution is a positive trend.
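The effect described above can be illustrated with a small calculation. The incident counts are hypothetical, and the sketch assumes that all 200 recurring incidents removed by Problem Management had previously been resolved at first contact.

```python
def first_contact_resolution_rate(resolved_first_contact: int, total_incidents: int) -> float:
    """Percentage of incidents resolved at first contact."""
    if total_incidents <= 0:
        raise ValueError("total_incidents must be positive")
    return 100.0 * resolved_first_contact / total_incidents


# Before Problem Management: 1,000 incidents, 700 resolved at first contact.
before = first_contact_resolution_rate(700, 1000)

# After: 200 recurring incidents no longer occur (assumed to have been
# first-contact resolutions), leaving 800 incidents and 500 such resolutions.
after = first_contact_resolution_rate(500, 800)

print(before, after)  # 70.0 62.5
```

The rate falls from 70 percent to 62.5 percent even though the Service Desk handles fewer incidents overall, which is why the KPI needs to be reinterpreted rather than read as a decline in performance.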

The next step is to identify the metrics and measurements required to compute the KPI. There are two basic kinds of KPI, qualitative and quantitative.

| Here is a qualitative example: | |

| CSF: | Improving IT service quality |

| KPI: | 10 percent increase in customer satisfaction rating for handling incidents over the next 6 months. |

| Metrics required: |

|

| Measurements: |

|

| Here is a quantitative example: | |

| CSF: | Reducing IT costs |

| KPI: | 10 percent reduction in the costs of handling printer incidents. |

| Metrics required: |

|

| Measurements: |

|
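Both example KPIs above are expressed as a percentage change against a baseline. A minimal sketch of how such a KPI could be checked follows. The function names and the baseline and current figures are hypothetical, and the sign convention (negative target for a reduction, positive for an increase) is an assumption made for this example.

```python
def percent_change(baseline: float, current: float) -> float:
    """Percentage change of current relative to baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (current - baseline) / baseline


def kpi_met(baseline: float, current: float, target_percent: float) -> bool:
    """Check a percentage-change KPI.

    A negative target (e.g. -10) means "reduce by at least 10 percent";
    a positive target (e.g. 10) means "increase by at least 10 percent".
    """
    change = percent_change(baseline, current)
    if target_percent < 0:
        return change <= target_percent
    return change >= target_percent


# Hypothetical cost of handling printer incidents: 20,000 down to 17,500.
print(percent_change(20_000, 17_500), kpi_met(20_000, 17_500, -10))  # -12.5 True

# Hypothetical customer satisfaction rating: 8.0 up to 8.5.
print(percent_change(8.0, 8.5), kpi_met(8.0, 8.5, 10))  # 6.25 False
```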

An important aspect to consider is whether a KPI is fit for use. Key questions are:

To become acquainted with the possibilities and limitations of your measurement framework, critically review your performance indicators with the above questions in mind before you implement them.

Tension Metrics

The effort from any support team is a balancing act of three elements: resources, features and the schedule.

The delivered product or service therefore represents a balanced trade-off between these three elements. Tension metrics can help create that balance by preventing teams from focusing on just one element, for example on delivering the product or service on time. If an initiative is driven primarily towards satisfying a business driver of on-time delivery to the exclusion of other factors, the manager will achieve this aim by flexing the resources and service features in order to meet the delivery schedule. This unbalanced focus will therefore lead either to budget increases or to lower product quality. Tension metrics help create a delicate balance between shared goals and delivering a product or service according to business requirements within time and budget. Tension metrics do not, however, conflict with shared goals and values, but rather prevent teams from taking shortcuts and shirking their assignments. Tension metrics can therefore be seen as a tool to create shared responsibilities between team members with different roles in the service lifecycle.

Goals and Metrics

Each phase of the service lifecycle requires very specific contributions from the key roles identified in Service Design, Service Transition and Service Operation, each of which has very specific goals to meet. Ultimately, the quality of the service will be determined by how well each role meets its goals, and by how well those sometimes conflicting goals are managed along the way. That makes it crucial that organizations find some way of measuring performance - by applying a set of metrics to each goal.

Breaking down goals and metrics

It is really outside the scope of this publication to dig too deeply into human resources management, and besides, there is no shortage of literature already available on the subject. However, there are some specific things that can be said about best practices for goals and metrics as they apply to managing services in their lifecycle.

Many IT service organizations measure their IT professionals on an abstract and high-level basis. During appraisal and counselling, most managers discuss such things as 'taking part in one or more projects/performing activities of a certain kind', or 'fulfilling certain roles in projects/activities' and 'following certain courses'. Although accomplishing such goals might be important for the professional growth of an individual, it does not facilitate the service lifecycle or any specific process in it. In reality, most IT service organizations do not use more detailed performance measures that are in line with key business drivers, because it is difficult to do, and do correctly.

But there is a way. In the design phase of a service, key business drivers were translated into service level requirements (SLRs) and operations level requirements, the latter consisting of process, skills and technology requirements. What this constitutes is a translation from a business requirement into requirements for IT services and IT components. There is also the question of the strategic position of IT. In essence, the question is whether IT is an enabler or a cost centre, the answer to which determines the requirements for the IT services and IT components. The answer also determines how the processes in the service lifecycle are executed, and how the people in the organization should behave. If IT is a cost centre, services might be developed to be used centrally in order to reduce total cost of ownership (TCO). Services will have those characteristics that reduce TCO throughout the lifecycle. On the other hand, if IT is an enabler, services will be designed to flexibly adjust to changing business requirements and meet early time-to-market objectives.

Either way, the important point is that those requirements for IT services and IT components would determine how processes in the lifecycle are measured and managed, and thus how the performance and growth of professionals should be measured.

Best practice shows that goals and metrics can be classified into three categories: financial metrics, learning and growth metrics, and organizational or process effectiveness metrics. An example of financial metrics might be the expenses and total percentage of hours spent on projects or maintenance, while an example of learning and growth might be the percentage of education pursued in a target skill area, certification in a professional area, and contribution to Knowledge Management. These metrics will not be discussed in this publication.

The last type of metrics, organizational or process effectiveness metrics, can be further broken down into product quality metrics and process quality metrics. Product quality metrics are the metrics supporting the contribution to the delivery of quality products. Examples of product quality metrics are shown in the following table. Process quality metrics are the quality metrics related to efficient and effective process management.

Using Organizational Metrics

To be effective, measurements and metrics should be woven through the complete organization, touching the strategic as well as the tactical level. To successfully support the key business drivers, the IT services manager needs to know what and how well each part of the organization contributes to the final success.

It is also important, when defining measurements for goals that support the IT services strategy, to remember that measurements must focus on results and not on efforts. Focus on the organizational output and try to make clear what the contribution is. Each stage in the service lifecycle has its processes and contribution to the service. Each stage of the lifecycle also has its roles, which contribute to the development or management of the service. Based on the process goals and the quality attributes of the service, goals and metrics can be defined for each role in the processes of the lifecycle.

| Measure | Metric | Quality goal | Lower limit | Upper limit |
| --- | --- | --- | --- | --- |
| Schedule | % variation against revised plan | Within 7.5% of estimate | Not to be less than 7.5% of estimate | Not to exceed 7.5% of estimate |
| Effort | % variation against revised plan | Within 10% of estimate | Not to be less than 10% of estimate | Not to exceed 10% of estimate |
| Cost | % variation against revised plan | Within 10% of estimate | Not to be less than 10% of estimate | Not to exceed 10% of estimate |
| Defects | % variation against planned defect | Within 10% of estimate | Not to be less than 10% of estimate | Not to exceed 10% of estimate |
| Productivity | % variation against productivity goal | Within 10% of estimate | Not to be less than 10% of estimate | Not to exceed 10% of estimate |
| Customer satisfaction | Customer satisfaction survey result | Greater than 8.9 on the range of 1 to 10 | Not to be less than 8.9 on the range of 1 to 10 | |

| Table 4.3 Examples of service quality metrics | ||||
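The percentage-variation rows in Table 4.3 can be checked mechanically. The sketch below assumes that the lower and upper limits are read as a symmetric tolerance around the planned value (for example, between 7.5% below and 7.5% above the estimate for schedule). The function names and the planned and actual figures are hypothetical.

```python
def percent_variation(planned: float, actual: float) -> float:
    """Percentage variation of the actual value against the planned value."""
    if planned == 0:
        raise ValueError("planned must be non-zero")
    return 100.0 * (actual - planned) / planned


def within_limits(planned: float, actual: float, tolerance_percent: float) -> bool:
    """True if the actual value lies within +/- tolerance_percent of the plan."""
    return abs(percent_variation(planned, actual)) <= tolerance_percent


# Schedule: planned 40 days, actual 44 days, tolerance 7.5% (from Table 4.3).
print(percent_variation(40, 44), within_limits(40, 44, 7.5))  # 10.0 False

# Cost: planned 100,000, actual 108,000, tolerance 10% (from Table 4.3).
print(percent_variation(100_000, 108_000), within_limits(100_000, 108_000, 10))  # 8.0 True
```

The customer satisfaction row is a simple threshold rather than a variation, so it would be checked with a direct comparison against 8.9 instead.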

|

|