Service Transition

4. Service Transition Processes

4.5 Service Validation And Testing

The underlying concept to which Service Testing and Validation contributes is quality assurance - establishing that the Service Design and release will deliver a new or changed service or service offering that is fit for purpose and fit for use. Testing is a vital area within Service Management and has often been the unseen underlying cause of what was taken to be inefficient Service Management processes. If services are not tested sufficiently then their introduction into the operational environment will bring a rise in:

- Incidents, since failures in service elements and mismatches between what was wanted and what was delivered impact on business support

- Service desk calls for clarification, since services that are not functioning as intended are inherently less intuitive, causing a higher support requirement

- Problems and errors that are harder to diagnose in the live environment

- Costs, since errors are more expensive to fix in production than if found in testing

- Services that are not used effectively by the users to deliver the desired value.

4.5.1 Purpose, Goal And Objectives

The purpose of the Service Validation and Testing process is to:

- Plan and implement a structured validation and test process that provides objective evidence that the new or changed service will support the customer's business and stakeholder requirements, including the agreed service levels

- Quality assure a release, its constituent service components, the resultant service and service capability delivered by a release

- Identify, assess and address issues, errors and risks throughout Service Transition.

The goal of Service Validation and Testing is to assure that a service will provide value to customers and their business.

The objectives of Service Validation and Testing are to:

- Provide confidence that a release will create a new or changed service or service offerings that deliver the expected outcomes and value for the customers within the projected costs, capacity and constraints

- Validate that a service is 'fit for purpose' - it will deliver the required performance with desired constraints removed

- Assure a service is 'fit for use' - it meets certain specifications under the specified terms and conditions of use

- Confirm that the customer and stakeholder requirements for the new or changed service are correctly defined and remedy any errors or variances early in the service lifecycle as this is considerably cheaper than fixing errors in production.

4.5.2 Scope

The service provider takes responsibility for delivering, operating and/or maintaining customer or service assets at specified levels of warranty, under a service agreement. Service Validation and Testing can be applied throughout the service lifecycle to quality assure any aspect of a service and the service provider's capability, resources and capacity to deliver a service and/or service release successfully. In order to validate and test an end-to-end service, the interfaces to suppliers, customers and partners are important. Service provider interface definitions define the boundaries of the service to be tested, e.g. process interfaces and organizational interfaces.

Testing is equally applicable to in-house or developed services, hardware, software or knowledge-based services. It includes the testing of new or changed services or service components and examines the behaviour of these in the target business unit, service unit, deployment group or environment. This environment could have aspects outside the control of the service provider, e.g. public networks, user skill levels or customer assets.

Testing directly supports the release and deployment process by ensuring that appropriate levels of testing are performed during the release, build and deployment activities. It evaluates the detailed service models to ensure that they are fit for purpose and fit for use before being authorized to enter Service Operations, through the service catalogue. The output from testing is used by the evaluation process to provide the information on whether the service is independently judged to be delivering the service performance with an acceptable risk profile.

4.5.3 Value To Business

Service failures can harm the service provider's business and the customer's assets and result in outcomes such as loss of reputation, loss of money, loss of time, injury and death. The key value to the business and customers from Service Testing and Validation is in terms of the established degree of confidence that a new or changed service will deliver the value and outcomes required of it and understanding the risks.

Successful testing depends on all parties understanding that it cannot give, and indeed should not give, any guarantees, but provides a measured degree of confidence. The required degree of confidence varies with the customer's business requirements and the pressures on the organization.

4.5.4 Policies, Principles And Basic Concepts

4.5.4.1 Inputs From Service Design

A service is defined by a service package that comprises one or more service level packages (SLPs) and re-usable components, many of which themselves are services, e.g. supporting services. The service package defines the service utilities and warranties that are delivered through the correct functioning of the particular set of identified service assets. An SLP provides a definitive level of utility or warranty from the perspective of outcomes, assets and patterns of business activity (PBA) of customers. It is therefore a key input to test planning and design.

The design of a service is related to the context in which a service will be used (the categories of customer asset). The attributes of a service characterize the form and function of the service from a utilization perspective.

These attributes should be traceable to the predicted business outcomes that provide the utility from the service. Some attributes are more important than others for different sets of users and customers, e.g. basic, performance and excitement attributes. A well-designed service provides a combination of these to deliver an appropriate level of utility for the customer.

|

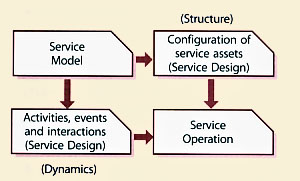

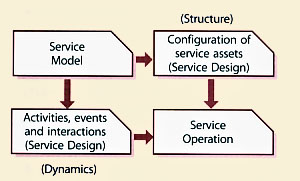

Figure 4.26 Service models describe the structure

and dynamicsof a service |

The Service Design Package defines the agreed requirements of the service, expressed in terms of the service model and Service Operations plan that provide key input to test planning and design. Service models are described further in the Service Strategy publication.

The service model (Figure 4.26) describes the structure and dynamics of a service that will be delivered by Service Operations, through the Service Operations plan. Service Transition evaluates these during the validation and test stages.

Structure is defined in terms of particular core and supporting services and the service assets needed and the patterns in which they are configured. As the new or changed service is designed, developed and built, the service assets are tested and verified against the requirements and design specifications: is the service asset built correctly?

For example, the design for managed storage services must have input on how customer assets such as business applications utilize the storage, the way in which storage adds value to the applications, and what costs and risks the customer would like to avoid. The information on risks is of particular importance to service testing as this will influence the test coverage and prioritization.

|

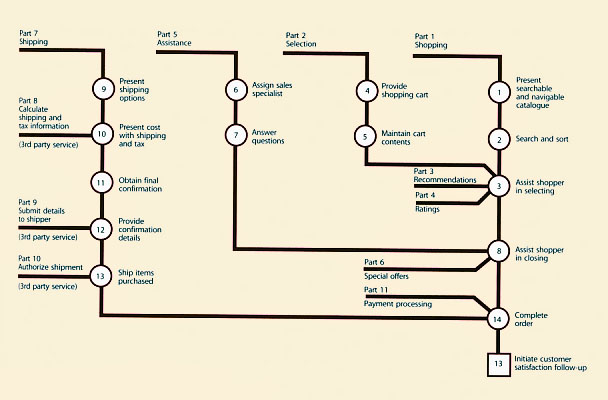

| Figure 4.27 Dynamics of a service model |

Service models also describe the dynamics of creating value. Activities, flow of resources, coordination, and interactions describe the dynamics (see Figure 4.27). This includes the cooperation and communication between service users and service agents such as service provider staff, processes or systems that the user interacts with, for example, a self-service menu. The dynamics of a service include patterns of business activity, demand patterns, exceptions and variations.

Service Design uses process maps, workflow diagrams, queuing models, and activity patterns to define the service models. As Service Transition evaluates the detailed service models to ensure they are fit for purpose and fit for use, it is important to have access to these models to develop the test models and plans.

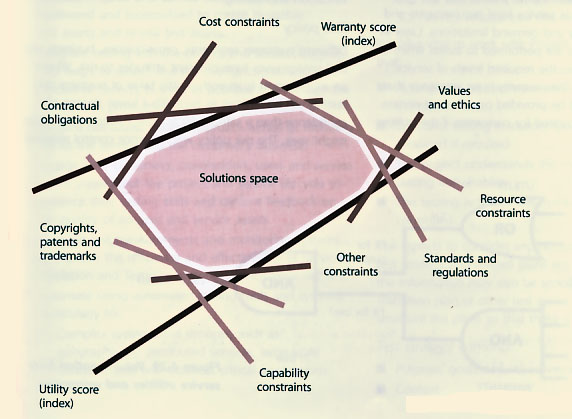

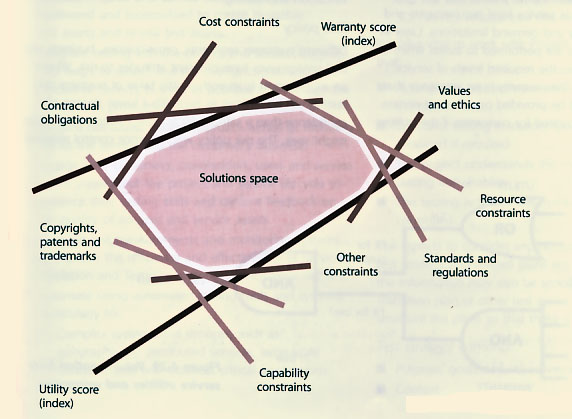

The Service Design package defines a set of design constraints (Figure 4.28) against which the service release and new or changed service will be developed and built.

Validation and testing should test the service at the boundaries to check that the design constraints are correctly defined and particularly if there is a design improvement to add or remove a constraint.

|

| Figure 4.28 Design Constraints of a Service |

4.5.4.2 Service Quality And Assurance

Service assurance is delivered through verification and validation, which in turn are delivered through testing (trying something out in conditions that represent the final live situation - a test environment) and by observation or review against a standard or specification.

Validation confirms, through the provision of objective evidence, that the requirements for a specific intended use or application have been fulfilled. Validation in a lifecycle context is the set of activities ensuring and gaining confidence that a system or service is able to accomplish its intended use, goals and objectives.

The validation of the service requirements and the related service Acceptance Criteria begins from the time that the service requirements are defined. There will be increasing levels of service validation testing performed as a service release progresses through the service lifecycle.

Verification is confirmation, through the provision of objective evidence, that specified requirements have been fulfilled, e.g. a service asset meets its specification.

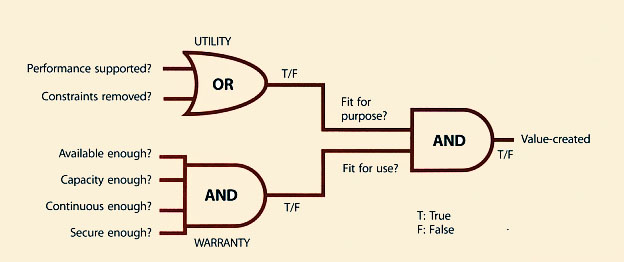

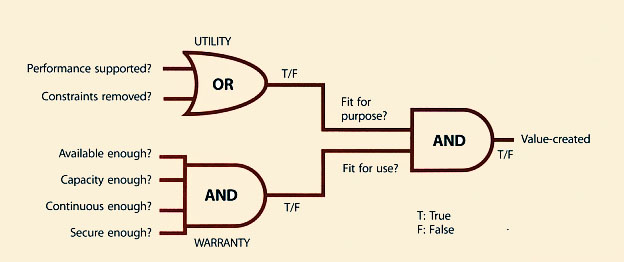

Early in the service lifecycle, validation confirms that the customer needs, contracts and service attributes, specified in the service package, are translated correctly into the Service Design as service level requirements and constraints, e.g. capacity and demand limitations. Later in the service lifecycle tests are performed to assess whether the actual service delivers the required levels of service, utilities and warranties. The warranty is an assurance that a product or service will be provided or will meet certain specifications. Value is created for customers if the utilities are fit for purpose and the warranties are fit for use (Figure 4.29). This is the focus of service validation.

4.5.4.3 Policies

Policies that drive and support Service Validation and Testing include:

- Service Quality Policy

Senior leadership will define the meaning of service quality. Service Strategy discusses the quality perspectives that a service provider needs to consider. In addition to service level metrics, service quality takes into account the positive impact of the service (utility) and the certainty of impact (warranty). The Service Strategy publication outlines four quality perspectives:

- Level of excellence

- Value for money

- Conformance to specifications

- Meeting or exceeding expectations.

One or more, if not all four, perspectives are usually required to guide the measurement and control of Service Management processes. The dominant perspective will influence how services are measured and controlled, which in turn will influence how services are designed and operated. Understanding the quality perspective will influence the Service Design and the approach to validation and testing.

- Risk Policy

Different customer segments, organizations, business units and service units have different attitudes to risk. Where an organization is an enthusiastic taker of business risk, testing will seek a lower degree of confidence than a safety-critical or regulated organization would. The risk policy will influence the control required through Service Transition, including the degree and level of validation and testing of service level requirements, utility and warranty, i.e. availability, security, continuity and capacity risks.

Figure 4.29 Value creation from service utilities and warranties

- Service Transition Policy

See Chapter 3.

- Release Policy

The type and frequency of releases will influence the testing approach. Frequent releases such as once-a-day drive requirements for re-usable test models and automated testing.

- Change Management Policy

The use of change windows can influence the testing that needs to be considered. For example, if there is a policy of 'substituting' a release package late in the change schedule, or if the scheduled release package is delayed, then additional testing may be required to test the resulting combination of releases where there are dependencies between them.
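Deciding which combinations need this extra testing is essentially a dependency question. The sketch below is a hypothetical illustration, not part of any ITIL tooling. It assumes the change schedule can be expressed as a map from each release package to the set of releases it depends on (the release identifiers are invented). Given that map, it returns every scheduled release that directly or transitively depends on a substituted or delayed package and may therefore need re-testing in combination.

```python
from collections import deque


def releases_needing_retest(depends_on: dict[str, set[str]], changed: str) -> set[str]:
    """Return every release that directly or transitively depends on `changed`.

    `depends_on` maps each scheduled release to the releases it depends on
    (a hypothetical representation of the change schedule).
    """
    # Invert the map so we can walk from a release to the releases that depend on it.
    dependents: dict[str, set[str]] = {}
    for release, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(release)

    # Breadth-first walk over everything downstream of the changed release.
    affected: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, set()):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return affected


# Hypothetical schedule: R2 builds on R1, R3 builds on R2, R4 is independent.
schedule = {"R1": set(), "R2": {"R1"}, "R3": {"R2"}, "R4": set()}
print(sorted(releases_needing_retest(schedule, "R1")))  # ['R2', 'R3']
```

R4 is left out because nothing links it to R1. Only R2 and R3 would be candidates for the additional combination tests.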

The testing policy will reflect the requirements from Service Strategy. Examples of policy statements include:

- Test Library and Re-Use Policy. The nature of IT Service Management is repetitive, and benefits greatly from re-use. The service test management role within an organization should take responsibility for creating, cataloguing and maintaining a library of test models, test cases, test scripts and test data that can be re-used. Projects and service teams need to be motivated and incentivized to create re-usable test assets and re-use test assets.

- Integrate testing into the project and service lifecycle. This helps to detect and remove functional and nonfunctional defects as soon as possible and reduces the incidents in production.

- Adopt a risk-based testing approach aimed at reducing risk to the service and the customer's business.

- Engage with customers, stakeholders, users and service teams throughout the project and service lifecycle to enhance their testing skills and capture feedback on the quality of services and service assets.

- Establish test measurements and monitoring systems to improve the efficiency and effectiveness of Service Validation and Testing as part of Continual Service Improvement.

- Automate testing using tools and systems, particularly for:

  - Complex systems and services, such as geographically distributed services, large-scale infrastructures and business critical applications

  - Situations where time to change is critical, e.g. if there are tight deadlines and a tendency to squeeze testing windows.

4.5.4.4 Test Strategy

A test strategy defines the overall approach to organizing testing and allocating testing resources. It can apply to the whole organization, a set of services or an individual service. Any test strategy needs to be developed with appropriate stakeholders to ensure there is sufficient buy-in to the approach.

Early in the lifecycle the service validation and test role needs to work with Service Design and service evaluation to plan and design the test approach using information from the service package, SLPs, SDP and the interim evaluation report. The activities will include:

- Translating the Service Design into test requirements and test models, e.g. understanding combinations of service assets required to deliver a service as well as the constraints that define the context, approach and boundaries to be tested

- Establishing the best approach to optimize the test coverage given the risk profile and change impact and resource assessment

- Translating the service Acceptance Criteria into entry and exit criteria at each level of testing to define the acceptable level of margin for errors at each level

- Translating risks and issues from the impact, resource and risk assessment on the related RFC for the SDP/service release into test requirements.
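The following is a hypothetical sketch of how service Acceptance Criteria might be translated into exit criteria for each test level. It assumes two measures, a minimum pass rate and a maximum number of open critical defects. The thresholds and level names are invented for illustration. Real criteria would come from the service Acceptance Criteria and the risk assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExitCriteria:
    """Hypothetical exit criteria for one level of testing."""
    min_pass_rate: float     # fraction of executed test cases that must pass
    max_open_critical: int   # critical defects allowed to remain open at exit


@dataclass(frozen=True)
class LevelResults:
    executed: int
    passed: int
    open_critical: int


def meets_exit_criteria(criteria: ExitCriteria, results: LevelResults) -> bool:
    """True if the results for a test level satisfy its exit criteria."""
    if results.executed == 0:
        return False  # nothing executed means no objective evidence to exit on
    pass_rate = results.passed / results.executed
    return (pass_rate >= criteria.min_pass_rate
            and results.open_critical <= criteria.max_open_critical)


# The acceptable margin for error narrows as testing approaches service acceptance.
criteria_by_level = {
    "component": ExitCriteria(min_pass_rate=0.90, max_open_critical=2),
    "service acceptance": ExitCriteria(min_pass_rate=0.98, max_open_critical=0),
}
results = LevelResults(executed=200, passed=194, open_critical=1)
for level, criteria in criteria_by_level.items():
    print(level, meets_exit_criteria(criteria, results))
# component True
# service acceptance False
```

The same results (a 97% pass rate and one open critical defect) are enough to leave component testing but not service acceptance. This shows how each level of testing can define its own acceptable margin for errors.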

It is also vital to work with Project Managers to ensure that:

- Appropriate test activities and resources are included in Project Plans

- Specialist testing resources (people, tools, licences) are allocated if required

- The project understands the mandatory and optional testing deliverables

- The testing activities are managed, monitored and controlled.

The aspects to consider and document in developing the test strategy and related plans are shown below. Some of the information may also be specified in the Service Transition plan or other test plans and it is important to structure the plans so that there is minimal duplication.

Test Strategy Contents

- Purpose, goals and objectives of service testing

- Context:

  - Applicable standards, legal and regulatory requirements

  - Applicable contracts and agreements

  - Service Management policies, processes and standards

  - Policies, processes and practices applicable to testing

- Scope and organizations:

  - Service provider teams

  - Test organization

  - Third parties, strategic partners, suppliers

  - Business units/locations

  - Customers and users

- Test process:

  - Test management and control - recording, progress monitoring and reporting

  - Test planning and estimation, including cost estimates for service planning, resources, scheduling

  - Test preparation, e.g. site/environment preparation, installation prerequisites

  - Test activities - planning, performing and documenting test cases and results

  - Test metrics and improvement

- Identification of items to be tested:

  - Service package

  - Service level package

  - SDP - service model (structure and dynamics), solution architecture design

  - Service Operation plan

  - Service Management Plans

  - Critical elements where business priorities and risk assessment suggest testing should concentrate

  - Business units, service units, locations where the tests will be performed

  - Service provider interfaces

- Approach:

  - Selecting the test model

  - Test levels

  - Test approaches, e.g. regression testing, modelling, simulation

  - Degree of independence for performing, analysing and evaluating tests

  - Re-use - experience, expertise, knowledge and historical data

  - Timing, e.g. focusing on testing individual service assets early vs testing the whole service later

  - Developing and re-using test designs, tools, scripts and data

  - Error and change handling and control

  - Measurement system

- Criteria:

  - Pass/fail criteria

  - Entry and exit criteria for each test stage

  - For stopping or re-starting testing activities

- People requirements:

  - Roles and responsibilities including approval/rejection (these may be at different levels, e.g. rejecting an expensive and long-running project typically requires higher authority than accepting it as planned)

  - Assigning and scheduling training and knowledge transfer

  - Stakeholders - service provider, suppliers, customer, user involvement

- Environment requirements:

  - Test environments to be used, locations, organizational, technical

  - Requirements for each test environment

  - Planning and commissioning of test environment

- Deliverables:

  - Mandatory and optional documentation

  - Test plans

  - Test specifications - test design, test case, test procedure

  - Test results and reports

  - Validation and qualification report

  - Test summary reports.

4.5.4.5 Test Models

A test model includes a test plan, what is to be tested and the test scripts that define how each element will be tested. A test model ensures that testing is executed consistently in a repeatable way that is effective and efficient. The test scripts define the release test conditions, associated expected results and test cycles.

To ensure that the process is repeatable, test models need to be well structured in a way that:

- Provides traceability back to the requirement or design criteria

- Enables auditability through test execution, evaluation and reporting

- Ensures the test elements can be maintained and changed.
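The following is a minimal, hypothetical sketch of the traceability property. It assumes every test case in a test model records the identifier of the requirement or design criterion it verifies. The check then reports requirements that no test case covers, and test cases whose trace points at a requirement that does not exist. All identifiers and expected results below are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TracedTestCase:
    case_id: str
    requirement_id: str    # trace back to a requirement or design criterion
    expected_result: str


def trace_report(requirement_ids: set[str], cases: list[TracedTestCase]) -> dict[str, set[str]]:
    """Report coverage gaps and broken traces in a test model."""
    traced = {case.requirement_id for case in cases}
    return {
        # Requirements that no test case traces back to.
        "untested_requirements": requirement_ids - traced,
        # Test cases whose trace points at an unknown requirement.
        "orphan_cases": {c.case_id for c in cases if c.requirement_id not in requirement_ids},
    }


requirements = {"REQ-1", "REQ-2", "REQ-3"}
cases = [
    TracedTestCase("TC-1", "REQ-1", "Order confirmed within 5 seconds"),
    TracedTestCase("TC-2", "REQ-2", "Help link visible on front page"),
    TracedTestCase("TC-9", "REQ-7", "Legacy report still produced"),
]
report = trace_report(requirements, cases)
print(sorted(report["untested_requirements"]))  # ['REQ-3']
print(sorted(report["orphan_cases"]))           # ['TC-9']
```

A check like this can be re-run whenever the test model is changed. That supports both the auditability and the maintainability points above.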

Examples of test models are illustrated in Table 4.10.

| Test model | Objective/target deliverable | Test conditions based on |
|---|---|---|
| Service contract test model | To validate that the customer can use the service to deliver a value proposition. | Contract requirements. Fit for purpose, fit for use criteria. |
| Service requirements test model | To validate that the service provider can/has delivered the service required and expected by the customer. | Service requirements and Service Acceptance Criteria. |
| Service level test model | To ensure that the service provider can deliver the service level requirements, and service level requirements can be met in the production environment, e.g. testing the response and fix time, availability, product delivery times, support services. | Service level requirements, SLA, OLA. Service model. |
| Service test model | To ensure that the service provider is capable of delivering, operating and managing the new or changed service using the 'as-designed' service model that includes the resource model, cost model, integrated process model, capacity and performance model etc. | Service model. |
| Operations test model | To ensure that the Service Operations teams can operate and support the new or changed service/service component including the service desk, IT operations, application management, technical management. It includes local IT support staff and business representatives responsible for IT service support and operations. There may be different models at different release/test levels, e.g. technology infrastructure, applications. | Service model, service operations standards, processes and plans. |
| Deployment release test model | To verify that the deployment team, tools and procedures can deploy the release package into a target deployment group or environment within the estimated timeframe. To ensure that the release package contains all the service components required for deployment, e.g. by performing a configuration audit. | Release and deployment design and plan. |
| Deployment installation test model | To test that the deployment team, tools and procedures can install the release package into a target environment within the estimated timeframe. | Release and deployment design and plan. |
| Deployment verification test model | To test that a deployment has completed successfully and that all service assets and configurations are in place as planned and meet their quality criteria. | Tests and audits of 'actual' service assets and configurations. |

Table 4.10 Examples of service test models

As the Service Design phase progresses, the tester can use the emerging Service Design and release plan to determine the specific requirements, validation and test conditions, cases and mechanisms to be tested. An example is shown in Table 4.11.

| Validation reference | Validation condition | Test levels | Test case | Mechanism |
|---|---|---|---|---|
| 1.1 | 20% improvement in user survey rating | 1 | M020 | Survey |
| 1.2 | 20% reduction in user complaints | 1 | M023 | Process metrics |
| 1.3 | 20% increase in use of self-service channel | 2 | M123 | Usage statistics |
| 1.4 | Help function available on front page of self-service point application | 3 | T235 | Functional test |
| 1.5 | Web pages comply with web accessibility standards | 4 (Application) | T201 | Usability test |
| 1.6 | 10% increase in public self-service points | 4/5 (Technical infrastructure) | T234 | Installation statistics |
| 1.7 | Public self-service points comply with standard IS1223 | 4/5 (Technical infrastructure) | T234 | Compliance test |

Table 4.11 Service requirements, 1: improve user accessibility and usability
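Several conditions in Table 4.11 are expressed as percentage changes, which implies that a baseline has been measured before the change. The sketch below is minimal and assumes such a baseline exists for each measure. All baseline and measured figures are invented. It evaluates conditions 1.1 to 1.3 as relative changes against their targets.

```python
import math


def relative_change(baseline: float, measured: float) -> float:
    """Fractional change from baseline, e.g. 0.2 for a 20% increase."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (measured - baseline) / baseline


def condition_met(baseline: float, measured: float, target_pct: float, direction: str) -> bool:
    """Check an 'X% increase' or 'X% reduction' validation condition."""
    change = relative_change(baseline, measured)
    if direction == "decrease":
        change = -change          # a reduction counts as progress towards the target
    elif direction != "increase":
        raise ValueError("direction must be 'increase' or 'decrease'")
    target = target_pct / 100
    # Tolerance so that an exact 20% change is not rejected by float rounding.
    return change >= target or math.isclose(change, target)


# Hypothetical baseline and measured figures for three rows of Table 4.11.
checks = [
    ("1.1", 60, 75, 20, "increase"),      # user survey rating
    ("1.2", 200, 170, 20, "decrease"),    # user complaints
    ("1.3", 1000, 1200, 20, "increase"),  # use of self-service channel
]
for ref, baseline, measured, target_pct, direction in checks:
    print(ref, condition_met(baseline, measured, target_pct, direction))
# 1.1 True
# 1.2 False
# 1.3 True
```

In this invented example, condition 1.2 fails because complaints fell by only 15%. The mechanism column (process metrics) tells the tester where the baseline and measured figures would come from.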

4.5.4.6 Validation And Testing Perspectives

Effective validation and testing focuses on whether the service will deliver as required. This is based on the perspective of those who will use, deliver, deploy, manage and operate the service. The test entry and exit criteria are developed as the Service Design Package is developed. These will cover all aspects of the service provision from different perspectives including:

- Service Design - functional, management and operational

- Technology design

- Process design

- Measurement design

- Documentation

- Skills and knowledge.

Service acceptance testing starts with the verification of the service requirements. For example, customers, customer representatives and other stakeholders who sign off the agreed service requirements will also sign off the service Acceptance Criteria and service acceptance test plan. The stakeholders include:

- Business customers/customer representatives

- Users of the service within the customer's business who will use the new or changed service to assist them in delivering their work objectives and deliver service and/or product to their customers

- Suppliers

- Service provider/service unit.

Business Users And Customer Perspective

The business involvement in acceptance testing is central to its success, and is included in the Service Design package, enabling adequate resource planning.

From the business's perspective this is important in order to:

- Have a defined and agreed means for measuring the acceptability of the service including interfaces with the service provider, e.g. how errors or queries are communicated via a single point of contact, monitoring progress and closure of change requests and incidents

- Understand and make available the appropriate level and capability of resource to undertake service acceptance.

From the service provider's perspective the business involvement is important to:

- Keep the business involved during build and testing of the service to avoid any surprises when service acceptance takes place

- Ensure the overall quality of the service delivered into acceptance is robust, since this starts to set business perceptions about the quality, reliability and usability of the system, even before it goes live

- Deliver and maintain solid and robust acceptance test facilities in line with business requirements

- Understand where the acceptance test fits into any overall business service or product development testing activity.

Even when in live operation, a service is not 'emotionally' accepted by the customer and users until they become familiar and content with it. The full benefit of a service will not be realized until that emotional acceptance has been achieved.

Emotional (non) acceptance

A southern US steel mill implemented a new order manufacturing service. It was commissioned, designed and delivered by an outside vendor. The service delivered was innovative and fully met the agreed criteria. The end result was that the company sued the vendor, claiming that the service was not usable because factory personnel (due to lack of training) did not know how to use the system and therefore did not emotionally accept it.

Testing is one area where 'use cases', focusing on the usable results from a service, can be a valuable aid to assessing a service's usefulness to the business.

User Testing - Application, System, Service

User testing comprises tests to determine whether the service meets the functional and quality requirements of the end users (customers) by executing defined business processes in an environment that simulates the live operational environment as closely as possible. This will include changes to the system or business process. Full details of the scope and coverage will be defined in the user test and user acceptance test (UAT) plans. The end users will test the functional requirements, establishing to the customer's agreed degree of confidence that the service will deliver as they require. They will also test the Service Management activities they are involved with, e.g. the ability to contact and use the service desk, response to diagnostic scripts, incident management, request fulfilment and change request management.

A key practice is to make sure that business users participating in testing have their expectations clearly set: they should realize that this is a test and expect that some things may not go well. Otherwise there is a risk that they form an opinion too early about the quality of the service being tested, and word may spread that the service is of poor quality and should not be used.

Operations And Service Improvement Perspective

Steps must be taken to ensure that IT staff requirements have been delivered before deployment of the service.

Operations staff will use the service acceptance step to ensure that appropriate:

- Technological facilities are in place to deliver the new or changed service

- Staff skills, knowledge and resource are available to support the service after go-live

- Supporting processes and resources are in place, e.g. service desk, second/third line support, including third party contracts, capacity and availability monitoring and alerting

- Business and IT continuity has been considered

- Access is available to documentation and SKMS.

Continual Service Improvement will also inherit the new or changed service into the scope of their improvement programme, and should satisfy themselves that they have sufficient understanding of its objectives and characteristics.

4.5.4.7 Levels Of Testing And Test Models

Testing is related directly to the building of service assets and products so that each one has an associated acceptance test and activity to ensure it meets requirements. This involves testing individual service assets and components before they are used in the new or changed service.

Each service model and associated service deliverable is supported by its own re-usable test model that can be used for regression testing during the deployment of a specific release as well as for regression testing in future releases. Test models help with building quality early into the service lifecycle rather than waiting for results from tests on a release at the end.

Levels of build and testing are described in the release and deployment section (paragraph 4.4.5.3). The levels of testing that are to be performed are defined by the selected test model.

Using a model such as the V-model (Figure 4.30) builds in Service Validation and Testing early in the service lifecycle. It provides a framework to organize the levels of configuration items to be managed through the lifecycle and the associated validation and testing activities both within and across stages.

The levels of testing are derived from the way a system is designed and built up. A model that maps the types of test to each stage of development in this way is known as a V-model. The V-model provides one example of how the Service Transition levels of testing can be matched to corresponding stages of service requirements and design.

Figure 4.30 Example of service V-model

The left-hand side represents the specification of the service requirements down to the detailed Service Design. The right-hand side focuses on the validation activities that are performed against the specifications defined on the left-hand side. At each stage on the left-hand side, there is direct involvement by the equivalent party on the right-hand side. It shows that service validation and acceptance test planning should start with the definition of the service requirements. For example, customers who sign off the agreed service requirements will also sign off the service Acceptance Criteria and test plan.
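The pairing described above can be sketched as a small data structure. This is a hypothetical illustration. The level names and signatories below are invented and loosely modelled on a typical service V-model; they are not a reproduction of Figure 4.30. Each level pairs a left-hand specification with its right-hand validation activity and the party involved on both sides. Specifications are ordered top-down, and validation runs bottom-up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VLevel:
    level: int           # 1 = top of the V (business requirements)
    specification: str   # left-hand side, defined top-down
    validation: str      # right-hand side, executed bottom-up
    sign_off: str        # party involved on both sides of this level


def specification_order(levels: list[VLevel]) -> list[str]:
    """Left-hand side: specifications are written from the top level down."""
    return [lv.specification for lv in sorted(levels, key=lambda lv: lv.level)]


def validation_order(levels: list[VLevel]) -> list[str]:
    """Right-hand side: validation runs from the bottom level up."""
    return [lv.validation for lv in sorted(levels, key=lambda lv: lv.level, reverse=True)]


def paired_validation(levels: list[VLevel], level: int) -> tuple[str, str]:
    """When a specification is agreed, its paired test activity and signatory are known too."""
    for lv in levels:
        if lv.level == level:
            return lv.validation, lv.sign_off
    raise KeyError(f"no V-model level {level}")


# Illustrative levels only.
levels = [
    VLevel(1, "Define customer/business requirements", "Validate service package and contracts", "Customer"),
    VLevel(2, "Define service requirements", "Service acceptance test", "Customer"),
    VLevel(3, "Design service solution", "Service operational test", "Service provider"),
    VLevel(4, "Design service release", "Release package test", "Release team"),
    VLevel(5, "Develop service components", "Component and assembly test", "Build team"),
]
print(validation_order(levels)[0])     # Component and assembly test
print(paired_validation(levels, 2))    # ('Service acceptance test', 'Customer')
```

Looking up the paired validation at the moment a specification is agreed reflects the point made above: acceptance test planning starts when the service requirements are defined, not when testing begins.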

4.5.4.8 Testing Approaches And Techniques

There are many approaches to conducting validation activities and tests. Depending on the constraints, they can be combined to meet the requirements of different types of service, service model, risk profile, skill levels, test objectives and levels of testing. Examples include:

- Document review

- Modelling and measuring - suitable for testing the service model and Service Operations plan

- Risk-based approach that focuses on areas of greatest risk, e.g. business critical services, risks identified in change impact analysis and/or service evaluation

- Standards compliance approach, e.g. international or national standards or industry specific standards

- Experience-based approach, e.g. using subject matter experts in the business, service or technical arenas to provide guidance on test coverage

- Approach based on an organization's lifecycle methods, e.g. waterfall, agile

- Simulation

- Scenario testing

- Role playing

- Prototyping

- Laboratory testing

- Regression testing

- Joint walkthrough/workshops

- Dress/service rehearsal

- Conference room pilot

- Live pilot.

To make the best use of testing resources, test activities should be allocated according to service importance, anticipated business impact and risk. Business impact analyses carried out during design for business and service continuity management and availability purposes are often very relevant to establishing testing priorities and schedules, and should be made available, subject to confidentiality and security concerns.
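A risk-based allocation of test effort can be sketched as a proportional split of a fixed budget. This is a minimal illustration under stated assumptions: the 1 to 5 scales, the multiplicative priority score and the service names are all hypothetical, and an organization would derive its own scores from its business impact and change impact analyses.

```python
from dataclasses import dataclass

@dataclass
class ServiceArea:
    name: str
    importance: int       # 1 (low) to 5 (high); hypothetical scale
    business_impact: int  # 1 to 5, e.g. taken from a business impact analysis
    risk: int             # 1 to 5, e.g. from change impact analysis

    @property
    def priority(self) -> int:
        # Multiplicative score: an assumption for illustration, not a prescribed formula.
        return self.importance * self.business_impact * self.risk

def allocate_test_hours(areas: list[ServiceArea], total_hours: float) -> dict[str, float]:
    """Split a fixed testing budget across service areas in proportion to priority."""
    total_score = sum(a.priority for a in areas)
    if total_score <= 0:
        raise ValueError("at least one area needs a positive priority score")
    return {a.name: total_hours * a.priority / total_score for a in areas}

if __name__ == "__main__":
    areas = [
        ServiceArea("Payments", importance=5, business_impact=5, risk=4),
        ServiceArea("Reporting", importance=2, business_impact=2, risk=2),
        ServiceArea("Intranet news", importance=1, business_impact=1, risk=2),
    ]
    for name, hours in allocate_test_hours(areas, total_hours=220).items():
        print(f"{name}: {hours:.0f} h")
```

With these hypothetical scores the payments service receives 200 of the 220 hours, reporting 16 and intranet news 4. A multiplicative score deliberately concentrates effort where importance, impact and risk are all high.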

4.5.4.9 Design Considerations

Service test design aims to develop test models and test cases that measure the correct things in order to establish whether the service will meet its intended use within the specified constraints. It is important to avoid focusing too much on the lower level components that are often easier to test and measure. Adopting a structured approach to scoping and designing the tests helps to ensure that priority is given to testing the right things. Test models must be well structured and repeatable to facilitate auditability and maintainability.

The service is designed in response to the agreed business and service requirements, and testing aims to establish whether these have been achieved. Service validation and test designs consider potential changes in circumstances and are flexible enough to be changed. They may need to be changed after failures in early service tests reveal a change in the environment or circumstances, and therefore a need for a change in the testing approach.

Design considerations are applicable for service test models, test cases and test scripts and include:

Business/Organization:

- Alignment with business services, processes and procedures

- Business dependencies, priorities, criticality and impact

- Business cycles and seasonal variations

- Business transaction levels

- The numbers and types of users and anticipated future growth

- Possible requirements due to new facilities and functionality

- Business scenarios to test the end to end service

Service architecture and performance:

- Service Portfolio/structure of the services, e.g. core service, supporting and underpinning supplier services

- Options for testing different types of service assets, utilities and warranties, e.g. availability, security, continuity

- Service level requirements and service level targets

- Service transaction levels

- Constraints

- Performance and volume predictions

- Monitoring, modelling and measurement system, e.g. is there a need for significant simulation to recreate peak business periods? Will the new or changed service interface with existing monitoring and management tools?

- Service release test environment requirements

Service Management:

- Service Management models, e.g. capacity, cost, performance models

- Service Operations model

- Service support model

- Changes in requirements for Service Management information

- Changes in volumes of service users and transactions

Application information and data:

- Validating that the application works with the information/databases and technical infrastructure

- Functionality testing to test the behaviour of the infrastructure solution and verify: i) that there are no conflicts in versions of software, hardware or network components; and ii) that common infrastructure services are used according to the design

- Access rights set correctly

Technical infrastructure:

- Physical assets - do they meet their specifications?

- Technical resource capacity, e.g. storage, processing power, power, network bandwidth

- Spares - are sufficient spares available or ordered and scheduled for delivery? Are hardware/software settings recorded and correct?

Aspects that generally need to be considered in designing service tests include:

- Finance - Is the agreed budget adequate, has spending exceeded budget, have costs altered (e.g. software licence and maintenance charge increases)?

- Documentation - Is all necessary documentation available or scheduled for production? Is it practicable (sufficiently intuitive for the intended audience and available in all required languages) and in the correct formats, such as checklists and service desk scripts?

- Supplier of the service, service asset, component - What are the internal or external interfaces?

- Build - Can the service, service asset or component be built into a release package and test environments?

- Testable - Is it testable with the resources, time and facilities available or obtainable?

- Traceability - What traceability is there back to the requirements?

- Where and when could testing take place? Are there unusual conditions under which a service might need to run that should be tested?

- Remediation - What plans are there to remediate or back out a release through the environments?

Awareness of current technological environments for different types of business, customer, staff and user is essential to maintaining a valid test environment. The design of the test environments must consider the current and anticipated live environment at the time the service is due for operational handover and for the period of its expected operation. In practice, for most organizations, looking more than six to nine months into the business or technological future is about the practical limit. In some sectors, however, much longer lead times require organizations to predict further ahead, even to the extent of restricting technological innovation in the interests of thorough and expansive testing; examples include military systems, NASA and other safety-critical environments.

Designing the management and maintenance of test data needs to address relevant issues such as:

- Separation of test data from any live data, including steps to ensure that test data cannot be mistaken for live data when being used, and vice versa (there are many real-life examples of live data being copied and used as test data and being the basis for business decisions e.g. desktop icons pointing at the wrong database)

- Data protection regulations - when live data is used to generate a test database, any information that can be traced to individuals may well be covered by data protection legislation, which may, for example, forbid its transportation between countries

- Backup of test data, and restoration to a known baseline to enable repeatable testing; this also applies to the initial conditions for hardware tests, which should be baselined

- Volatility of test data and test environments - processes and procedures should be in place to build and tear down test environments quickly for a variety of testing needs, and care must be taken to ensure that the testing activities of one group do not compromise those of another group

- Balancing cost and benefit - test environments populated with relevant data are expensive to build and to maintain, so the benefits in terms of risk reduction to the business services must be balanced against the cost of provision. How closely the test environment matches live production is also a key consideration, to be weighed by balancing cost against risk.
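Two of the practices above, keeping test data unmistakably separate from live data and restoring to a known baseline for repeatable tests, can be sketched with a small in-memory store. This is a hypothetical illustration: the `TestDataStore` class and the `TEST-` key prefix are assumptions, and real environments would enforce separation at the database, network and access-control level rather than in application code.

```python
import copy

class TestDataStore:
    """Hypothetical in-memory store illustrating two test data practices:
    every record is visibly marked as test data, and the store can be reset
    to a known baseline so that tests are repeatable."""

    MARKER = "TEST-"  # hypothetical key prefix that makes test records unmistakable

    def __init__(self, records: dict[str, dict]):
        # Reject any record not marked as test data, so live data cannot slip in unnoticed.
        unmarked = [key for key in records if not key.startswith(self.MARKER)]
        if unmarked:
            raise ValueError(f"records not marked as test data: {unmarked}")
        self._baseline = copy.deepcopy(records)
        self._records = copy.deepcopy(records)

    def get(self, key: str) -> dict:
        return self._records[key]

    def update(self, key: str, field: str, value) -> None:
        self._records[key][field] = value

    def restore_baseline(self) -> None:
        """Discard everything a test run changed and return to the agreed baseline."""
        self._records = copy.deepcopy(self._baseline)

if __name__ == "__main__":
    store = TestDataStore({"TEST-CUST-001": {"balance": 100}})
    store.update("TEST-CUST-001", "balance", 40)   # a test run changes the data
    store.restore_baseline()                        # the next run starts from the baseline
    print(store.get("TEST-CUST-001"))               # {'balance': 100}
```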

4.5.4.10 Types Of Testing

The following types of test are used to verify that the service meets the user and customer requirements as well as the service provider's requirements for managing, operating and supporting the service. Care must be taken to establish the full range of likely users, and then to test all the aspects of the service, including support and reporting.

Functional testing will depend on the type of service and channel of delivery. Functional testing is covered in many testing standards and best practices (see Further information).

Service testing will include many non-functional tests. These tests can be conducted at several test levels to help build up confidence in the service release. They include:

- Usability testing

- Accessibility testing

- Process and procedure testing

- Knowledge transfer and competence testing

- Performance, capacity and resilience testing

- Volume, stress, load and scalability testing

- Availability testing

- Backup and recovery testing

- Coherency testing

- Compatibility testing

- Documentation testing

- Regulatory and compliance testing

- Security testing

- Logistics, deployability and migration testing

- Coexistence and compatibility testing

- Remediation, continuity and recovery testing

- Configuration, build and installability testing

- Operability and maintainability testing.

There are several types of testing from different perspectives, which are described below.


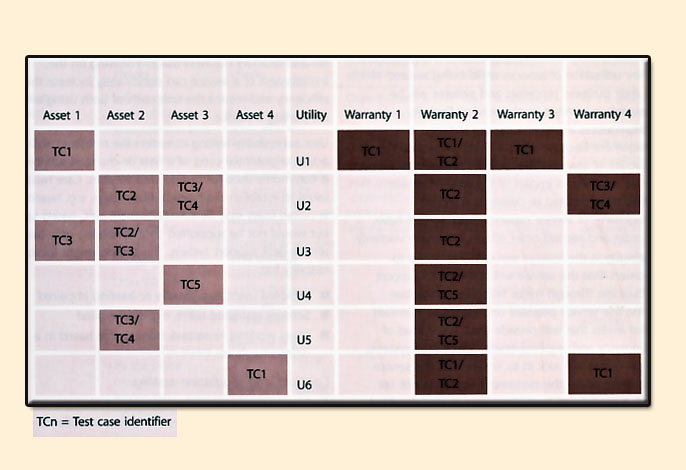

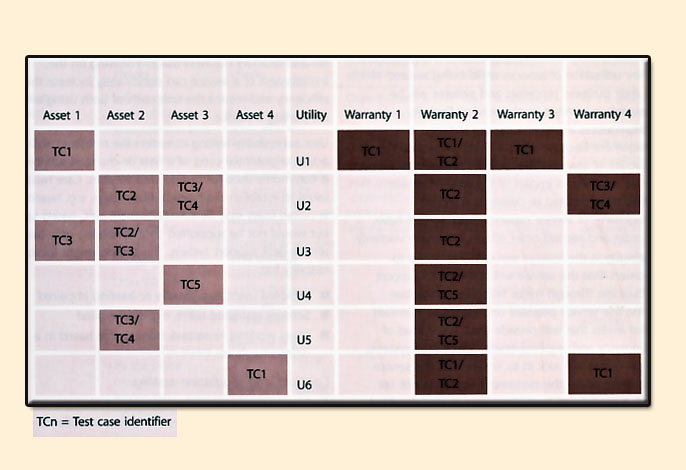

| Figure 4.31 Designing tests to cover range of service assets, utilities and warranties |

Service requirements and structure testing - service provider, users and customers

Validation of the service attributes against the contract, service package and service model includes evaluating the integration or 'fit' of the utilities across the core and supporting services and service assets to ensure there is complete coverage and no conflicts.

Figure 4.31 shows a matrix of service utility to service warranty and the service assets that support each utility. This matrix can be used to design the service tests so that the service structure and test design coverage are appropriate. Service test cases are designed to test the service requirements in terms of utility, capacity, resource utilization, finance and risks. For example, approaches to testing the risk of service failure include performance, stress, usability and security testing.

Service level testing - service level managers, operations managers and customers

Validate that the service provider can deliver the service level requirements, e.g. testing the response and fix time, availability, product delivery times and support services.

The performance of a service asset should deliver the utility or service expected. This does not necessarily mean that the asset must deliver everything it is capable of in its own right. For example, a car's factory specification may state that it is capable of 150 kph, but for most customers 100 kph will fully meet the requirement.
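A service level test compares measured behaviour with the agreed target, not with the asset's maximum capability. The sketch below assumes a hypothetical requirement of the form "the 90th or 95th percentile response time must not exceed a target" and uses the nearest-rank percentile; the target, percentile and sample data are illustrative only.

```python
import math

def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty sample (0 < pct <= 100)."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]

def meets_response_target(samples_s: list[float], target_s: float, pct: float = 95.0) -> bool:
    """True if the pct-th percentile response time is within the agreed target.

    The test asks only whether the agreed service level is met, not how fast
    the underlying assets could go in their own right."""
    return percentile(samples_s, pct) <= target_s

if __name__ == "__main__":
    measured = [0.8, 1.1, 0.9, 1.7, 1.2, 0.7, 1.0, 1.4, 1.9, 3.5]  # seconds, hypothetical
    print(meets_response_target(measured, target_s=2.0, pct=90))  # True
    print(meets_response_target(measured, target_s=2.0, pct=95))  # False
```

The same measurements pass a 90th-percentile target of 2 seconds but fail a 95th-percentile one, which is why the exact form of the service level requirement has to be agreed before the test is designed.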

Warranty And Assurance Tests - Fit For Use Testing

As discussed earlier in this section, the customers see the service delivered in terms of warranties against the utilities that add value to their assets in order to deliver the expected business support. For any service, the warranties are expressed in measurable terms that enable tests to be designed to establish that the warranty can be delivered (within the agreed degree of confidence). The degree of detail may vary considerably, but will always reflect the agreement established during Service Design. In all cases the warranty will be described, and should be measurable, in terms of the customer's business and the potential effects on it of success or failure of the service to meet that warranty.

The following tests are used to provide confidence that the warranties can be delivered, i.e. the service is fit for use:

- Availability is the most elementary aspect of assuring value to customers. It assures the customer that services will be available for use under agreed terms and conditions. Services are expected to be made available to designated users only within specified areas, locations and time schedules.

- Capacity is an aspect of service warranty that assures the customer that a service will support a specified level of business activity or demand at a specified level of service quality. Customers can make changes to their utilization of services while being assured that their business processes and systems will be adequately supported by the service. Capacity management is a critical aspect of Service Management because it has a direct impact on the availability of services. The capacity available to support services also has an impact on the level of service continuity committed or delivered. Effective management of service capacity can therefore have first-order and second-order effects on service warranty.

- Continuity is the level of assurance provided to customers that the service will continue to support the business through major failures or disruptive events. The service provider undertakes to maintain service assets that will provide a sufficient level of contingency and responsiveness. Specialized systems and processes will kick in to ensure that the service levels received by the customer's assets do not fall below a predefined level. Assurance is also provided that normal service levels will be restored within a predefined time limit to limit the overall impact of a failure or event. The effectiveness of service continuity is measured in terms of disturbance to the productive state of customer assets.

- Security assures that the utilization of services by customers will be secure. This means that customer assets within the scope of service delivery and support will not be exposed to certain security risks. Service providers undertake to implement general and service-level controls that will ensure that the value provided to customers is complete and not eroded by any avoidable costs and risks. Service security covers the following aspects of reducing risks:

- Authorized and accountable usage of services as specified by customer

- Protection of customers' assets from unauthorized or malicious access

- Security zones between customer assets and service assets

Service security also plays a supporting role to the other three aspects of service warranty and, when effective, has a positive impact on those aspects. Service security inherits all the general properties of the security of physical and human assets, as well as intangibles such as data, information, coordination and communication.
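Because warranties are expressed in measurable terms, an availability test can compare measured availability with the agreed target. The sketch below uses the common form availability (%) = (agreed service time - downtime) / agreed service time x 100, and counts only the part of each outage that falls inside the agreed service hours. The date, service hours and outages are hypothetical, and the helper assumes the outage intervals do not overlap one another.

```python
from datetime import datetime, timedelta

def downtime_within(window_start: datetime, window_end: datetime,
                    outages: list[tuple[datetime, datetime]]) -> timedelta:
    """Sum the parts of each outage that fall inside the agreed service window.
    Assumes the outage intervals do not overlap one another."""
    total = timedelta(0)
    for start, end in outages:
        overlap = min(end, window_end) - max(start, window_start)
        if overlap > timedelta(0):
            total += overlap
    return total

def measured_availability(agreed_service_time: timedelta, downtime: timedelta) -> float:
    """Availability (%) = (agreed service time - downtime) / agreed service time x 100."""
    if agreed_service_time <= timedelta(0):
        raise ValueError("agreed service time must be positive")
    return (agreed_service_time - downtime) / agreed_service_time * 100

if __name__ == "__main__":
    day = datetime(2024, 3, 4)  # hypothetical test day
    start, end = day.replace(hour=8), day.replace(hour=18)  # hypothetical agreed hours
    outages = [
        (day.replace(hour=7), day.replace(hour=8, minute=10)),    # 10 min inside hours
        (day.replace(hour=12), day.replace(hour=12, minute=20)),  # 20 min inside hours
        (day.replace(hour=19), day.replace(hour=20)),             # outside hours: ignored
    ]
    down = downtime_within(start, end, outages)
    print(down, f"{measured_availability(end - start, down):.1f}%")  # 0:30:00 95.0%
```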

Usability - Users And Maintainers

Usability testing is likely to be of increasing importance as more services become widely used as a part of everyday life and ordinary business usage. Focusing on the intuitiveness of a service can significantly increase the efficiency and reduce the unit costs of both using and supporting a service.

User accessibility testing considers the restricted abilities of actual or potential users of a new or changed service and is commonly used for testing web services. Care must be taken to establish the types of likely users, e.g. hearing impaired users may be able to operate a PC-based service but would not be supported by a telephone-only-based service-desk support system. This testing might focus on usability for:

- Disabled users, e.g. visually or hearing impaired

- Sensory restricted users, e.g. colour-blind

- Users working in second language or based in a different culture.

Contract And Regulation Testing

Audits and tests are conducted to check that the criteria in contracts have been met before the end-to-end service is accepted. Service providers may have a contractual requirement to comply with the requirements of ISO/IEC 20000 or other standards, and they would need to ensure that the relevant clauses of the standard are met during implementation of a new or changed service and release.

Regulatory acceptance testing is required in some industries such as defence, financial services and pharmaceuticals.

Compliance Testing

Testing is conducted to check compliance against internal regulations and existing commitments of the organization, e.g. fraud checks.

Service Management Testing

The service models will dictate the approach to testing the integrated Service Management processes. ISO/IEC 20000 specifies the minimum requirements for each process to be compliant with the standard, including maintenance of the interrelationships between processes.

Examples of Service Management manageability tests are shown in Table 4.12.

| Service Management function | Design phase manageability checks | Build phase manageability checks | Deployment phase manageability checks | Operating manageability checks | Early life support and CSI manageability checks |
|---|---|---|---|---|---|
| Configuration Management | Are the designers aware of the corporate standards used for Configuration Management? How does the design meet organizational standards for acceptable configurations? Does the design support the concept of version control? Is the design created in a way that allows for the logical breakdown of the service into configuration items (CIs)? | Have the developers built the service, application and infrastructure to conform to the corporate standards that are used for Configuration Management? Does the service use only standard supporting systems and tools that are considered acceptable? Does the service include support for version, build, baseline and release control and management? Have the developers built the chosen CI structure into the service, application and infrastructure? | Does the service deployment update the CMS at each stage of the rollout? Is the deployment team using an updated inventory to complete the plan and the deployment? | Can the operations team gain access to the CMS so that they can confirm the service they are managing is the correct version and configured correctly? Are the operating instructions under version and build control similar to those used for the application builds? | As the service is reviewed within the optimize phase, is the CMS used to assist with the review? Are Configuration Management personnel involved in the optimization process, including providing advice in the use of and updating the inventory? |
| Change Management | Does the Service Design cope with change? Do the designers understand the Change Management process used by the organization? | Have the service assets and components been built and tested against the corporate Change Management process? Has the emergency change process been tested? Is the impact assessment procedure for the CI type clearly defined and has it been tested? | Are the corporate Change Management process and standards used during deployment? | Is the operations team involved in the Change Management process; is it part of the sign-off and verification process? Does a member of the operations team attend the Change Management meetings? | As modifications are identified within this phase, does the team use the Change Management system to coordinate the changes? Does the optimization team understand the Change Management process? |
| Release and Deployment Management | Do the service designers understand the standards and tools used for releasing and deploying services? How will the design ensure that the new or changed service can be deployed into the environment in a simple and efficient way? | Has the service, application and infrastructure been built and tested in ways that ensure it can be released into the environment in a simple and efficient way? | Is the service being deployed in a manner that minimizes risks, such as a phased deployment? Has a remediation/back-out option been included in the release package or process for the service and its constituent components? | Does the release and deployment process ensure that deployment information is available to the operations teams? Do the Service Operations teams have access to release and deployment information even before the service or application is deployed into the live environment? | Do members of the CSI team understand the release process, and are they using this for planning the deployment of improvements? Is Release and Deployment Management involved in providing advice to the assessment process? |
| Security management | How does the design ensure that the service is designed with security in the forefront? | Is the build process following security best practice for this activity? | Can the service be deployed in a manner that meets organizational security standards and requirements? | Does the service support the ongoing and periodic checks that security management needs to complete while the service is in operational use? | |
| Incident management | Does the design facilitate simple creation of incidents when something goes wrong? Is the design compatible with the organizational incident management system? Does the design accommodate automatic logging and detection of incidents? | Is a simple creation-of-incidents process, for when something goes wrong, built into services and tested (e.g. notification from applications)? Has the compatibility with the organizational incident management system been tested? | Does the deployment use the incident management system for reporting issues and problems? Do the members of the deployment team have access to the incident management system so that they can record incidents and also view incidents that relate to the deployment? | Does the operations team have access to the incident management system and can it update information within this system? Does the operations team understand its responsibilities in dealing with incidents? Is the operations team provided with reports on how well it deals with incidents, and does it act on these? | Do members of the CSI team have access to the incident management system so that they can record incidents and also view incidents that may be addressed in optimization? |
| Problem management | How does the design facilitate the root cause analysis methods used within the organization? | Has the method of providing information to facilitate root cause analysis and problem management been tested? | Has a problem manager been appointed for this deployment and does the deployment team know who it is? | Does the operations team contribute to the problem management process, ideally by assisting with and facilitating root cause analysis? Does the operations team meet problem management staff regularly? Does the operations team see the weekly/monthly problem management report? | Is the optimization process being provided with information by problem management to incorporate into the assessment process? |
| Capacity management | Are the designers aware of the approach to capacity management used within the organization? Do they know how to measure operations and performance? Is modelling being used to ensure that the design meets capacity needs? | Has the service been built and tested to ensure that it meets the capacity requirements? Has the capacity information provided by the service been tested and verified? Are stress and volume characteristics built into the services and constituent applications? | Is capacity management involved in the deployment process so that it can monitor the capacity of the resources involved in the deployment? | Is capacity management information being monitored and reported on as this service is used, and is this information provided to capacity management? | Is capacity management feeding information into the optimization process? |
| Availability management | Does the design address the availability requirements of the service? Has the service been designed to fit in with the backup and recovery capabilities of the organization? | How has the service been built to address the availability requirements, and how has this been tested? What testing has been done to ensure that the service meets the backup and recovery capabilities of the organization? What happens when the service and underlying applications are under stress? | Is availability management monitoring the availability of the service, the applications being deployed and the rest of the technology infrastructure to ensure that the deployment is not affecting availability? How is the ability to back up and recover the service during deployment being dealt with? | How is the service's availability being measured, and is this information being fed back to the availability management function within the IT organization? | Does the assessment use the availability information to complete the proposal of modifications that are needed for the service? Is any improvement required in the service's ability to be backed up and recovered? |
| Service continuity management | How does the design meet the service continuity requirements of the organization? Will the design meet the needs of the business recovery process following a disaster? | Has the service been built to support the business recovery process following a disaster, and how has this been tested? | Will any changes be required to the business recovery process following a disaster if one should occur during or after the deployment of this service? | Is the business recovery process for the service tested regularly by operations? | What optimization is required in the business recovery process to meet the business needs? |
| Service level management | How does the design meet the SLA requirements of the organization? | Does the service meet the SLA and performance requirements, and has this been tested? | Is service level management aware of the deployment of this service? Does this service have an initial SLA for the deployment phase? Does the service affect the SLA requirements during deployment? | Is the SLA visible and understood by the operations team so that it appreciates how its running of the service affects the delivery of the SLA? Does operations see the weekly/monthly service level report? | Is service level management information available for inclusion in the optimization process? |
| Financial management | Does the design meet the financial requirements for this service? How does the design ensure that the final new or changed service will meet return on investment expectations? | Has the service been built to deliver financial information, and how is this being tested? | Is management accounting being done during the deployment so that the total cost of deployment can be included within the cost of ownership? | Does operations provide input into the financial information about the service? For example, if a service requires an operator to perform additional tasks at night, is this recorded? | Is financial information available to be included in the assessment process? |

| Table 4.12 Examples of Service Management manageability tests |

Operational Tests - Systems, Services

There will be many operational tests depending on the type of service. Typical tests include:

Load and stress - These tests establish if the new or changed service will perform to the required levels on the capacity likely to be available. The capacity elements may include any anticipated bottlenecks within the infrastructure that might be expected to restrict performance, including:

- Load and throughput

- Behaviour at the upper limits of system capability

- Network bandwidth

- Data storage

- Processing power or live memory

- Service desk resources - people and technology such as telephone lines and logging

- Available software licences/concurrent seats

- Support staff - both numbers and skills

- Training facilities, classrooms, trainers, CBT licences etc.

- Overnight batch processing timings, including backup tasks.
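Finding which of these capacity elements will restrict performance first can be sketched with a simple linear model: each resource supports capacity divided by per-transaction demand, and the smallest figure is the bottleneck. All resource names and numbers here are hypothetical (28,800 CPU-seconds per hour stands for eight cores, 180 service desk minutes per hour for three agents, and 0.125 minutes per transaction for one five-minute call per 40 transactions), and the model deliberately ignores queueing effects near saturation, which real load and stress tests exist to expose.

```python
def max_throughput(capacity: dict[str, float],
                   demand_per_txn: dict[str, float]) -> tuple[float, str]:
    """Return (maximum transactions per hour, limiting resource).

    Each resource can support capacity / demand transactions per hour; the
    smallest of those figures is the bottleneck. A simple linear model:
    queueing effects near saturation are ignored."""
    limits = {r: capacity[r] / d for r, d in demand_per_txn.items() if d > 0}
    if not limits:
        raise ValueError("no resource has a positive demand per transaction")
    bottleneck = min(limits, key=limits.get)
    return limits[bottleneck], bottleneck

if __name__ == "__main__":
    # Hypothetical hourly capacities and per-transaction demands.
    capacity = {"cpu_seconds": 28_800, "network_mb": 36_000, "service_desk_minutes": 180}
    demand = {"cpu_seconds": 2.0, "network_mb": 5.0, "service_desk_minutes": 0.125}
    limit, resource = max_throughput(capacity, demand)
    print(f"{limit:.0f} transactions/hour, limited by {resource}")
```

In this hypothetical case the service desk, not the technology, limits throughput to 1,440 transactions per hour, which is why people and support resources belong on the list of capacity elements alongside bandwidth and storage.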

Security - All services should be considered for their potential impact on relevant security concerns, and subsequently tested for their actual likely impact on security. Any service that has an anticipated security impact or exposes an anticipated security risk will have been assessed at design stage, and the requirement for security involvement built into the service package. Organizations should make reference to and may wish to seek compliance with ISO 27000 where security is a significant concern to their services.

Recoverability - Every significant change will have been assessed against the question 'If this change is made, will the Disaster Recovery (DR) plan need to be changed accordingly?' Notwithstanding that consideration earlier in the lifecycle, it is appropriate to test that the new or changed service is catered for within the existing (or suitably amended) DR plan. Typically, concerns identified during testing should be referred to the service continuity team and considered as active elements for future DR tests.

Regression Testing

Regression testing means 'repeating a test already run successfully, and comparing the new results with the earlier valid results'. On each iteration of true regression testing, all existing, validated tests are run, and the new results are compared with the already-achieved standards. Regression testing ensures that a new or changed service does not introduce errors into aspects of the services or IT infrastructure that previously worked without error. Simple examples of the errors that can be detected are software contention issues and hardware and network incompatibilities. Regression testing also applies to other elements such as Service Management process testing and measurement. In practice, it is the integrated concept of service testing - assessing whether the service will deliver the business benefit - that makes regression testing so important in modern organizations, and that will make it ever more important.
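The comparison at the heart of regression testing can be sketched as a check of a new run against the last validated results. This is an illustrative sketch: the result format (a mapping from hypothetical test ids to recorded results) is an assumption, and any real test tool will have its own.

```python
def regression_check(baseline: dict[str, object],
                     current: dict[str, object]) -> dict[str, list[str]]:
    """Compare a new run of the test suite with the last validated results.

    baseline: test id -> result accepted in the last validated run
    current:  test id -> result observed in this run
    """
    return {
        # Previously validated behaviour that has changed: candidate regressions.
        "regressions": [t for t in baseline if t in current and current[t] != baseline[t]],
        # Every existing, validated test should be re-run; flag any that were skipped.
        "not_run": [t for t in baseline if t not in current],
        # Tests with no baseline yet; once validated they extend the baseline.
        "new": [t for t in current if t not in baseline],
    }

if __name__ == "__main__":
    baseline = {"TC-1": "pass", "TC-2": "pass", "TC-3": 42}
    current = {"TC-1": "pass", "TC-2": "fail", "TC-4": "pass"}
    print(regression_check(baseline, current))
    # {'regressions': ['TC-2'], 'not_run': ['TC-3'], 'new': ['TC-4']}
```

Flagging tests that were not re-run matters as much as flagging changed results: a regression run that silently skips part of the validated suite is not true regression testing.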

4.5.5 Process Activities, Methods And Techniques

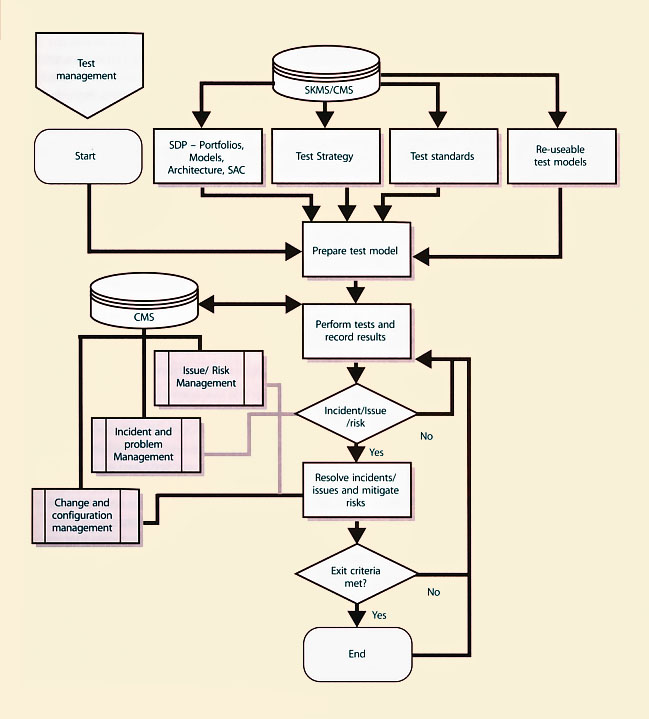

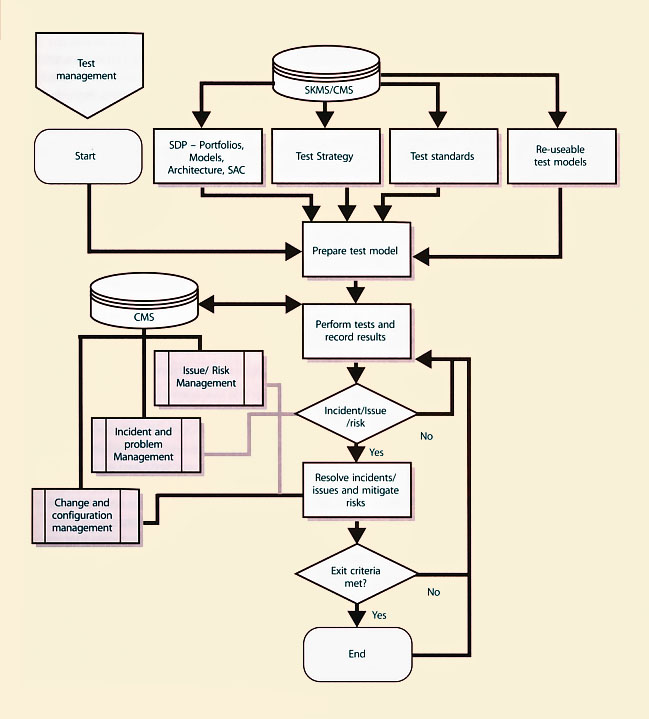

The testing process is shown schematically in Figure 4.32. The test activities are not undertaken in a strict sequence: several activities may be done in parallel, e.g. test execution begins before all the test design is complete. The activities are described below.


| Figure 4.32 Example of a validation and testing process |

Validation And Test Management

Test management includes the planning, control and reporting of activities through the test stages of Service Transition. These activities include:

- Planning the test resources

- Prioritizing and scheduling what is to be tested and when

- Management of incidents, problems, errors, non-conformances, risks and issues

- Checking that incoming known errors and their documentation are processed

- Monitoring progress and collating feedback from validation and test activities

- Management of incidents, problems, errors, non-conformances, risks and issues discovered during transition

- Consequential changes, to reduce errors going into production

- Capturing configuration baseline

- Test metrics collection, analysis, reporting and management.

Test management includes managing issues, mitigating risks and implementing changes identified from the testing activities as these can impose delays and create dependencies that need to be proactively managed.

Test metrics are used to measure the test process and manage and control the testing activities. They enable the test manager to determine the progress of testing, the earned value and the outstanding testing, and this helps the test manager to estimate when testing will be completed. Good metrics provide information for management decisions that are required for prioritization, scheduling and risk management. They also provide useful information for estimating and scheduling for future releases.
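A few of these test metrics, and a naive completion estimate, can be sketched as follows. The field names and the linear projection from the average execution rate are assumptions for illustration; an earned-value calculation proper would also weight test cases by planned effort, which this sketch does not attempt.

```python
from dataclasses import dataclass

@dataclass
class TestProgress:
    planned: int          # test cases in the test plan
    executed: int         # test cases run so far
    passed: int           # executed test cases that passed
    days_elapsed: float   # working days since test execution started

    def percent_executed(self) -> float:
        return 100 * self.executed / self.planned

    def pass_rate(self) -> float:
        return 100 * self.passed / self.executed if self.executed else 0.0

    def outstanding(self) -> int:
        return self.planned - self.executed

    def estimated_days_remaining(self) -> float:
        """Naive linear projection from the average execution rate so far."""
        if self.executed == 0 or self.days_elapsed <= 0:
            raise ValueError("no execution rate to project from yet")
        rate = self.executed / self.days_elapsed  # test cases per day
        return self.outstanding() / rate

if __name__ == "__main__":
    p = TestProgress(planned=400, executed=150, passed=135, days_elapsed=6)
    print(f"{p.percent_executed():.1f}% executed, {p.pass_rate():.0f}% passed, "
          f"{p.outstanding()} outstanding, ~{p.estimated_days_remaining():.0f} days to go")
```

With these hypothetical figures the output is "37.5% executed, 90% passed, 250 outstanding, ~10 days to go". A linear projection ignores re-tests after failures, so in practice the estimate should be revisited as the pass rate changes.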

Plan And Design Test

Test planning and design activities start early in the service lifecycle and include:

- Resourcing:

  - Hardware, networking, staff numbers and skills, and other capacity

  - Business/customer resources required, e.g. components or raw materials for production control services, cash for ATM services

  - Supporting services, including access, security, catering and communications

- Schedule of milestones, handover and delivery dates

- Agreed time for consideration of reports and other deliverables

- Point and time of delivery and acceptance

- Financial requirements - budgets and funding.
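Some of the planning items above can be recorded in a structured form and checked mechanically. The Python sketch below is illustrative only: the `ValidationTestPlan` class, the milestone named `"acceptance"` and the three checks are assumptions chosen for the example, not a required plan format.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ValidationTestPlan:
    """Hypothetical record of the planning items listed above."""
    resources: dict[str, float]         # resource -> estimated cost
    milestones: list[tuple[str, date]]  # (milestone, due date) in plan order
    budget: float                       # agreed funding for testing

    def validate(self) -> list[str]:
        """Return planning issues; an empty list means none were found."""
        issues = []
        dates = [due for _, due in self.milestones]
        if dates != sorted(dates):
            issues.append("milestones are not in chronological order")
        total = sum(self.resources.values())
        if total > self.budget:
            issues.append(f"resource estimate {total} exceeds budget {self.budget}")
        if not any(name == "acceptance" for name, _ in self.milestones):
            issues.append("no acceptance milestone is defined")
        return issues
```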

- Verify test plan and test design

Verify the test plans and test design to ensure that:

- The test model delivers adequate and appropriate test coverage for the risk profile of the service

- The test model covers the key integration aspects and interfaces, e.g. at the SPIs

- The test scripts are accurate and complete.
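The three verification checks above can be approximated as a coverage check over the test model. In this Python sketch the risk profile is expressed, as an assumption, as a minimum number of test cases per risk, and a script counts as complete only when it has steps and an expected result; the function and field names are hypothetical.

```python
from collections import Counter


def verify_test_design(risks: dict[str, int],
                       interfaces: set[str],
                       test_cases: dict[str, dict]) -> list[str]:
    """Return findings from verifying the test model.

    risks:      risk id -> minimum number of test cases required
    interfaces: interface ids (e.g. SPIs) that must be exercised
    test_cases: test id -> {"covers": set of ids, "steps": list, "expected": str}
    """
    findings = []
    coverage = Counter()
    for test_id, case in test_cases.items():
        coverage.update(case["covers"])  # count each risk/interface the case covers
        if not case.get("steps") or not case.get("expected"):
            findings.append(f"{test_id}: script incomplete")
    for risk, minimum in risks.items():
        if coverage[risk] < minimum:
            findings.append(f"{risk}: {coverage[risk]} of {minimum} required tests")
    for interface in sorted(interfaces):
        if coverage[interface] == 0:
            findings.append(f"{interface}: interface not tested")
    return findings
```

An empty result means the test model passed these particular checks; it does not prove that the tests themselves are correct.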

- Prepare Test Environment

Prepare the test environment using the services of the build and test environment resource and, where possible, the release and deployment processes (see paragraph 4.4.5.2). Capture a configuration baseline of the initial test environment.
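Capturing a configuration baseline amounts to taking a fixed, comparable snapshot of the test environment's configuration items. The Python sketch below assumes the CI attributes are available as JSON-serialisable dictionaries (a hypothetical export, not a specific CMS API). It fingerprints the snapshot and reports drift, which can also help confirm at clean-up that the environment has been returned to its initial state.

```python
import hashlib
import json
from datetime import datetime, timezone


def capture_baseline(config_items: dict[str, dict]) -> dict:
    """Snapshot the test environment's CIs with a fingerprint of their state."""
    canonical = json.dumps(config_items, sort_keys=True)  # stable serialisation
    return {
        "captured_at": datetime.now(timezone.utc).isoformat(),
        "items": json.loads(canonical),  # independent copy of the CI attributes
        "fingerprint": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
    }


def drift(baseline: dict, current: dict[str, dict]) -> list[str]:
    """List CIs added, removed or changed since the baseline was captured."""
    before = baseline["items"]
    changes = []
    for ci in sorted(before.keys() | current.keys()):
        if ci not in current:
            changes.append(f"removed: {ci}")
        elif ci not in before:
            changes.append(f"added: {ci}")
        elif before[ci] != current[ci]:
            changes.append(f"changed: {ci}")
    return changes
```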

- Perform Tests

Carry out the tests using manual or automated techniques and procedures. Testers must record their findings during the tests. If a test fails, the reasons for failure must be fully documented. Testing should continue according to the test plans and scripts, if at all possible. When part of a test fails, the incidents or issues should be resolved or documented (e.g. as a known error) and the appropriate re-tests should be performed by the same tester.
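The recording rules in the paragraph above, which require documented failure reasons and re-tests by the same tester, can be enforced by a simple execution log. This Python sketch uses hypothetical `RunRecord` and `ExecutionLog` types; it illustrates the rules and does not model any particular test management tool.

```python
from dataclasses import dataclass, field


@dataclass
class RunRecord:
    """One execution of a test case (hypothetical structure)."""
    test_id: str
    tester: str
    passed: bool
    failure_reason: str = ""


@dataclass
class ExecutionLog:
    runs: list[RunRecord] = field(default_factory=list)

    def record(self, run: RunRecord) -> None:
        # A failed test is only accepted with a documented reason for failure.
        if not run.passed and not run.failure_reason.strip():
            raise ValueError(f"{run.test_id}: reason for failure must be documented")
        self.runs.append(run)

    def record_retest(self, run: RunRecord) -> None:
        # Re-tests must be performed by the tester who ran the original test.
        earlier = [r for r in self.runs if r.test_id == run.test_id]
        if not earlier:
            raise ValueError(f"{run.test_id}: no original run to re-test")
        if earlier[0].tester != run.tester:
            raise ValueError(f"{run.test_id}: re-test must be run by {earlier[0].tester}")
        self.record(run)

    def latest_status(self) -> dict[str, bool]:
        # Later runs overwrite earlier ones, so each test shows its latest result.
        return {r.test_id: r.passed for r in self.runs}
```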

An example of the test execution activities is shown in Figure 4.33. The deliverables from testing are:

- Actual results showing proof of testing with cross references to the test model, test cycles and conditions

- Problems, errors, issues, non-conformances and risks remaining to be resolved

- Resolved problems/known errors and related changes

- Sign-off.

- Evaluate Exit Criteria and Report

The actual results are compared to the expected results. The results may be interpreted in terms of pass/fail, risk to the business or service provider, or a change in a projected value, e.g. a higher cost to deliver the intended benefits.

To produce the report, gather the test metrics and summarize the results of the tests. Examples of exit criteria are:

- The service, with its underlying applications and technology infrastructure, enables the business users to perform all aspects of the function as defined.

- The service meets the quality requirements.

- Configuration baselines are captured into the CMS.
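Comparing actual with expected results and checking exit criteria like those above can be sketched as follows. The pass-rate and high-risk thresholds are hypothetical placeholders; in practice they would come from the agreed acceptance criteria, and criteria such as "meets the quality requirements" need far richer evidence than a single pass rate.

```python
def evaluate_exit(results: list[dict],
                  baseline_in_cms: bool,
                  min_pass_rate: float = 0.95,
                  max_high_risk_failures: int = 0) -> dict:
    """Compare actual with expected results and check example exit criteria.

    Each result is {"test_id", "expected", "actual", "risk"}; the default
    thresholds are assumptions for this sketch.
    """
    failed = [r for r in results if r["actual"] != r["expected"]]
    pass_rate = (len(results) - len(failed)) / len(results)
    high_risk = [r["test_id"] for r in failed if r["risk"] == "high"]
    return {
        "pass_rate": pass_rate,
        "failed": [r["test_id"] for r in failed],
        "high_risk_failures": high_risk,
        "exit_criteria_met": (pass_rate >= min_pass_rate
                              and len(high_risk) <= max_high_risk_failures
                              and baseline_in_cms),
    }
```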

- Test Clean Up and Closure

Ensure that the test environments are cleaned up or initialized. Review the testing approach and identify improvements to feed into design/build and buy/build decision parameters, and into future testing policy and procedures.

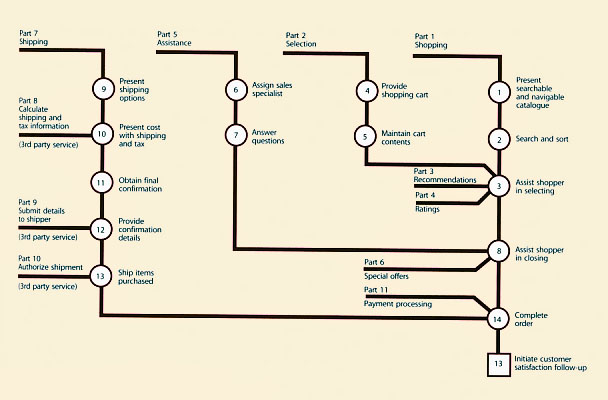

4.5.6 Trigger, Input And Outputs, And Inter-process Interfaces

|

| Figure 4.33 Example of perform test activities |

4.5.6.1 Trigger

The trigger for testing is a scheduled activity on a release plan, test plan or quality assurance plan.

4.5.6.2 Inputs

The key inputs to the process are:

- The service package - This comprises a core service package and re-usable components, many of which are themselves services, e.g. supporting services. It defines the service's utilities and warranties that are delivered through the correct functioning of the particular set of identified service assets. It maps the demand patterns for service and user profiles to SLPs.

- SLP - One or more SLPs that provide a definitive level of utility or warranty from the perspective of outcomes, assets and patterns of business activity (PBA) of customers.

- Service provider interface definitions - These define the interfaces to be tested at the boundaries of the service being delivered, e.g. process interfaces, organizational interfaces.

- The Service Design package - This defines the agreed requirements of the service, expressed in terms of the service model and Service Operations plan. It includes:

  - Operation models (including support resources, escalation procedures and critical situation handling procedures)

  - Capacity/resource model and plans - combined with performance and availability aspects

  - Financial/economic/cost models (with TCO, TCU)

  - Service Management model (e.g. integrated process model as in ISO/IEC 20000)

  - Design and interface specifications.

- Release and deployment plans - These define the order in which release units will be deployed, built and installed.

- Acceptance Criteria - These exist at all levels at which testing and acceptance are foreseen.

- RFCs - These instigate required changes to the environment within which the service functions or will function.

4.5.6.3 Outputs

The direct output from testing is the report delivered to service evaluation (see section 4.6). This sets out:

- Configuration baseline of the testing environment

- Testing carried out (including options chosen and constraints encountered)

- Results from those tests

- Analysis of the results, e.g. comparison of actual results with expected results, risks identified during testing activities.

After the service has been in use for a reasonable time there should be sufficient data to perform an evaluation of the actual vs predicted service capability and performance. If the evaluation is successful, an evaluation report is sent to Change Management with a recommendation to promote the service release out of early life support and into normal operation.
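The comparison of actual against predicted capability and performance can be sketched as a per-metric check that yields a recommendation. This Python example is a simplification built on stated assumptions. A single relative tolerance (5% here) is hypothetical, since an availability target would normally need a much tighter, absolute margin. The metric names are also illustrative, and the final recommendation still goes to Change Management for a decision.

```python
def early_life_evaluation(predicted: dict[str, float],
                          actual: dict[str, float],
                          lower_is_better: set[str],
                          tolerance: float = 0.05):
    """Compare actual with predicted service performance.

    Returns a recommendation and the metrics that fell short as
    {metric: (predicted, actual)}. The relative tolerance is an assumption.
    """
    shortfalls = {}
    for metric, target in predicted.items():
        value = actual[metric]
        if metric in lower_is_better:          # e.g. response time, cost
            acceptable = value <= target * (1 + tolerance)
        else:                                  # e.g. availability, throughput
            acceptable = value >= target * (1 - tolerance)
        if not acceptable:
            shortfalls[metric] = (target, value)
    if shortfalls:
        return "extend early life support", shortfalls
    return "recommend promotion to normal operation", shortfalls
```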

Other outputs include:

- Updated data, information and knowledge to be added to the service knowledge management system, e.g. errors and workarounds, testing techniques, analysis methods

- Test incidents, problems and error records

- Improvement ideas for Continual Service Improvement to address potential improvements in any area that impacts on testing:

  - To the testing process itself

  - To the nature and documentation of the Service Design outputs

  - To third party relationships, suppliers of equipment or services, partners (co-suppliers to end customers), users and customers or other stakeholders.

4.5.6.4 Interfaces To Other Lifecycle Stages

Testing supports all of the release and deployment steps within Service Transition.

Although this chapter focuses on the application of testing within the Service Transition phase, the test strategy will ensure that the testing process works with all stages of the lifecycle:

- Working with Service Design to ensure that designs are inherently testable, and providing positive support in achieving this; examples range from including self-monitoring within hardware and software, through the re-use of previously tested and known service elements, to ensuring rights of access to third party suppliers so that delivered service elements can easily be inspected and observed

- Working closely with CSI to feed failure information and improvement ideas resulting from testing exercises

- Service Operation will use maintenance tests to ensure the continued efficacy of services; these tests will require maintenance to cope with innovation and change in environmental circumstances

- Service Strategy should accommodate testing in terms of adequate funding, resource, profile etc.

4.5.7 Information Management

The nature of IT Service Management is repetitive, and this ability to benefit from re-use is recognized in the suggested use of transition models. Testing benefits greatly from re-use and to this end it is sensible to create and maintain a library of relevant tests and an updated and maintained data set for applying and performing tests. The test management group within an organization should take responsibility for creating, cataloguing and maintaining test scripts, test cases and test data that can be re-used.
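A library of re-usable tests needs, at minimum, a way to catalogue test cases and find those relevant to a new or changed service. The Python sketch below indexes test cases by tag. The `ReusableTestCatalogue` class and the tagging scheme are assumptions for illustration; a real library would also version the scripts and link them to test data and CIs.

```python
from collections import defaultdict


class ReusableTestCatalogue:
    """Hypothetical catalogue of re-usable test cases, indexed by tag."""

    def __init__(self) -> None:
        self._scripts: dict[str, str] = {}
        self._by_tag = defaultdict(set)  # tag -> set of test case ids

    def add(self, case_id: str, script: str, tags: set[str]) -> None:
        self._scripts[case_id] = script
        for tag in tags:
            self._by_tag[tag].add(case_id)

    def script(self, case_id: str) -> str:
        return self._scripts[case_id]

    def find(self, *tags: str) -> list[str]:
        """Return ids of test cases that carry every given tag."""
        if not tags:
            return sorted(self._scripts)
        matches = [self._by_tag.get(tag, set()) for tag in tags]
        return sorted(set.intersection(*matches))
```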

Similarly, the use of automated testing tools (Computer Aided Software Testing - CAST) is becoming ever more central to effective testing in complex software environments. Equivalently, standard and automated hardware testing approaches are fast and effective.

Test Data

However well a test has been designed, it relies on the relevance of the data used to run it. This applies most obviously to software testing, but equivalent concerns relate to the environments within which hardware, documentation etc. are tested. Testing electrical equipment in a protected environment, with a smoothed power supply and dust, temperature and humidity control, will not be a valuable test if the equipment will be used in a normal office.

Test Environments

Test environments must be actively maintained and protected. For any significant change to a service, the question should be asked, as it is for the continued relevance of the continuity and capacity plans: 'If this change goes ahead, will there need to be a consequential impact to the test data?' If so, the change may need to include updating the test data. The dependency of a service, or service element, on test data or a test environment will be evident from the SKMS, via the records and relationships held within the CMS. Outcomes from this question include:

- Consequential updating of the test data

- A new separate set of data or new test environment, since the original is still required for other services

- Redundancy of the test data or environment - since the change will allow testing within another existing test environment, with or without modification to that data/environment (this may in fact be the justification behind a perfective change - to reduce testing costs)

- Acceptance of a lower level of testing, since the test data/environment cannot be updated to deliver equivalent test coverage for the changed service.
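Answering the test data question from CMS relationships is essentially a dependency traversal. This Python sketch assumes a hypothetical export of the CMS in which each CI maps to the CIs that depend on it, plus a CI type lookup. It walks the relationships breadth-first from the changed CI and returns the test data and test environments that may need attention.

```python
from collections import deque

TEST_ASSET_TYPES = {"test data", "test environment"}  # assumed CI type names


def affected_test_assets(dependants: dict[str, list[str]],
                         ci_type: dict[str, str],
                         changed_ci: str) -> list[str]:
    """Return the test data and test environments that depend, directly or
    indirectly, on the changed CI."""
    seen = {changed_ci}
    queue = deque([changed_ci])
    while queue:                                   # breadth-first traversal
        ci = queue.popleft()
        for dependant in dependants.get(ci, []):
            if dependant not in seen:              # also guards against cycles
                seen.add(dependant)
                queue.append(dependant)
    return sorted(ci for ci in seen if ci_type.get(ci) in TEST_ASSET_TYPES)
```

Each asset returned would then be assessed against the outcomes listed above: update it, create a separate copy, retire it, or accept reduced coverage.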

Maintenance of test data should be an active exercise and should address relevant issues including:

- Separation from any live data, and steps to ensure that it cannot be mistaken for live data when being used, and vice versa (there are many real-life examples of live data being copied and used as test data and being the basis for business decisions)

- Data protection regulations - when live data is used to generate a test database, if information can be traced to individuals it may well be covered by data protection legislation that, for example, may forbid its transportation between countries

- Backup of test data, and restoration to a known baseline for enabling repeatable testing; this also applies to initiation conditions for hardware tests that should be baselined.
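The three maintenance concerns above can be illustrated together. The Python sketch makes three moves. First, it derives test records from a live extract without modifying the extract. Second, it replaces assumed personal-data fields with a keyed hash and marks every record as test data. Third, it keeps a baseline copy that can be restored for repeatable tests. The field names are hypothetical, and pseudonymised data may still be treated as personal data under data protection legislation, so this does not by itself settle compliance.

```python
import copy
import hashlib
import hmac

# Hypothetical field names that identify individuals in the live extract.
PERSONAL_FIELDS = ("name", "email")


def make_test_records(live_records: list[dict], secret: bytes) -> list[dict]:
    """Derive test records from a live extract without altering the extract."""
    test_records = []
    for live in live_records:
        record = dict(live)  # copy so the live records are never modified
        for field_name in PERSONAL_FIELDS:
            if field_name in record:
                # Keyed hash: consistent across runs; only key holders can reproduce it.
                digest = hmac.new(secret, str(record[field_name]).encode("utf-8"),
                                  hashlib.sha256).hexdigest()[:12]
                record[field_name] = f"TEST-{digest}"
        record["is_test_data"] = True  # marker so it cannot be mistaken for live data
        test_records.append(record)
    return test_records


class BaselinedDataSet:
    """Test data that can be restored to a known baseline for repeatable tests."""

    def __init__(self, records: list[dict]) -> None:
        self._baseline = copy.deepcopy(records)
        self.records = copy.deepcopy(records)

    def reset(self) -> None:
        self.records = copy.deepcopy(self._baseline)
```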

An established test database can also be used as a safe and realistic training environment for a service.

4.5.8 Key Performance Indicators And Metrics

4.5.8.1 Primary (of Value To The Business/customers)

The business will judge testing performance as a component of the Service Design and transition stages of the service lifecycle. Specifically, the effectiveness of testing in delivering to the business can be judged through:

- Early validation that the service will deliver the predicted value that enables early correction

- Reduction in the impact of incidents and errors in live environment that are attributable to newly transitioned services

- More effective use of resource and involvement from the customer/business

- Reduced delays in testing that impact the business

- Increased mutual understanding of the new or changed service

- Clear understanding of roles and responsibilities associated with the new or changed service between the customers, users and service provider

- Cost and resources required from user and customer involvement (e.g. user acceptance testing).

The business will also be concerned with the economy of the testing process - in terms of:

- Test planning, preparation, execution rates

- Incident, problem, event rates

- Issue and risk rate

- Problem resolution rate

- Resolution effectiveness rate

- Stage containment - analysis by service lifecycle stage

- Repair effort percentage

- Problems and changes by service asset or CI type

- Late changes by service lifecycle stage

- Inspection effectiveness percentage

- Residual risk percentage

- Inspection and testing return on investment (ROI)

- Cost of unplanned and unbudgeted overtime to the business

- Cost of fixing errors in live operation compared to fixing errors early in the lifecycle (e.g. the costs can be £10 to fix an error in Service Design and £10,000 to fix the error if it reaches production)

- Operational cost improvements associated with reducing errors in new or changed services.
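Stage containment and the cost of fixing errors late can be computed from records of where each error was introduced and where it was found. In this Python sketch, the £10 (design) and £10,000 (production) figures are the illustrative ones quoted above. The build and test figures, the stage names and the record format are assumptions.

```python
# Cost to fix one error (GBP), by the stage in which it is found.
# Design and production figures are the illustrative ones quoted above;
# build and test figures are assumptions for this sketch.
COST_TO_FIX = {"design": 10, "build": 100, "test": 1_000, "production": 10_000}
STAGES = list(COST_TO_FIX)


def stage_containment(defects: list[tuple[str, str]]) -> dict[str, float]:
    """Percentage of errors introduced in each stage that were also found in it.

    defects: (stage introduced, stage detected) pairs.
    """
    result = {}
    for stage in STAGES:
        detected = [found for introduced, found in defects if introduced == stage]
        if detected:
            result[stage] = 100.0 * detected.count(stage) / len(detected)
    return result


def cost_of_fixing(defects: list[tuple[str, str]]) -> int:
    """Total repair cost, charging each error at the stage where it was found."""
    return sum(COST_TO_FIX[found] for _, found in defects)
```

Comparing `cost_of_fixing` for the actual detection stages with the cost had every error been caught where it was introduced gives a rough figure for the cost of escaped errors.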

4.5.8.2 Secondary (internal)

The testing function and process itself must strive to be effective and efficient, and so measures of its effectiveness and costs need to be taken. These include:

- Effort and cost to set up a testing environment

- Effort required to find defects - i.e. number of defects (by significance, type, category etc.) compared with testing resource applied

- Reduction of repeat errors - feedback from testing ensures that corrective action within design and transition (through CSI) prevents mistakes from being repeated in subsequent releases or services

- Reduced error/defect rate in later testing stages or production

- Re-use of testing data

- Percentage of incidents linked to errors that were detected during testing and released into live operation

- Percentage of errors at each lifecycle stage

- Number and percentage of errors that could have been discovered in testing
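Several of these internal measures reduce to simple ratios over error counts and testing effort. The Python sketch below assumes errors are counted per lifecycle stage, with live errors recorded under `"production"`, and that someone has judged how many live errors could have been found in testing. The stage names and inputs are hypothetical.

```python
def secondary_metrics(errors_by_stage: dict[str, int],
                      live_errors_testable: int,
                      test_effort_hours: float) -> dict:
    """Compute some of the internal measures listed above.

    errors_by_stage: errors found in each stage, with live errors under "production"
    live_errors_testable: live errors judged to have been discoverable in testing
    """
    total = sum(errors_by_stage.values())
    if total == 0:
        raise ValueError("no errors recorded")
    live = errors_by_stage.get("production", 0)
    before_live = total - live
    return {
        "pct_errors_by_stage": {stage: 100.0 * n / total
                                for stage, n in errors_by_stage.items()},
        "pct_found_before_live": 100.0 * before_live / total,
        "pct_live_errors_testable": (100.0 * live_errors_testable / live) if live else 0.0,
        # Effort to find defects: hours of testing per error found before live.
        "hours_per_error_found": (test_effort_hours / before_live) if before_live else float("inf"),
    }
```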