
The goal of evaluation is to set stakeholder expectations correctly and provide effective and accurate information to Change Management to make sure changes that adversely affect service capability and introduce risk are not transitioned unchecked.

The objective is to:

Principles

The following principles shall guide the execution of the evaluation process:

| Term | Function/Means |
|---|---|
| Service change | A change to an existing service or the introduction of a new service; the service change arrives into service evaluation and qualification in the form of a Request for Change (RFC) from Change Management |
| Service Design Package | Defines the service and provides a plan of service changes for the next period (e.g. the next 12 months). Of particular interest to service evaluation are the Acceptance Criteria and the predicted performance of a service with respect to a service change |
| Performance | The utilities and warranties of a service |
| Performance model | A representation of the performance of a service |
| Predicted performance | The expected performance of a service following a service change |
| Actual performance | The performance achieved following a service change |
| Deviations report | A report of the difference between predicted and actual performance |
| Risk | A function of the likelihood and negative impact of a service not performing as expected |
| Countermeasures | The mitigation that is implemented to reduce risk |
| Test plan and results | The test plan is a response to an impact assessment of the proposed service change. Typically the plan will specify how the change will be tested; what records will result from testing and where they will be stored; who will approve the change; and how it will be ensured that the change and the service(s) it affects will remain stable over time. The test plan may include a qualification plan and a validation plan if the change affects a regulated environment. The results represent the actual performance following implementation of the change |
| Residual risk | The remaining risk after countermeasures have been deployed |
| Service capability | The ability of a service to perform as required |
| Capacity | An organization's ability to maintain service capability under any predefined circumstances |
| Constraint | Limits on an organization's capacity |
| Resource | The normal requirements of an organization to maintain service capability |
| Evaluation plan | The outcome of the evaluation planning exercise |
| Evaluation report | A report generated by the evaluation function, which is passed to Change Management and which comprises: |

Table 4.13 Key terms that apply to the service evaluation process

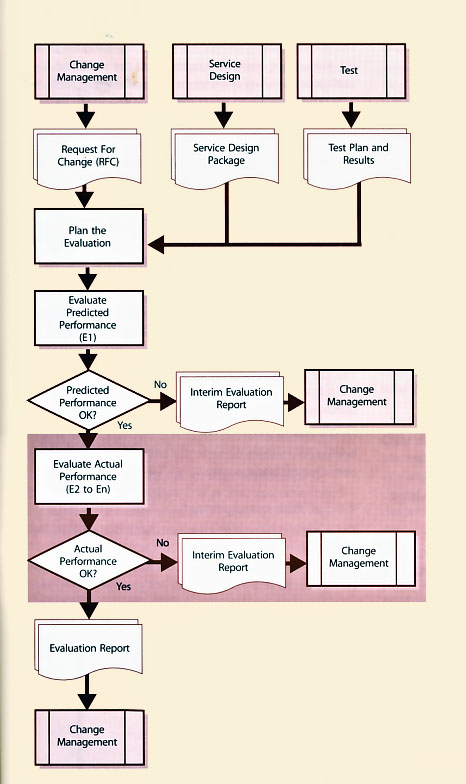

Figure 4.34 Evaluation process

Generally speaking, we would expect the intended effects of a change to be beneficial, and they should match the Acceptance Criteria. Unintended effects are harder to predict: they are often not seen until the pilot stage or until the service change is in production, and sometimes not even then. They are difficult to measure, frequently ignored, and very often not beneficial to the business, for example through their impact on other services, on customers and users of the service, or through network overloading.

The change documentation should make clear what the intended effect of the change will be and the specific measures that should be used to determine the effectiveness of that change. If either is in any way unclear or ambiguous, the evaluation should cease and a recommendation not to proceed should be forwarded to Change Management. Even some deliberately designed changes may be detrimental to some elements of the service. For example, introducing SOX-compliant procedures delivers the benefit of legal compliance but also introduces extra work steps and costs.
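The stop rule above can be sketched as a simple gate run before evaluation starts. This is a minimal sketch under stated assumptions: `ChangeDocumentation`, `documentation_is_complete` and `pre_evaluation_check` are hypothetical names, and the check can only confirm that an intended effect and at least one effectiveness measure are stated. Judging whether they are ambiguous still needs a human reviewer.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeDocumentation:
    """Hypothetical summary of what an RFC states about the change."""
    intended_effect: str
    # Specific measures used to determine whether the change was effective.
    effectiveness_measures: list[str] = field(default_factory=list)


def documentation_is_complete(doc: ChangeDocumentation) -> bool:
    """True only if an intended effect and at least one non-blank measure are stated.

    This checks presence only; ambiguity must still be judged by a reviewer.
    """
    has_effect = bool(doc.intended_effect.strip())
    has_measure = any(m.strip() for m in doc.effectiveness_measures)
    return has_effect and has_measure


def pre_evaluation_check(doc: ChangeDocumentation) -> str:
    """Cease evaluation and recommend not proceeding if the documentation is incomplete."""
    if not documentation_is_complete(doc):
        return "cease evaluation: recommend not to proceed"
    return "continue evaluation"


example = ChangeDocumentation("Reduce login latency", ["95th percentile login time under 2 s"])
print(pre_evaluation_check(example))  # continue evaluation
```

In practice the "recommend not to proceed" outcome would be forwarded to Change Management rather than simply returned.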

| Factor | Evaluation of Service Design |
|---|---|
| S - Service provider capability | The ability of a service provider or service unit to perform as required. |
| T - Tolerance | The ability or capacity of a service to absorb the service change or release. |
| O - Organizational setting | The ability of an organization to accept the proposed change. For example, is appropriate access available for the implementation team? Have all existing services that would be affected by the change been updated to ensure smooth transition? |
| R - Resources | The availability of appropriately skilled and knowledgeable people, sufficient finances, infrastructure, applications and other resources necessary to run the service following transition. |
| M - Modelling and measurement | The extent to which the predictions of behaviour generated from the model match the actual behaviour of the new or changed service. |
| P - People | The people within a system and the effect of change on them. |
| U - Use | Will the service be fit for use? The ability to deliver the warranties, e.g. is it continuously available, is there enough capacity, will it be secure enough? |
| P - Purpose | Will the new or changed service be fit for purpose? Can the required performance be supported? Will the constraints be removed as planned? |

Table 4.14 Factors for considering the effects of a service change

Where the predicted performance or its associated risk is unacceptable, the interim evaluation report includes the outcome of the risk assessment and/or the outcome of the predicted performance versus Acceptance Criteria, together with a recommendation to reject the service change in its current form. Evaluation activities cease at this point pending a decision from Change Management.

Where the actual performance or its associated risk is unacceptable, the interim evaluation report includes the outcome of the risk assessment and/or the outcome of the actual performance versus Acceptance Criteria, together with a recommendation to remediate the service change. Evaluation activities cease at this point pending a decision from Change Management.
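The two interim outcomes can be summarized as a small routing function. This is a minimal sketch, assuming each stage has already produced a yes/no judgement on performance against the Acceptance Criteria and on risk; `Recommendation`, `interim_outcome` and the stage labels `"predicted"` and `"actual"` are hypothetical.

```python
from enum import Enum


class Recommendation(Enum):
    CONTINUE = "continue evaluation"
    REJECT = "reject the service change in its current form"
    REMEDIATE = "remediate the service change"


def interim_outcome(stage: str, performance_acceptable: bool, risk_acceptable: bool) -> dict:
    """Route the result of one evaluation stage.

    stage: "predicted" (before implementation) or "actual" (after implementation).
    """
    if stage not in ("predicted", "actual"):
        raise ValueError(f"unknown stage: {stage!r}")
    if performance_acceptable and risk_acceptable:
        rec = Recommendation.CONTINUE
    elif stage == "predicted":
        rec = Recommendation.REJECT      # not yet implemented: reject as proposed
    else:
        rec = Recommendation.REMEDIATE   # already implemented: remediate
    return {
        "stage": stage,
        "recommendation": rec,
        # An interim report halts evaluation until Change Management decides.
        "halt_pending_change_management": rec is not Recommendation.CONTINUE,
    }
```

The asymmetry between the two stages reflects the text: before implementation the change can simply be rejected, whereas after implementation the recommendation is to remediate.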

A risk occurs when a threat can exploit a weakness. The likelihood of a threat exploiting a weakness, and the impact if it does, are the fundamental factors in determining risk.

The risk management formula is simple but very powerful:

Risk = Likelihood × Impact
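As a worked example with made-up figures: if the likelihood of a threat exploiting a weakness is 0.25 and the impact is 40,000 (in some monetary unit), the risk is 0.25 × 40,000 = 10,000. If a change makes the business more dependent on the service so that the impact rises to 100,000 while the likelihood is unchanged, the risk rises to 25,000. The sketch below assumes likelihood is expressed as a probability between 0 and 1; the function name `risk` is hypothetical.

```python
def risk(likelihood: float, impact: float) -> float:
    """Risk = Likelihood x Impact, with likelihood given as a probability in [0, 1]."""
    if not 0.0 <= likelihood <= 1.0:
        raise ValueError("likelihood must be between 0 and 1")
    if impact < 0:
        raise ValueError("impact must be non-negative")
    return likelihood * impact


before = risk(0.25, 40_000)   # risk before the service change
after = risk(0.25, 100_000)   # greater dependence raises the impact
print(before, after)          # 10000.0 25000.0
```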

Introducing new threats and weaknesses increases the likelihood of a threat exploiting a weakness. Placing greater dependence on a service or component increases the impact if an existing threat exploits an existing weakness within the service. These are just two examples of how risk may increase as a result of a service change.

The evaluation of a proposed service change must therefore assess both the existing risks within the service and the risks predicted to follow implementation of the change.

If the risk level has increased, the second stage of risk management is used to mitigate the risk. In the examples given above, mitigation may include eliminating a threat or weakness, and using disaster recovery and backup techniques to increase the resilience of a service on which the organization has become more dependent.

Following mitigation the risk level is re-assessed and compared with the original. This second assessment and any subsequent assessments are in effect determining residual risk - the risk that remains after mitigation. Assessment of residual risk and associated mitigation continues to cycle until risk is managed down to an acceptable level.

The guiding principle here is that the risk level, whether from the initial risk assessment or from any residual risk assessment, must be equal to or less than the original risk prior to the service change. If it is not, evaluation will recommend rejection of the proposed service change, or back-out of an implemented service change.
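The assess, mitigate and re-assess cycle, together with the guiding principle that residual risk must not exceed the original risk, can be sketched as a loop. This is an illustrative sketch, not a prescribed algorithm: countermeasures are modelled as hypothetical functions that map a risk level to a reduced one, and `manage_risk` and `halve` are invented names.

```python
from typing import Callable

# A countermeasure takes the current risk level and returns the reduced level.
Countermeasure = Callable[[float], float]


def manage_risk(
    original_risk: float,
    predicted_risk: float,
    countermeasures: list[Countermeasure],
    implemented: bool = False,
) -> tuple[float, str]:
    """Apply countermeasures until residual risk <= original risk, or run out.

    original_risk: risk before the service change.
    predicted_risk: risk assessed for the changed service, before mitigation.
    implemented: whether the service change is already in place.
    """
    residual = predicted_risk
    if residual <= original_risk:
        return residual, "accept"
    for countermeasure in countermeasures:
        residual = countermeasure(residual)   # deploy a countermeasure
        if residual <= original_risk:         # re-assess against the original risk
            return residual, "accept"
    # Risk could not be managed down to the original level.
    return residual, "recommend back-out" if implemented else "recommend rejection"


def halve(level: float) -> float:
    """Hypothetical countermeasure that halves the current risk level."""
    return level * 0.5


print(manage_risk(10_000, 25_000, [halve, halve]))  # (6250.0, 'accept')
```

Here one countermeasure leaves a residual risk of 12,500, still above the original 10,000, so a second is deployed before the change is acceptable.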

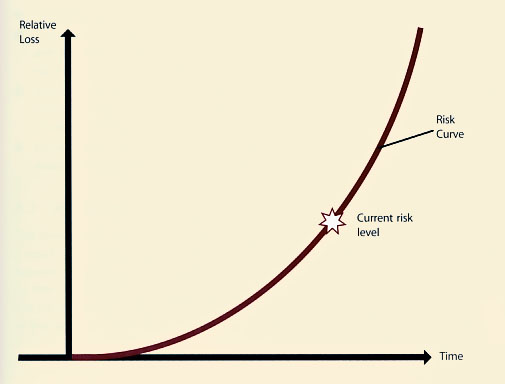

The risk representation recommended here takes a fundamentally different approach. Building on the work of Drake (2005a, 2005b), this approach recognizes that risks almost always grow exponentially over time if left unmanaged, and that a risk that will not cause a loss is probably not worth worrying about too much.

It is therefore proposed that a stronger risk representation is the one shown in Figure 4.35. Principally, this representation is intended to promote debate and agreement among stakeholders: is the risk positioned correctly in terms of time and potential or actual loss; could mitigation have been deployed later (e.g. more economically); should it have been deployed earlier (e.g. for better protection); and so on.
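To make the time dimension concrete, the sketch below models an unmanaged risk whose potential loss grows exponentially, as this approach assumes, and asks when that loss would reach a tolerance threshold. The model, its parameters (`initial_loss`, `growth_rate`, `threshold`) and the function names are hypothetical illustrations, not the method of Drake or of Figure 4.35. A figure like this is only a starting point for the stakeholder debate about whether mitigation could be deployed later or should be deployed earlier.

```python
import math


def potential_loss(initial_loss: float, growth_rate: float, t: float) -> float:
    """Unmanaged potential loss at time t, assuming exponential growth."""
    return initial_loss * math.exp(growth_rate * t)


def time_to_threshold(initial_loss: float, growth_rate: float, threshold: float) -> float:
    """Time at which unmanaged potential loss first reaches the threshold.

    Solves initial_loss * exp(growth_rate * t) = threshold for t.
    """
    if initial_loss <= 0:
        raise ValueError("initial_loss must be positive")
    if threshold <= initial_loss:
        return 0.0          # already at or beyond the threshold
    if growth_rate <= 0:
        return math.inf     # a non-growing risk never reaches it
    return math.log(threshold / initial_loss) / growth_rate


# A loss that doubles every month reaches eight times its size after 3 months.
monthly_doubling = math.log(2)
print(round(time_to_threshold(1_000, monthly_doubling, 8_000), 6))  # 3.0
```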

Deviations - Predicted Vs Actual Performance

Once the service change passes the evaluation of predicted performance and actual performance, essentially as standalone evaluations, a comparison of the two is carried out. To have reached this point it will have been determined that predicted performance and actual performance are acceptable, and that there are no unacceptable risks. The output of this activity is a deviations report. For each factor in Table 4.14 the report states the extent of any deviation between predicted and actual performance.
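One way to picture the deviations report is as a per-factor comparison across the eight factors of Table 4.14. This sketch assumes, hypothetically, that each factor has been given a numeric score for predicted and for actual performance on a common scale where higher is better; the scoring scheme and the name `deviations_report` are illustrative only.

```python
FACTORS = (
    "Service provider capability",
    "Tolerance",
    "Organizational setting",
    "Resources",
    "Modelling and measurement",
    "People",
    "Use",
    "Purpose",
)


def deviations_report(predicted: dict[str, float], actual: dict[str, float]) -> dict[str, float]:
    """Return actual minus predicted score for every factor.

    A negative value means the service performed worse than predicted for that factor.
    """
    missing = [f for f in FACTORS if f not in predicted or f not in actual]
    if missing:
        raise KeyError(f"no predicted or actual score for: {missing}")
    return {f: actual[f] - predicted[f] for f in FACTORS}


predicted = {f: 4.0 for f in FACTORS}
actual = dict(predicted, Tolerance=3.0)  # absorbed the change less well than predicted
report = deviations_report(predicted, actual)
print({f: d for f, d in report.items() if d != 0})  # {'Tolerance': -1.0}
```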

Figure 4.35 Example risk profile

Test Plan and Results

The testing function provides the means for determining the actual performance of the service following implementation of a service change. Testing provides the service evaluation function with the test plan and a report on the results of any testing. The actual results are also made available to service evaluation. These are evaluated and used as described in section 4.6.5.8.

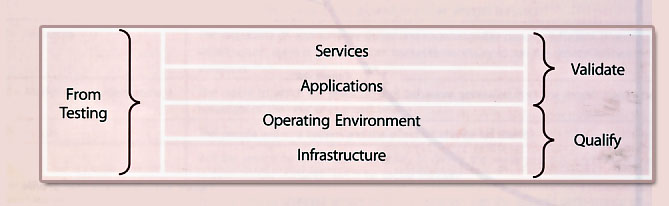

In some circumstances it is necessary to provide a statement of qualification and/or validation status following a change. This is the case in regulated environments such as pharmaceuticals and defence. The context for these activities is shown in Figure 4.36.

Figure 4.36 Context for qualification and validation activities

The inputs to these activities are the qualification plan and results and/or validation plan and results. The evaluation process ensures that the results meet the requirements of the plans. A qualification and/or validation statement is provided as output.
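The check that results meet the requirements of the plans can be sketched as a comparison of planned acceptance limits with recorded results. This is a hypothetical model: each plan is assumed to map a test identifier to a minimum acceptable result, and `review_against_plan`, `status_statement` and the identifiers `test-1` and `test-2` are invented. Real qualification and validation plans will usually express their requirements in more detail than a single threshold per test.

```python
def review_against_plan(plan: dict[str, float], results: dict[str, float]) -> list[str]:
    """Return the planned tests whose result is missing or below the required minimum."""
    return [
        test_id
        for test_id, minimum in plan.items()
        if test_id not in results or results[test_id] < minimum
    ]


def status_statement(activity: str, plan: dict[str, float], results: dict[str, float]) -> str:
    """Produce a qualification or validation statement.

    activity: "qualified" or "validated".
    """
    failures = review_against_plan(plan, results)
    if failures:
        return (
            f"The change has left the service in a state whereby it could not be "
            f"{activity} (failed or missing: {', '.join(failures)})"
        )
    return f"The results meet the plan; the service can be {activity}"


plan = {"test-1": 1.0, "test-2": 0.95}
results = {"test-1": 1.0, "test-2": 0.90}
print(status_statement("qualified", plan, results))
```

A missing result is treated the same as a failed one, on the assumption that a plan requirement without evidence cannot be shown to be met.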

Deviations Report

The difference between predicted and actual performance following the implementation of a change.

A qualification statement (if appropriate)

Following review of qualification test results and the qualification plan, a statement of whether or not the change has left the service in a state whereby it could not be qualified.

A validation statement (if appropriate)

Following review of validation test results and the validation plan, a statement of whether or not the change has left the service in a state whereby it could not be validated.

A Recommendation

Based on the other factors within the evaluation report, a recommendation to Change Management to accept or reject the change:

Inputs:

Outputs:

The internal KPIs include:
